AlphaGo versus Lee Sedol

[15] Elon Musk, an early investor in DeepMind, said in 2016 that experts in the field thought AI was 10 years away from achieving a victory against a top professional Go player.

By combining neural networks with Monte Carlo tree search, AlphaGo estimates the probabilities of vast numbers of likely and unlikely continuations many moves into the future [citation needed].
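The search half of that combination can be illustrated with a short sketch. This is not DeepMind's code: it assumes a PUCT-style selection rule, in which each candidate move's average evaluation Q is added to an exploration bonus that grows with the move's prior probability P (as a policy network would supply) and shrinks as the move's visit count N rises. The `Edge` class, the `select_move` and `backup` functions, the constant `c_puct = 5.0`, and the example numbers are all hypothetical. The real system also mixed value-network evaluations with fast rollouts, which this sketch omits.

```python
import math
from dataclasses import dataclass


@dataclass
class Edge:
    """Statistics for one candidate move at a search-tree node (hypothetical structure)."""
    prior: float            # P(s, a): move probability, e.g. from a policy network
    visits: int = 0         # N(s, a): number of simulations that took this move
    value_sum: float = 0.0  # sum of evaluations backed up through this move

    @property
    def q(self) -> float:
        # Q(s, a): mean evaluation; unvisited moves default to 0.0
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 5.0) -> str:
    """Return the move maximising Q(s, a) + u(s, a), a PUCT-style rule."""
    total_visits = sum(e.visits for e in edges.values())

    def score(edge: Edge) -> float:
        # Exploration bonus: large for high-prior, rarely visited moves,
        # shrinking as the move accumulates visits.
        u = c_puct * edge.prior * math.sqrt(total_visits) / (1 + edge.visits)
        return edge.q + u

    return max(edges, key=lambda move: score(edges[move]))


def backup(edge: Edge, value: float) -> None:
    """Record one simulation's evaluation on the edge it passed through."""
    edge.visits += 1
    edge.value_sum += value


if __name__ == "__main__":
    edges = {
        "A": Edge(prior=0.6, visits=10, value_sum=5.0),
        "B": Edge(prior=0.3, visits=2, value_sum=1.4),
        "C": Edge(prior=0.1),
    }
    print(select_move(edges))  # prints B
```

Here move "B" wins even though "A" has the larger prior: "A" has already been explored heavily, so its bonus has shrunk, while "B" combines a higher average evaluation with a larger remaining bonus. Setting `c_puct` to zero reduces the rule to picking the move with the best average evaluation so far.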

[22][23] Canadian AI specialist Jonathan Schaeffer, commenting after the win against Fan, compared AlphaGo with a "child prodigy" that lacked experience, and said that "the real achievement will be when the program plays a player in the true top echelon."

[20] In the aftermath of his match against AlphaGo, Fan Hui noted that the game had taught him to be a better player and to see things he had not previously seen.

[24] Go experts found errors in AlphaGo's play against Fan, particularly a lack of awareness of the whole board.

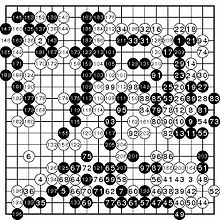

[39][40][41] Aja Huang, a DeepMind team member and amateur 6-dan Go player, placed stones on the Go board for AlphaGo, which ran on Google Cloud Platform, with its server located in the United States.

[46] Professional Go player Cho Hanseung commented that AlphaGo's game had greatly improved from when it beat Fan Hui in October 2015.

[50] One of the creators of AlphaGo, Demis Hassabis, said that the system was confident of victory from the midway point of the game, even though the professional commentators could not tell which player was ahead.

[30] An Younggil (8p) called AlphaGo's move 37 "a rare and intriguing shoulder hit" but said Lee's counter was "exquisite".

He stated that control passed between the players several times before the endgame, and especially praised AlphaGo's moves 151, 157, and 159, calling them "brilliant".

[51] AlphaGo played anomalous moves reflecting a broader perspective, which professional Go players described as looking like mistakes at first sight but as intentional strategy in hindsight.

An Younggil said, "So when AlphaGo plays a slack-looking move, we may regard it as a mistake, but perhaps it should more accurately be viewed as a declaration of victory?"

Lee chose to play an extreme strategy known as amashi, taking territory at the perimeter rather than the centre, in response to AlphaGo's apparent preference for Souba Go (attempting to win by many small gains when the opportunity arises).

[57] His apparent aim was to force an "all or nothing" situation, a possible weakness for an opponent strong at negotiation-style play, and one that might make AlphaGo's ability to secure slim advantages largely irrelevant.

In the early game, Lee concentrated on taking territory on the edges and in the corners of the board, allowing AlphaGo to gain influence at the top and in the centre.

Humans are taught to recognize the specific pattern, but it is a long sequence of moves that is difficult to compute from scratch.

AlphaGo then started to develop the top of the board and the centre and defended successfully against an attack by Lee in moves 69 to 81 that David Ormerod characterised as over-cautious.

By white 90, AlphaGo had regained equality and then played a series of moves described by Ormerod as "unusual... but subtly impressive", which gained a slight advantage.

An Younggil singled out white moves 154, 186, and 194 as particularly strong, and the program played an impeccable endgame, maintaining its lead until Lee resigned.

[65] Chinese-language coverage of game 1 with commentary by 9-dan players Gu Li and Ke Jie was provided by Tencent and LeTV respectively, reaching about 60 million viewers.

Deep Blue's Murray Campbell called AlphaGo's victory "the end of an era... board games are more or less done and it's time to move on."

[68] When compared with Deep Blue or with Watson, AlphaGo's underlying algorithms are potentially more general-purpose and may be evidence that the scientific community is making progress toward artificial general intelligence.

[74] Some commentators believe AlphaGo's victory offers society a good opportunity to start discussing preparations for the possible future impact of machines with general-purpose intelligence.

[78] The DeepMind AlphaGo Team received the inaugural IJCAI Marvin Minsky Medal for Outstanding Achievements in AI.

"AlphaGo is a wonderful achievement, and a perfect example of what the Minsky Medal was initiated to recognise", said Professor Michael Wooldridge, Chair of the IJCAI Awards Committee.

[68] AlphaGo appeared to have become unexpectedly much stronger, even compared with its October 2015 match against Fan Hui,[80] in which a computer had beaten a Go professional for the first time without the advantage of a handicap.

Toby Manning, the referee of AlphaGo's match against Fan Hui, and Hajin Lee, secretary general of the International Go Federation, both expect that Go players will in future use computers to learn what they have done wrong in games and to improve their skills.

[77][85] Lee said his eventual loss to a machine was "inevitable" but stated that "robots will never understand the beauty of the game the same way that we humans do."

[85] In response to the match, the South Korean government announced on 17 March 2016 that it would invest 1 trillion won (US$863 million) in artificial-intelligence (AI) research over the next five years.

[90][91] Official match commentary was provided by Michael Redmond (9-dan pro) and Chris Garlock on Google DeepMind's YouTube channel.