Amdahl's law

The law can be stated as: "the overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used".

[2]It is named after computer scientist Gene Amdahl, and was presented at the American Federation of Information Processing Societies (AFIPS) Spring Joint Computer Conference in 1967.

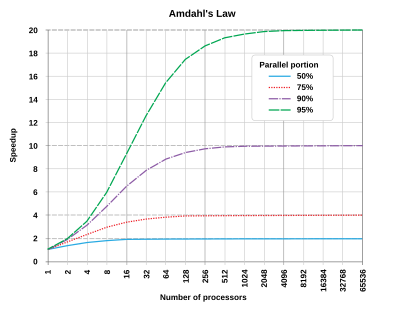

Amdahl's law is often used in parallel computing to predict the theoretical speedup when using multiple processors.

For instance, if a programmer enhances a part of the code that represents 10% of the total execution time (i.e.

In another example, if the programmer optimizes a section that accounts for 99% of the execution time (i.e.

In practice, as more computing resources become available, they tend to get used on larger problems (larger datasets), and the time spent in the parallelizable part often grows much faster than the inherently serial work.

In this case, Gustafson's law gives a less pessimistic and more realistic assessment of the parallel performance.

[10] Universal Scalability Law (USL), developed by Neil J. Gunther, extends the Amdahl's law and accounts for the additional overhead due to inter-process communication.

USL quantifies scalability based on parameters such as contention and coherency.

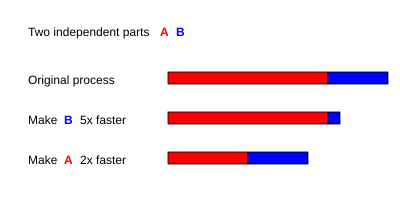

[11] A task executed by a system whose resources are improved compared to an initial similar system can be split up into two parts: An example is a computer program that processes files.

A part of that program may scan the directory of the disk and create a list of files internally in memory.

After that, another part of the program passes each file to a separate thread for processing.

The execution time of the whole task before the improvement of the resources of the system is denoted as

The fraction of the execution time of the task that would benefit from the improvement of the resources is denoted by

Then: It is the execution of the part that benefits from the improvement of the resources that is accelerated by the factor

, which yields If 30% of the execution time may be the subject of a speedup, p will be 0.3; if the improvement makes the affected part twice as fast, s will be 2.

Amdahl's law states that the overall speedup of applying the improvement will be: For example, assume that we are given a serial task which is split into four consecutive parts, whose percentages of execution time are p1 = 0.11, p2 = 0.18, p3 = 0.23, and p4 = 0.48 respectively.

The percentage improvement in speed can be calculated as If the non-parallelizable part is optimized by a factor of

, then It follows from Amdahl's law that the speedup due to parallelism is given by When

, meaning that the speedup is measured with respect to the execution time after the non-parallelizable part is optimized.

Amdahl's law does represent the law of diminishing returns if one is considering what sort of return one gets by adding more processors to a machine, if one is running a fixed-size computation that will use all available processors to their capacity.

Each new processor added to the system will add less usable power than the previous one.

Each time one doubles the number of processors the speedup ratio will diminish, as the total throughput heads toward the limit of 1/(1 − p).

An implication of Amdahl's law is that to speed up real applications which have both serial and parallel portions, heterogeneous computing techniques are required.

These modelling methods aim to predict system power efficiency and performance ranges, and facilitates research and development at the hardware and system software levels.