Array (data structure)

An array is stored such that the position of each element can be computed from its index tuple by a mathematical formula.

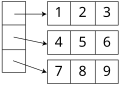

In most modern computers and many external storage devices, the memory is a one-dimensional array of words, whose indices are their addresses.

Among other things, this feature allows a single iterative statement to process arbitrarily many elements of an array.

John von Neumann wrote the first array-sorting program (merge sort) in 1945, during the building of the first stored-program computer.

Some mainframes designed in the 1960s, such as the Burroughs B5000 and its successors, used memory segmentation to perform index-bounds checking in hardware.

The earliest high-level programming languages, including FORTRAN (1957), Lisp (1958), COBOL (1960), and ALGOL 60 (1960), had support for multi-dimensional arrays, and so has C (1972).

Many databases, small and large, consist of (or include) one-dimensional arrays whose elements are records.

Arrays are used to implement other data structures, such as lists, heaps, hash tables, deques, queues, stacks, strings, and VLists.

Arrays can be used to determine partial or complete control flow in programs, as a compact alternative to (otherwise repetitive) multiple IF statements.

The number of indices needed to specify an element is called the dimension, dimensionality, or rank of the array.

Accessing its elements involves a single subscript which can either represent a row or column index.

For this reason, the C programming language specifies that array indices always begin at 0; and many programmers will call that element "zeroth" rather than "first".

The coefficients ck must be chosen so that every valid index tuple maps to the address of a distinct element.

[2][3] The size of each element, and the minimum and maximum values allowed for each index may also be included in the dope vector.

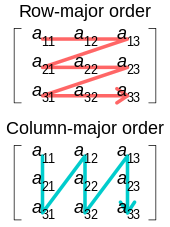

A programmer (or a sophisticated compiler) may use this information to choose between row- or column-major layout for each array.

Static arrays have a size that is fixed when they are created and consequently do not allow elements to be inserted or removed.

If this operation is done infrequently, insertions at the end of the array require only amortized constant time.

For a compact two-dimensional triangular array, for instance, the addressing formula is a polynomial of degree 2.

This is roughly a factor of B/k better than the number of cache misses needed to access n elements at random memory locations.

As a consequence, sequential iteration over an array is noticeably faster in practice than iteration over many other data structures, a property called locality of reference (this does not mean however, that using a perfect hash or trivial hash within the same (local) array, will not be even faster - and achievable in constant time).

The speedup of such optimized routines varies by array element size, architecture, and implementation.

Associative arrays provide a mechanism for array-like functionality without huge storage overheads when the index values are sparse.

Thus an element in row i and column j of an array A would be accessed by double indexing (A[i][j] in typical notation).

This alternative structure allows jagged arrays, where each row may have a different size—or, in general, where the valid range of each index depends on the values of all preceding indices.

Thus, if the array is seen as a function on a set of possible index combinations, it is the dimension of the space of which its domain is a discrete subset.