Cache coherence

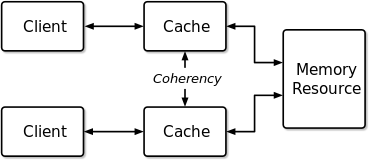

Cache coherence is the discipline that ensures that changes in the values of shared operands (data) are propagated throughout the system in a timely fashion.

[4] Coherence defines the behavior of reads and writes to a single address location.

To illustrate this, consider the following example: a multiprocessor system consists of four processors (P1, P2, P3 and P4), all holding cached copies of a shared variable S whose initial value is 0.

Therefore, in order to satisfy transaction serialization, and hence achieve cache coherence, the following condition, along with the previous two mentioned in this section, must be met: writes to the same location must be serialized, so that all processors observe them in the same order.

An alternative definition of a coherent system is given via the sequential consistency memory model: "the cache coherent system must appear to execute all threads’ loads and stores to a single memory location in a total order that respects the program order of each thread".

Another definition is: "a multiprocessor is cache consistent if all writes to the same memory location are performed in some sequential order".
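The serialization requirement can be made concrete with a small check. The following is a minimal sketch, not a model of any real hardware: it assumes a hypothetical history in which S, initially 0, is written with the values 10 and then 20, and it assumes that all written values are distinct. The function names and the sample views are illustrative only. A processor's view is accepted if the values it reads appear in the same relative order as the global write order; repeated reads of the same value are allowed.

```python
from itertools import groupby
from typing import Mapping, Sequence


def respects_write_order(observed: Sequence[int], write_order: Sequence[int]) -> bool:
    """Return True if the values read by one processor follow the global write order."""
    # Reading the same value several times in a row is harmless, so collapse repeats.
    collapsed = [value for value, _ in groupby(observed)]
    # Subsequence test: each `in` consumes the iterator up to the matched value.
    remaining = iter(write_order)
    return all(value in remaining for value in collapsed)


def is_coherent(views: Mapping[str, Sequence[int]], write_order: Sequence[int]) -> bool:
    """Return True if every processor's view is consistent with one write order."""
    return all(respects_write_order(view, write_order) for view in views.values())


# Hypothetical history of S: initial value 0, then writes of 10 and 20 (assumed values).
WRITE_ORDER = [0, 10, 20]

# P4 skips the intermediate value, which is still consistent with the write order.
coherent_views = {"P3": [0, 10, 20], "P4": [0, 20]}

# P3 sees 20 before 10, so P3 and P4 disagree on the order of the two writes.
incoherent_views = {"P3": [0, 20, 10], "P4": [0, 10, 20]}

print(is_coherent(coherent_views, WRITE_ORDER))
print(is_coherent(incoherent_views, WRITE_ORDER))
```

In the second set of views every write has been propagated, yet the system is not coherent, because the two observers disagree on the order in which the writes occurred.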

The two most common mechanisms for ensuring coherence are snooping and directory-based protocols, each with its own benefits and drawbacks.

[8] Snooping-based protocols tend to be faster, if enough bandwidth is available, since every transaction is a request/response seen by all processors.

Typically, early systems used directory-based protocols, in which a directory keeps track of the data being shared and of the sharers.
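The bookkeeping role of the directory can be sketched as follows. This is a simplified illustration under stated assumptions: the `Directory` class, its method names, and the invalidate-on-write policy are hypothetical choices made for the example, and real protocols track additional per-block state.

```python
from collections import defaultdict


class Directory:
    """Toy directory: records which caches hold a copy of each block."""

    def __init__(self):
        # Block address -> set of cache identifiers currently sharing the block.
        self.sharers = defaultdict(set)

    def read(self, cache_id, addr):
        """Register cache_id as a sharer of the block at addr."""
        self.sharers[addr].add(cache_id)

    def write(self, cache_id, addr):
        """Make cache_id the only holder; return the caches that must invalidate."""
        to_invalidate = self.sharers[addr] - {cache_id}
        self.sharers[addr] = {cache_id}
        return sorted(to_invalidate)


directory = Directory()
for cache in ("P1", "P2", "P3"):
    directory.read(cache, 0x40)

# Only the recorded sharers are contacted; P4 never cached the block.
print(directory.write("P2", 0x40))
```

Because the directory knows the sharers, a write results in messages only to the caches that actually hold the block rather than to every processor.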

In snoopy protocols, the transaction requests (to read, write, or upgrade) are sent out to all processors.
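The broadcast behaviour can be illustrated with a short simulation. This is a minimal sketch that assumes a write-invalidate policy; the `SnoopingCache` and `Bus` classes and their methods are hypothetical names introduced for the example, and the bus is modelled as a simple loop rather than real arbitration hardware.

```python
class SnoopingCache:
    """Toy cache that observes every transaction placed on the shared bus."""

    def __init__(self, name):
        self.name = name
        self.lines = {}    # address -> value; presence means a valid copy
        self.snooped = []  # log of every transaction seen on the bus

    def snoop(self, kind, addr, requester):
        self.snooped.append((kind, addr, requester))
        # Assumed write-invalidate policy: drop the local copy when
        # another processor writes or upgrades the same address.
        if kind in ("write", "upgrade") and requester != self.name:
            self.lines.pop(addr, None)


class Bus:
    """Shared bus that delivers each request to all attached caches."""

    def __init__(self, caches):
        self.caches = caches

    def broadcast(self, kind, addr, requester):
        for cache in self.caches:
            cache.snoop(kind, addr, requester)


caches = [SnoopingCache(name) for name in ("P1", "P2", "P3", "P4")]
for cache in caches:
    cache.lines["S"] = 0  # every processor starts with a cached copy of S

bus = Bus(caches)
bus.broadcast("write", "S", requester="P1")

# Names of the caches that still hold a valid copy of S after the write.
print([cache.name for cache in caches if "S" in cache.lines])
```

Every cache sees the request, including those that do not hold the block, which is why snooping depends on sufficient bus bandwidth.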