Centipede game

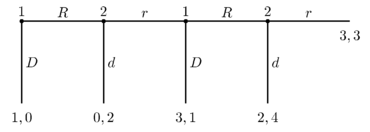

In game theory, the centipede game, first introduced by Robert Rosenthal in 1981, is an extensive-form game in which two players take turns choosing either to take a slightly larger share of an increasing pot or to pass the pot to the other player.

The unique subgame perfect equilibrium (and every Nash equilibrium) of these games results in the first player taking the pot in the first round of the game. In empirical tests, however, relatively few players do so and, as a result, they achieve higher payoffs than the subgame perfect and Nash equilibria predict.

One possible version of a centipede game is played by two players, Alice and Bob.

The game continues for a fixed number of rounds or until a player decides to end the game by pocketing a pile of coins. The addition of coins is treated as an externality, since neither player contributes it.
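Because the exact pile sizes are not spelled out here, the following is only a sketch under assumed numbers: a hypothetical four-node game in which the externally added coins make the joint payoff grow from 1 (if Player 1 takes at once) to 7 (if both players always pass). The names `TAKE`, `END` and `play` are illustrative, not part of any standard formulation.

```python
# Hypothetical payoffs (Player 1, Player 2) if the mover takes at node k.
# Nodes alternate: Player 1 moves at even k, Player 2 at odd k.
TAKE = [(1, 0), (0, 2), (3, 1), (2, 4)]
# Hypothetical payoffs if both players pass at every node.
END = (4, 3)


def play(stop1, stop2):
    """Return the payoffs when player i takes at their stop_i-th own turn (0-based).

    None means that player never takes.
    """
    stops = (stop1, stop2)
    for k, payoffs in enumerate(TAKE):
        mover = k % 2
        if stops[mover] == k // 2:
            return payoffs
    return END


print(play(0, 0))        # Player 1 takes immediately: (1, 0)
print(play(None, 1))     # Player 2 takes at her second turn: (2, 4)
print(play(None, None))  # nobody takes: (4, 3)
```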

Standard game-theoretic tools predict that the first player will defect in the first round, taking the pile of coins for himself.
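The backward-induction argument can be checked mechanically. The sketch below assumes a hypothetical four-node game (illustrative payoffs, not taken from any experiment) in which, at the last node, Player 2 compares 4 coins from taking against 3 from passing. `TAKE[k]` holds the (Player 1, Player 2) payoffs if the mover takes at node k and `END` the payoffs if nobody takes; the function name is illustrative.

```python
# Hypothetical payoffs (Player 1, Player 2) if the mover takes at node k;
# Player 1 moves at even k, Player 2 at odd k.
TAKE = [(1, 0), (0, 2), (3, 1), (2, 4)]
END = (4, 3)  # payoffs if both players always pass


def backward_induction(take, end):
    """Return the subgame perfect action at each node and the equilibrium payoffs."""
    value = end                    # payoffs if play continues past the current node
    actions = [None] * len(take)
    for k in reversed(range(len(take))):
        mover = k % 2
        # The mover takes only if that strictly beats what passing leads to
        # (ties are broken in favour of passing).
        if take[k][mover] > value[mover]:
            actions[k], value = "take", take[k]
        else:
            actions[k] = "pass"
    return actions, value


print(backward_induction(TAKE, END))  # (['take', 'take', 'take', 'take'], (1, 0))
```

Every node resolves to "take", so play ends at the first node with payoffs (1, 0), even though both players would get more at `END`.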

In the unique subgame perfect equilibrium, each player chooses to defect at every opportunity. If the last round of the game were reached, Player 2 would do better by taking (d) instead of passing (r), receiving 4 coins instead of 3. Anticipating this, Player 1 should defect in the round before, which in turn gives Player 2 a reason to defect one round earlier still. This reasoning proceeds backwards through the game tree until one concludes that the best action is for the first player to defect in the first round.

In the Nash equilibria, however, the actions that would be taken after the initial choice opportunities (even though they are never reached, since the first player defects immediately) may be cooperative. Nash equilibrium only requires that no player can gain by unilaterally changing strategy; unlike subgame perfection, it does not require optimal play in subgames that are never reached. This means that strategies that are cooperative in the never-reached later rounds of the game can still be part of a Nash equilibrium.
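A brute-force search over pure strategies makes the difference between Nash and subgame perfect equilibrium concrete. This is a sketch on a hypothetical four-node game (illustrative payoffs); a strategy is a tuple of booleans, one per own decision node, with `True` meaning "take". The helper names are illustrative.

```python
from itertools import product

# Hypothetical payoffs (Player 1, Player 2) if the mover takes at node k;
# Player 1 moves at even k, Player 2 at odd k.
TAKE = [(1, 0), (0, 2), (3, 1), (2, 4)]
END = (4, 3)  # payoffs if nobody ever takes


def outcome(s1, s2):
    """Payoffs reached when Player 1 plays s1 and Player 2 plays s2."""
    plans = (s1, s2)
    for k, payoffs in enumerate(TAKE):
        if plans[k % 2][k // 2]:
            return payoffs
    return END


def pure_nash_equilibria():
    """All strategy pairs in which neither player gains by deviating alone."""
    strategies = list(product([True, False], repeat=len(TAKE) // 2))
    equilibria = []
    for s1, s2 in product(strategies, strategies):
        u1, u2 = outcome(s1, s2)
        best1 = all(outcome(d, s2)[0] <= u1 for d in strategies)
        best2 = all(outcome(s1, d)[1] <= u2 for d in strategies)
        if best1 and best2:
            equilibria.append((s1, s2))
    return equilibria


for s1, s2 in pure_nash_equilibria():
    print(s1, s2, outcome(s1, s2))
```

With these payoffs the search finds four pure equilibria, all ending at the first node with (1, 0). One of them, `((True, False), (True, False))`, has both players planning to pass at their later nodes; it is a Nash equilibrium but not subgame perfect, because Player 2 would pass at the last node and get 3 instead of 4.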

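In experiments, however, equilibrium play is rarely observed. Instead, subjects regularly show partial cooperation, playing "R" (or "r"), that is, passing, for several moves before eventually choosing "D" (or "d"), that is, taking the pot.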

For examples, see McKelvey and Palfrey (1992) and Nagel and Tang (1998); Krockow et al. (2016)[1] provide a survey.[2]

Since the empirical studies have produced results that are inconsistent with the traditional equilibrium analysis, several explanations of this behavior have been offered.

McKelvey and Palfrey (1992) create a model with some altruistic agents and some rational agents, in which the rational agents end up playing a mixed strategy (i.e. they take the pot at several different nodes, each with some probability).

Some experiments tested whether players who pass a lot are also the most altruistic agents in other games or other life situations (see for instance Pulford et al.[4] or Gamba and Regner (2019)[5], who assessed Social Value Orientation).

Players who passed frequently were indeed more altruistic, but the difference was modest.

Rosenthal (1981) suggested that if one has reason to believe his opponent will deviate from Nash behavior, then it may be advantageous to not defect on the first round.

If there is a significant possibility of error in action, perhaps because your opponent has not reasoned completely through the backward induction, it may be advantageous (and rational) to cooperate in the initial rounds.
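A small expected-value calculation shows why doubt about the opponent can make early cooperation rational. This sketch is not McKelvey and Palfrey's model: it assumes a hypothetical four-node game and a deliberately simple opponent who, with probability `q`, always passes (an "altruist") and otherwise takes at her first opportunity, as in the subgame perfect equilibrium. All names are illustrative.

```python
# Hypothetical payoffs (Player 1, Player 2) if the mover takes at node k;
# Player 1 moves at even k, Player 2 at odd k.
TAKE = [(1, 0), (0, 2), (3, 1), (2, 4)]
END = (4, 3)  # payoffs if nobody ever takes

# Player 1's pure plans: take (True) or pass (False) at each of her two nodes.
PLANS = [(True, True), (True, False), (False, True), (False, False)]


def p1_payoff(plan, opponent_always_passes):
    """Player 1's payoff against an opponent who always passes or always takes."""
    for k, payoffs in enumerate(TAKE):
        if k % 2 == 0:                      # Player 1's node
            if plan[k // 2]:
                return payoffs[0]
        elif not opponent_always_passes:    # Player 2 takes at her first chance
            return payoffs[0]
    return END[0]


def best_first_move(q):
    """Player 1's best first move if Player 2 always passes with probability q.

    Assumption: with probability 1 - q, Player 2 takes at her first opportunity.
    """
    def expected(plan):
        return q * p1_payoff(plan, True) + (1 - q) * p1_payoff(plan, False)

    best = max(PLANS, key=expected)  # ties go to the first plan listed
    return "take" if best[0] else "pass"


print(best_first_move(0.2), best_first_move(0.3))  # take pass
```

With these numbers, passing all the way earns Player 1 an expected 4q against 1 for taking at once, so passing becomes the better first move once q exceeds 1/4.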

McKelvey and Palfrey (1995)[6] introduced the quantal response equilibrium, a model in which agents play Nash equilibrium with errors, and applied it to the Centipede game.

Parco, Rapoport and Stein (2002) showed that the level of financial incentives can strongly affect the outcome of a three-player version of the game: the larger the incentives to deviate, the more readily learning in a repeated single-play experimental design moves behavior toward the Nash equilibrium.

Palacios-Huerta and Volij (2009) find that expert chess players play differently from college students.

As Elo rating rises, the probability of continuing the game declines; all Grandmasters in the experiment stopped at their first chance.

They conclude that chess players are familiar with using backward induction reasoning and hence need less learning to reach the equilibrium.

However, in an attempt to replicate these findings, Levitt, List, and Sadoff (2010) find strongly contradictory results, with none of the sixteen Grandmasters stopping the game at the first node.

Qualitative research by Krockow et al., which employed think-aloud protocols that required players in a Centipede game to vocalise their reasoning during the game, indicated a range of decision biases, such as action bias or completion bias, that may drive irrational choices in the game.[8]

Like the prisoner's dilemma, this game presents a conflict between self-interest and mutual benefit.

Additionally, Binmore (2005) has argued that some real-world situations can be described by the Centipede game.

One example Binmore (2005) likens to the Centipede game is the mating behavior of a hermaphroditic sea bass, in which partners take turns exchanging eggs to fertilize.

Since the payoffs for some amount of cooperation in the Centipede game are so much larger than immediate defection, the "rational" solutions given by backward induction can seem paradoxical.
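A quick calculation shows how large the gap can become. The sketch assumes a hypothetical doubling variant (not described in this article) with piles of 4 and 1 coins that double every time they are passed; the function name and parameters are illustrative.

```python
def doubling_centipede(rounds, large=4, small=1):
    """Hypothetical (taker, other) payoffs if the piles are taken at node k.

    The piles start at `large` and `small` coins and double on every pass.
    """
    return [(large * 2**k, small * 2**k) for k in range(rounds)]


payoffs = doubling_centipede(6)
immediate = sum(payoffs[0])  # first player takes at once: 4 + 1 coins
last = sum(payoffs[-1])      # piles passed until the last mover takes: 128 + 32
print(immediate, last, last / immediate)  # prints: 5 160 32.0
```

Under these assumptions, six rounds of passing multiply the joint payoff by 32 compared with the backward-induction outcome.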

This, coupled with the fact that experimental subjects regularly cooperate in the Centipede game, has prompted debate over the usefulness of the idealizations involved in the backward induction solutions; see Aumann (1995, 1996) and Binmore (1996).