Ceph (software)

Ceph is highly available and ensures strong data durability through techniques including replication, erasure coding, snapshots and clones.
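
The trade-off between replication and erasure coding can be illustrated with a little arithmetic. The following is a minimal sketch, not Ceph code: the function names are hypothetical, and it assumes the common model in which replication stores N full copies while erasure coding splits each object into k data chunks plus m coding chunks.

```python
def replication_overhead(copies: int) -> float:
    """Raw bytes stored per byte of user data when keeping `copies` full copies."""
    if copies < 1:
        raise ValueError("at least one copy is required")
    return float(copies)


def erasure_overhead(k: int, m: int) -> float:
    """Raw bytes stored per byte of user data with k data and m coding chunks."""
    if k < 1 or m < 0:
        raise ValueError("need k >= 1 and m >= 0")
    return (k + m) / k


def failures_tolerated_replication(copies: int) -> int:
    """Copies that can be lost while one intact copy still remains."""
    return copies - 1


def failures_tolerated_erasure(m: int) -> int:
    """Chunks that can be lost while the object stays reconstructible."""
    return m


if __name__ == "__main__":
    # Three-way replication versus a 4+2 erasure-coded layout.
    print(replication_overhead(3), failures_tolerated_replication(3))  # 3.0 2
    print(erasure_overhead(4, 2), failures_tolerated_erasure(2))       # 1.5 2
```

Under these assumptions both layouts survive the loss of two devices, but the erasure-coded one needs half the raw capacity, at the cost of extra computation when writing and recovering data.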

[15][16] Ceph employs five distinct kinds of daemons,[17] all of which are fully distributed and may be deployed on disjoint, dedicated servers or in a converged topology.

[21] Ceph distributes data across multiple storage devices and nodes to achieve higher throughput, in a fashion similar to RAID.
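
The RAID analogy can be made concrete with a simplified striping sketch. This is only an illustration of the RAID 0 idea: the helper names `stripe` and `unstripe` are hypothetical, and Ceph itself decides where data lives with its CRUSH placement algorithm rather than with a fixed round-robin scheme like the one below.

```python
from typing import List


def stripe(data: bytes, unit: int, devices: int) -> List[bytes]:
    """Deal `data` out round-robin, `unit` bytes at a time, over `devices` buffers."""
    if unit < 1 or devices < 1:
        raise ValueError("unit and devices must be positive")
    lanes = [bytearray() for _ in range(devices)]
    for start in range(0, len(data), unit):
        lane = (start // unit) % devices
        lanes[lane] += data[start:start + unit]
    return [bytes(lane) for lane in lanes]


def unstripe(lanes: List[bytes], unit: int, total_len: int) -> bytes:
    """Reassemble the original byte string from the per-device buffers."""
    out = bytearray()
    offsets = [0] * len(lanes)
    lane = 0
    while len(out) < total_len:
        piece = lanes[lane][offsets[lane]:offsets[lane] + unit]
        if not piece:
            raise ValueError("buffers do not contain total_len bytes")
        out += piece
        offsets[lane] += unit
        lane = (lane + 1) % len(lanes)
    return bytes(out)


if __name__ == "__main__":
    parts = stripe(b"abcdefghij", unit=2, devices=3)
    print(parts)                    # [b'abgh', b'cdij', b'ef']
    print(unstripe(parts, 2, 10))   # b'abcdefghij'
```

Because consecutive units land on different devices, a large sequential read or write can be served by several devices in parallel, which is the source of the throughput gain.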

[22] As of September 2017, BlueStore is the default and recommended storage back end for production environments.[23] It provides better latency and configurability than the older Filestore back end and avoids the shortcomings of filesystem-based storage, which involves additional processing and caching layers.

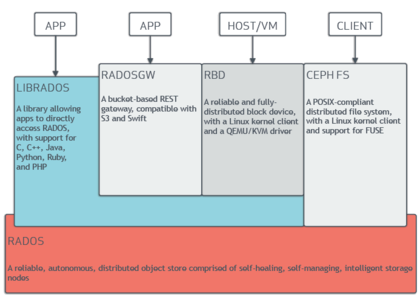

[26] Ceph's software libraries provide client applications with direct access to the reliable autonomic distributed object store (RADOS) object-based storage system.
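
As an illustration of such direct access, the sketch below writes an object and reads it back through the `rados` Python binding that ships with Ceph. It assumes a reachable cluster, an existing pool, and a configuration file at `/etc/ceph/ceph.conf`; the function name `rados_roundtrip` and the pool and object names in the usage note are hypothetical.

```python
def rados_roundtrip(pool, name, payload, conffile="/etc/ceph/ceph.conf"):
    """Store `payload` as RADOS object `name` in `pool`, then read it back."""
    # Imported lazily so that this file still loads on machines where the
    # Ceph Python bindings are not installed.
    import rados

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    try:
        ioctx = cluster.open_ioctx(pool)   # I/O context bound to one pool
        try:
            ioctx.write_full(name, payload)        # replace the whole object
            return ioctx.read(name, len(payload))  # read the same bytes back
        finally:
            ioctx.close()
    finally:
        cluster.shutdown()
```

A call such as `rados_roundtrip("mypool", "greeting", b"hello")` would be expected to return the bytes that were written, provided the client has permission to use that pool.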

The RADOS Gateway also exposes the object store through a RESTful interface that can present both native Amazon S3 and OpenStack Swift APIs.

Block storage clients often require high throughput and IOPS, so Ceph RBD deployments increasingly use SSDs with NVMe interfaces.

"Ceph-iSCSI" is a gateway which enables access to distributed, highly available block storage from Microsoft Windows and VMware vSphere servers or clients capable of speaking the iSCSI protocol.

Modern storage devices and interfaces, including NVMe and 3D XPoint, have become much faster than HDDs and even SAS/SATA SSDs, but CPU performance has not kept pace.

While Crimson can work with the BlueStore back end (via AlienStore), a new native ObjectStore implementation called SeaStore is also being developed along with CyanStore for testing purposes.

One reason for creating SeaStore is that transaction support in the BlueStore back end is provided by RocksDB, which needs to be re-implemented to achieve better parallelism.

[35] In 2005, as part of a summer project initiated by Scott A. Brandt and led by Carlos Maltzahn, Sage Weil created a fully functional file system prototype which adopted the name Ceph.

[37] After his graduation in autumn 2007, Weil continued to work on Ceph full-time, and the core development team expanded to include Yehuda Sadeh Weinraub and Gregory Farnum.

[4] The name "Ceph" is a shortened form of "cephalopod", a class of molluscs that includes squids, cuttlefish, nautiloids, and octopuses.

The name (emphasized by the logo) suggests the highly parallel behavior of an octopus and was chosen to associate the file system with "Sammy", the banana slug mascot of UCSC.