Error correction code

[5] FEC can be applied in situations where re-transmissions are costly or impossible, such as one-way communication links or when transmitting to multiple receivers in multicast.

Long-latency connections also benefit; in the case of satellites orbiting distant planets, retransmission due to errors would create a delay of several hours.

The Viterbi decoder implements a soft-decision algorithm to demodulate digital data from an analog signal corrupted by noise.
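To make the idea concrete, here is a minimal Python sketch of soft-decision Viterbi decoding. Every specific in it is an assumption chosen for illustration, not something this section prescribes: a rate-1/2 convolutional code with constraint length 3 and generator polynomials 7 and 5 (octal), BPSK mapping of bit 0 to +1 and bit 1 to -1, a zero tail that returns the encoder to state 0, and squared Euclidean distance as the branch metric. For each encoder state the decoder keeps only the lowest-metric path reaching it. Because it compares real-valued samples rather than sliced bits, a weak sample counts for less than a strong one.

```python
import math

# Assumed code for illustration: rate 1/2, constraint length K = 3,
# generator polynomials 0b111 and 0b101 (7 and 5 in octal).
G = (0b111, 0b101)
K = 3
N_STATES = 1 << (K - 1)  # state = the two previous input bits


def parity(x):
    return bin(x).count("1") & 1


def branch_output(state, bit):
    """Coded bits produced when `bit` enters the shift register in `state`."""
    reg = (bit << (K - 1)) | state  # newest bit in the most significant position
    return [parity(reg & g) for g in G]


def next_state(state, bit):
    return ((bit << (K - 1)) | state) >> 1  # shift in the new bit, drop the oldest


def encode(bits):
    state, out = 0, []
    for b in list(bits) + [0] * (K - 1):  # zero tail returns the encoder to state 0
        out.extend(branch_output(state, b))
        state = next_state(state, b)
    return out


def bpsk(coded):
    return [1.0 - 2.0 * c for c in coded]  # bit 0 -> +1.0, bit 1 -> -1.0


def viterbi_soft(samples, n_msg):
    """Find the path with the smallest total squared Euclidean distance to the samples."""
    n = len(G)
    metric = [0.0] + [math.inf] * (N_STATES - 1)  # encoder starts in state 0
    paths = [[] for _ in range(N_STATES)]
    for t in range(0, len(samples), n):
        y = samples[t:t + n]
        new_metric = [math.inf] * N_STATES
        new_paths = [None] * N_STATES
        for s in range(N_STATES):
            if metric[s] == math.inf:
                continue  # state not reachable yet
            for b in (0, 1):
                expected = bpsk(branch_output(s, b))
                # Soft metric: distance between the raw samples and the ideal symbols
                d = sum((yi - ei) ** 2 for yi, ei in zip(y, expected))
                ns = next_state(s, b)
                if metric[s] + d < new_metric[ns]:  # keep only the survivor path
                    new_metric[ns] = metric[s] + d
                    new_paths[ns] = paths[s] + [b]
        metric, paths = new_metric, new_paths
    return paths[0][:n_msg]  # the zero tail ends in state 0; drop the tail bits


msg = [1, 0, 1, 1, 0, 0, 1]
rx = bpsk(encode(msg))
rx[2] = -rx[2]   # one sample pushed all the way to the wrong sign
rx[5] *= 0.1     # one sample attenuated to near zero (low confidence)
print(viterbi_soft(rx, len(msg)) == msg)  # True
```

The squared Euclidean metric is the maximum-likelihood choice for BPSK in Gaussian noise; a hard-decision variant would slice the samples first and use Hamming distance instead.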

Many FEC decoders can also generate a bit-error rate (BER) signal which can be used as feedback to fine-tune the analog receiving electronics.
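The section does not say how a decoder derives its BER signal. One simple approach, assumed here purely for illustration, is to count how many received bits the decoder had to correct: re-encode the decoded message and compare it with the hard-decided input. The sketch uses a rate-1/3 repetition code with majority-vote decoding, and `estimate_channel_ber` is a hypothetical name, not the API of any real receiver.

```python
def majority_decode(hard_bits, n=3):
    """Decode a rate-1/n repetition code by majority vote over each group of n bits."""
    return [int(sum(hard_bits[i:i + n]) > n // 2) for i in range(0, len(hard_bits), n)]


def estimate_channel_ber(hard_bits, n=3):
    """Hypothetical helper: estimate the raw channel BER from the corrections made.

    Each decoded bit is re-encoded and compared with what was actually received;
    every disagreement is a bit the decoder corrected.
    """
    decoded = majority_decode(hard_bits, n)
    reencoded = [b for b in decoded for _ in range(n)]
    flips = sum(r != e for r, e in zip(hard_bits, reencoded))
    return decoded, flips / len(hard_bits)


# Message [1, 0, 1, 1] sent as 111 000 111 111, received with two flipped bits
decoded, ber = estimate_channel_ber([1, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0])
print(decoded, round(ber, 3))  # [1, 0, 1, 1] 0.167
```

The estimate is biased low: when a group has more errors than the code can correct, the decoder settles on the wrong codeword, and the re-encoded bits then agree with some of the corrupted ones.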

FEC information is added to mass storage (magnetic, optical and solid state/flash based) devices to enable recovery of corrupted data, and is used in ECC computer memory on systems that require special provisions for reliability.

Shannon's noisy-channel coding theorem establishes bounds on the theoretical maximum information transfer rate of a channel with a given base noise level.
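Two standard closed forms make this bound concrete (the section itself does not state them). For a binary symmetric channel that flips each bit with probability p, the capacity is C = 1 - H2(p) bits per channel use, where H2 is the binary entropy function. For a band-limited channel with additive white Gaussian noise, the Shannon-Hartley formula gives C = B log2(1 + S/N) bits per second. The function names below are illustrative.

```python
import math


def binary_entropy(p):
    """H2(p) in bits; H2(0) = H2(1) = 0 by convention."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """Capacity of a binary symmetric channel with crossover probability p (bits per use)."""
    return 1.0 - binary_entropy(p)


def awgn_capacity(bandwidth_hz, snr_linear):
    """Shannon-Hartley capacity of a band-limited AWGN channel (bit/s)."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


def db_to_linear(db):
    return 10 ** (db / 10)


print(round(bsc_capacity(0.11), 3))                  # about 0.5 bit per channel use
print(round(awgn_capacity(3000, db_to_linear(30))))  # about 29,900 bit/s for 3 kHz at 30 dB
```

For example, a channel that flips 11% of its bits has a capacity of roughly 0.5 bit per use, so no code, however clever, can reliably carry more than about one message bit per two transmitted bits over it.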

After years of research, some advanced FEC systems like polar codes[3] come very close to the theoretical maximum given by the Shannon channel capacity under the hypothesis of an infinite-length frame.

[7] Classical block codes are usually decoded using hard-decision algorithms,[9] which means that for every input and output signal a hard decision is made as to whether it corresponds to a one or a zero bit.
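The cost of deciding too early shows up on a tiny example. The sketch below assumes BPSK (bit 0 sent as +1, bit 1 as -1) and a three-fold repetition code, both chosen for illustration, and decodes the same received samples twice: once by slicing each sample to a bit and taking a majority vote (hard decision), and once by summing the raw samples before deciding (soft decision). The function names are hypothetical.

```python
def hard_decision(samples):
    """Slice each received sample to a bit: negative -> 1, otherwise 0."""
    return [1 if y < 0 else 0 for y in samples]


def decode_repetition_hard(samples, n=3):
    """Hard decisions first, then a majority vote over each group of n."""
    bits = hard_decision(samples)
    return [int(sum(bits[i:i + n]) > n // 2) for i in range(0, len(bits), n)]


def decode_repetition_soft(samples, n=3):
    """Sum the raw samples of each group and decide once, keeping reliability information."""
    return [1 if sum(samples[i:i + n]) < 0 else 0 for i in range(0, len(samples), n)]


# Bit 0 was sent three times as +1; noise pushed two samples slightly negative.
rx = [0.9, -0.1, -0.2]
print(decode_repetition_hard(rx))  # [1]  (wrong)
print(decode_repetition_soft(rx))  # [0]  (correct)
```

Under hard decisions the two weak negative samples outvote the one strong positive sample; the soft sum (0.6) still points to the transmitted bit.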

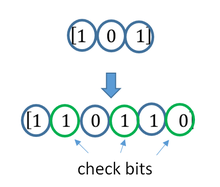

[10] The fundamental principle of ECC is to add redundant bits in order to help the decoder to find out the true message that was encoded by the transmitter.
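A classic illustration of this principle, used here as an example rather than anything the section prescribes, is the Hamming(7,4) code: three parity bits are added to every four data bits, and the pattern of failed parity checks (the syndrome) spells out the position of any single flipped bit. The function names are illustrative.

```python
def hamming74_encode(d):
    """Encode 4 data bits as [p1, p2, d1, p3, d2, d3, d4] (codeword positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]  # code rate 4/7


def hamming74_decode(c):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(c)
    # XOR of the 1-based positions holding a 1: zero for a valid codeword,
    # otherwise the position of a single flipped bit.
    syndrome = 0
    for pos in range(1, 8):
        if c[pos - 1]:
            syndrome ^= pos
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


word = hamming74_encode([1, 0, 1, 1])
word[4] ^= 1                    # flip one bit in transit
print(hamming74_decode(word))   # [1, 0, 1, 1]
```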

On the other extreme, not using any ECC (i.e., a code-rate equal to 1) uses the full channel for information transfer purposes, at the cost of leaving the bits without any additional protection.
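For reference, the code rate is conventionally defined as the fraction of transmitted bits that carry information, for a code that maps k information bits to an n-bit codeword:

```latex
R = \frac{k}{n}, \qquad 0 < R \le 1, \qquad \text{redundant bits per codeword} = n - k
```

Sending the data uncoded (n = k) gives R = 1, while a three-fold repetition code (k = 1, n = 3) gives R = 1/3 and spends two thirds of the channel on redundancy.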

One interesting question is the following: how efficient, in terms of information transfer, can an ECC with a negligible decoding error rate be?

The upper bound given by Shannon's work inspired a long journey in designing ECCs that can come close to the ultimate performance boundary.

Usually, this optimization is done in order to achieve a low decoding error probability while minimizing the impact to the data rate.

Concatenated codes have been standard practice in satellite and deep space communications since Voyager 2 first used the technique in its 1986 encounter with Uranus.

The Galileo craft used iterative concatenated codes to compensate for the very high error rate conditions caused by its failed antenna.

They can provide performance very close to the channel capacity (the theoretical maximum) using an iterated soft-decision decoding approach, at linear time complexity in terms of their block length.

One of the earliest commercial applications of turbo coding was the CDMA2000 1x (TIA IS-2000) digital cellular technology developed by Qualcomm and sold by Verizon Wireless, Sprint, and other carriers.

This can make sense in a streaming setting, where codewords are too large to be classically decoded fast enough and where only a few bits of the message are of interest for now.
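Recovering a single message bit without decoding the whole codeword is what locally decodable codes are designed for. The Hadamard code is a standard textbook example, used here as an assumed illustration with hypothetical function names. Codeword position a holds the parity of the bitwise AND of the message with a, so by linearity the bits at a random position r and at r XOR e_i differ exactly by message bit i. Repeating this two-query test for a few random r and taking a majority vote tolerates a small fraction of corrupted positions. The price is an exponentially long codeword (2^k bits for k message bits).

```python
import random


def hadamard_encode(msg):
    """Codeword bit at index a is the GF(2) inner product <msg, a>; length 2**k."""
    k = len(msg)
    m = sum(bit << i for i, bit in enumerate(msg))  # pack message bits, bit i at position i
    return [bin(m & a).count("1") & 1 for a in range(1 << k)]


def local_decode_bit(word, k, i, trials=15, rng=random):
    """Recover message bit i by reading only 2 * trials positions of a noisy codeword."""
    votes = 0
    for _ in range(trials):
        r = rng.randrange(1 << k)
        # <m, r> XOR <m, r XOR e_i> = <m, e_i> = m_i, whatever r is
        votes += word[r] ^ word[r ^ (1 << i)]
    return int(votes > trials // 2)


msg = [1, 0, 1, 1, 0, 0, 1, 0]
word = hadamard_encode(msg)          # 256 bits
for p in (3, 50, 77, 100, 200):      # corrupt about 2% of the positions
    word[p] ^= 1
print(local_decode_bit(word, len(msg), 3, rng=random.Random(0)))  # recovers msg[3]
```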

[19][20] Interleaving is frequently used in digital communication and storage systems to improve the performance of forward error correcting codes.

Interleaving alleviates the problem of burst errors, which can exceed a code's correction capability within a single codeword, by shuffling source symbols across several codewords, thereby creating a more uniform distribution of errors.

With interleaving, each of the codewords "aaaa", "eeee", "ffff", and "gggg" has only one bit altered, so a one-bit error-correcting code will decode everything correctly.
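This scenario can be reproduced with a small block interleaver. In the sketch below, the seven four-symbol codewords, the burst position, and the function names are assumptions chosen to match the codewords named above. The interleaver writes the codewords as rows of a table, transmits the table column by column, and a burst of four consecutive symbols is then overwritten with "_".

```python
def interleave(codewords):
    """Block interleaver: write equal-length codewords as rows, read the table by columns."""
    return "".join("".join(col) for col in zip(*codewords))


def deinterleave(stream, n_codewords):
    """Inverse of interleave(): symbol k of the stream belongs to codeword k % n_codewords."""
    return ["".join(stream[r::n_codewords]) for r in range(n_codewords)]


def burst(stream, start, length, mark="_"):
    """Overwrite `length` consecutive symbols to model a burst error."""
    return stream[:start] + mark * length + stream[start + length:]


words = ["aaaa", "bbbb", "cccc", "dddd", "eeee", "ffff", "gggg"]

# Without interleaving the burst lands inside neighbouring codewords.
print(burst("".join(words), 11, 4))  # aaaabbbbccc____deeeeffffgggg

# With interleaving the same burst is spread across four codewords.
received = burst(interleave(words), 11, 4)
print(received)                            # abcdefgabcd____bcdefgabcdefg
print(deinterleave(received, len(words)))  # ['aa_a', 'bbbb', 'cccc', 'dddd', 'e_ee', 'f_ff', 'g_gg']
```

Without interleaving, the burst destroys three symbols of "dddd", which a one-bit error-correcting code cannot repair; after deinterleaving, no codeword has more than one damaged symbol.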

For instance, in 5G, the software ECCs could be located in the cloud, with the antennas connected to these computing resources, thereby improving the flexibility of the communication network and potentially increasing the energy efficiency of the system.