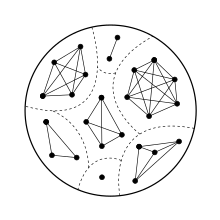

Component (graph theory)

The components of a graph can be constructed in linear time, and a special case of the problem, connected-component labeling, is a basic technique in image analysis.

Dynamic connectivity algorithms maintain components as edges are inserted or deleted in a graph, in low time per change.

In computational complexity theory, connected components have been used to study algorithms with limited space complexity, and sublinear time algorithms can accurately estimate the number of components.

[2] Additional examples include the following special cases: Another definition of components involves the equivalence classes of an equivalence relation defined on the graph's vertices.

, or equivalently a walk (a path allowing repeated vertices and edges).

The components are then the induced subgraphs formed by each of these equivalence classes.

[7] Alternatively, some sources define components as the sets of vertices rather than as the subgraphs they induce.

The rank of the dual cographic matroid equals the circuit rank of the graph, the minimum number of edges that must be removed from the graph to break all its cycles.

[12] A graph can be interpreted as a topological space in multiple ways, for instance by placing its vertices as points in general position in three-dimensional Euclidean space and representing its edges as line segments between those points.

[13] The components of a graph can be generalized through these interpretations as the topological connected components of the corresponding space; these are equivalence classes of points that cannot be separated by pairs of disjoint closed sets.

[3] The number of components arises in other ways in graph theory as well.

[15] Numbers of components play a key role in the Tutte theorem characterizing finite graphs that have perfect matchings[16] and the associated Tutte–Berge formula for the size of a maximum matching,[17] and in the definition of graph toughness.

[18] It is straightforward to compute the components of a finite graph in linear time (in terms of the numbers of the vertices and edges of the graph) using either breadth-first search or depth-first search.

All components of a graph can be found by looping through its vertices, starting a new breadth-first or depth-first search whenever the loop reaches a vertex that has not already been included in a previously found component.

Hopcroft & Tarjan (1973) describe essentially this algorithm, and state that it was already "well known".

[19] Connected-component labeling, a basic technique in computer image analysis, involves the construction of a graph from the image and component analysis on the graph.

The vertices are the subset of the pixels of the image, chosen as being of interest or as likely to be part of depicted objects.

Edges connect adjacent pixels, with adjacency defined either orthogonally according to the Von Neumann neighborhood, or both orthogonally and diagonally according to the Moore neighborhood.

Identifying the connected components of this graph allows additional processing to find more structure in those parts of the image or identify what kind of object is depicted.

Researchers have developed component-finding algorithms specialized for this type of graph, allowing it to be processed in pixel order rather than in the more scattered order that would be generated by breadth-first or depth-first searching.

[20] There are also efficient algorithms to dynamically track the components of a graph as vertices and edges are added, by using a disjoint-set data structure to keep track of the partition of the vertices into equivalence classes, replacing any two classes by their union when an edge connecting them is added.

[21] One application of this sort of incremental connectivity algorithm is in Kruskal's algorithm for minimum spanning trees, which adds edges to a graph in sorted order by length and includes an edge in the minimum spanning tree only when it connects two different components of the previously-added subgraph.

per connectivity query,[23] or in near-logarithmic randomized expected time.

[24] Components of graphs have been used in computational complexity theory to study the power of Turing machines that have a working memory limited to a logarithmic number of bits, with the much larger input accessible only through read access rather than being modifiable.

The problems that can be solved by machines limited in this way define the complexity class L. It was unclear for many years whether connected components could be found in this model, when formalized as a decision problem of testing whether two vertices belong to the same component, and in 1982 a related complexity class, SL, was defined to include this connectivity problem and any other problem equivalent to it under logarithmic-space reductions.

[25] It was finally proven in 2008 that this connectivity problem can be solved in logarithmic space, and therefore that SL = L.[26] In a graph represented as an adjacency list, with random access to its vertices, it is possible to estimate the number of connected components, with constant probability of obtaining additive (absolute) error at most

In the same model of random graphs, there will exist multiple connected components with high probability for values of

This phenomenon is closely related to the coupon collector's problem: in order to be connected, a random graph needs enough edges for each vertex to be incident to at least one edge.

More precisely, if random edges are added one by one to a graph, then with high probability the first edge whose addition connects the whole graph touches the last isolated vertex.

[32] For different models including the random subgraphs of grid graphs, the connected components are described by percolation theory.