Convolutional code

The sliding application represents the 'convolution' of the encoder over the data, which gives rise to the term 'convolutional coding'.

The ability to perform economical maximum likelihood soft decision decoding is one of the major benefits of convolutional codes.

This is in contrast to classic block codes, which are generally represented by a time-variant trellis and therefore are typically hard-decision decoded.

The memory is often called the "constraint length" K, where the output is a function of the current input as well as the previous

The performance of a punctured convolutional code generally scales well with the amount of parity transmitted.

It was thought that convolutional codes could be decoded with arbitrary quality at the expense of computation and delay.

Recursive systematic convolutional codes were invented by Claude Berrou around 1991.

Convolutional codes are used extensively to achieve reliable data transfer in numerous applications, such as digital video, radio, mobile communications (e.g., in GSM, GPRS, EDGE and 3G networks (until 3GPP Release 7)[3][4]) and satellite communications.

Prior to turbo codes such constructions were the most efficient, coming closest to the Shannon limit.

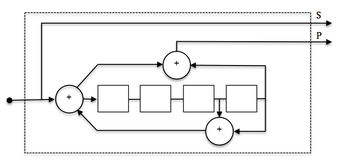

To convolutionally encode data, start with k memory registers, each holding one input bit.

Using the generator polynomials and the existing values in the remaining registers, the encoder outputs n symbols.

Codes with output symbols that do not include the input data are called non-systematic.

A convolutional encoder is called so because it performs a convolution of the input stream with the encoder's impulse responses: where x is an input sequence, yj is a sequence from output j, hj is an impulse response for output j and

Every output of an encoder can be described by its own transfer function, which is closely related to the generator polynomial.

All possible transitions can be shown as below: An actual encoded sequence can be represented as a path on this graph.

This diagram gives us an idea about decoding: if a received sequence doesn't fit this graph, then it was received with errors, and we must choose the nearest correct (fitting the graph) sequence.

It can be calculated as Since a convolutional code doesn't use blocks, processing instead a continuous bitstream, the value of t applies to a quantity of errors located relatively near to each other.

Free distance can be interpreted as the minimal length of an erroneous "burst" at the output of a convolutional decoder.

The popular solution for this problem is to interleave data before convolutional encoding, so that the outer block (usually Reed–Solomon) code can correct most of the errors.

For relatively small values of k, the Viterbi algorithm is universally used as it provides maximum likelihood performance and is highly parallelizable.

Viterbi decoders are thus easy to implement in VLSI hardware and in software on CPUs with SIMD instruction sets.

Both Viterbi and sequential decoding algorithms return hard decisions: the bits that form the most likely codeword.

An approximate confidence measure can be added to each bit by use of the Soft output Viterbi algorithm.

Maximum a posteriori (MAP) soft decisions for each bit can be obtained by use of the BCJR algorithm.

In fact, predefined convolutional codes structures obtained during scientific researches are used in the industry.

This relates to the possibility to select catastrophic convolutional codes (causes larger number of errors).

An especially popular Viterbi-decoded convolutional code, used at least since the Voyager program, has a constraint length K of 7 and a rate r of 1/2.

The convolutional code with a constraint length of 2 and a rate of 1/2 is used in GSM as an error correction technique.

The specific order of transmission is defined by the respective communication standard.

Punctured convolutional codes are widely used in the satellite communications, for example, in Intelsat systems and Digital Video Broadcasting.

![{\displaystyle h^{1}=171_{o}=[1111001]_{b}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1691b8f48b9ce15cbca503b5205617f49cf91312)

![{\displaystyle h^{2}=133_{o}=[1011011]_{b}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/fffca9507c72d521bdeef60a5375cdd71d7b0d6d)