Corner detection

Corner detection is an approach used within computer vision systems to extract certain kinds of features and infer the contents of an image.

Corner detectors are not usually very robust and often require large redundancies to be introduced to prevent the effect of individual errors from dominating the recognition task.

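To test whether a corner is present at a pixel, the patch centred on that pixel is compared with nearby, largely overlapping patches. The similarity is measured by taking the sum of squared differences (SSD) between the corresponding pixels of two patches.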

The corner strength is defined as the smallest SSD between the patch and its neighbours (horizontal, vertical and on the two diagonals).

The reasoning is that if this number is high, then the variation along every shift is at least as large, which captures the fact that all nearby patches look different.
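A minimal NumPy sketch of this SSD-based corner strength, assuming a square window of odd width and unit shifts in the eight directions listed above. The function name `moravec` and its `window` parameter are illustrative, not taken from any particular library.

```python
import numpy as np

# Unit shifts (dy, dx): horizontal, vertical and the two diagonals.
SHIFTS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def moravec(image, window=3):
    """Corner strength = smallest SSD between the patch at each pixel
    and the same-size patch shifted by one pixel in each of 8 directions."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    r = window // 2
    pad = r + 1  # room for the window plus a one-pixel shift
    strength = np.zeros_like(img)
    for y in range(pad, h - pad):
        for x in range(pad, w - pad):
            patch = img[y - r:y + r + 1, x - r:x + r + 1]
            ssds = []
            for dy, dx in SHIFTS:
                shifted = img[y + dy - r:y + dy + r + 1, x + dx - r:x + dx + r + 1]
                ssds.append(np.sum((shifted - patch) ** 2))
            strength[y, x] = min(ssds)
    return strength
```

On a bright square, a pixel in the middle of a straight side gets strength 0, because shifting along the side reproduces the patch exactly, while the square's corner pixel gets a positive value.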

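Let $I_x$ and $I_y$ be the partial derivatives of the image $I$, such that

$$I(u+x, v+y) \approx I(u,v) + I_x(u,v)\,x + I_y(u,v)\,y.$$

This produces the approximation of the weighted SSD $S(x,y) = \sum_u \sum_v w(u,v)\,\big(I(u+x,v+y) - I(u,v)\big)^2$ as

$$S(x,y) \approx \sum_u \sum_v w(u,v)\,\big(I_x(u,v)\,x + I_y(u,v)\,y\big)^2,$$

which can be written in matrix form:

$$S(x,y) \approx \begin{pmatrix} x & y \end{pmatrix} A \begin{pmatrix} x \\ y \end{pmatrix},$$

where $A$ is the structure tensor,

$$A = \sum_u \sum_v w(u,v) \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix} = \begin{bmatrix} \langle I_x^2 \rangle & \langle I_x I_y \rangle \\ \langle I_x I_y \rangle & \langle I_y^2 \rangle \end{bmatrix},$$

and the angle brackets denote the weighted summation over the window. In words, we find the covariance of the partial derivative of the image intensity $I$ with respect to the $x$ and $y$ axes.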
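The structure tensor at a single pixel can be sketched in NumPy, assuming uniform weights w(u, v) = 1 over a square window and central-difference derivatives from `np.gradient`. The helper name `structure_tensor` and its `radius` parameter are hypothetical.

```python
import numpy as np

def structure_tensor(image, y, x, radius=2):
    """2x2 matrix of summed gradient products over a (2*radius+1)^2 window
    centred on (y, x), with uniform weights (an assumption)."""
    img = np.asarray(image, dtype=float)
    # np.gradient returns the derivative along axis 0 (y) first, then axis 1 (x).
    Iy, Ix = np.gradient(img)
    win = (slice(y - radius, y + radius + 1), slice(x - radius, x + radius + 1))
    ix, iy = Ix[win], Iy[win]
    return np.array([[np.sum(ix * ix), np.sum(ix * iy)],
                     [np.sum(ix * iy), np.sum(iy * iy)]])
```

The eigenvalues of the result separate the three cases: both near zero in a flat region, one large and one near zero on a straight edge, and both large at a corner.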

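The corner strength is then measured as

$$M_c = \lambda_1 \lambda_2 - \kappa\,(\lambda_1 + \lambda_2)^2 = \det(A) - \kappa \operatorname{trace}^2(A),$$

where $\lambda_1, \lambda_2$ are the eigenvalues of $A$ and $\kappa$ is a tunable sensitivity parameter. Therefore, the algorithm[6] does not have to actually compute the eigenvalue decomposition of the matrix $A$; it is sufficient to evaluate its determinant and trace.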
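Because only the determinant and trace of the structure tensor are needed, a whole response map can be computed with elementwise array operations. This sketch computes det(A) − κ·trace(A)² per pixel, assuming a box window for w, central-difference derivatives, and κ = 0.04 (a commonly quoted value, used here as an assumption). `box_sum` and `harris_response` are illustrative names.

```python
import numpy as np

def box_sum(a, radius):
    """Sum of `a` over a (2*radius+1)^2 window at each pixel, zero-padded."""
    padded = np.pad(a, radius)
    out = np.zeros_like(a)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def harris_response(image, kappa=0.04, radius=2):
    """det(A) - kappa * trace(A)^2 at every pixel; no eigen-decomposition needed."""
    img = np.asarray(image, dtype=float)
    Iy, Ix = np.gradient(img)
    Sxx = box_sum(Ix * Ix, radius)  # entries of the structure tensor A
    Syy = box_sum(Iy * Iy, radius)
    Sxy = box_sum(Ix * Iy, radius)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - kappa * trace ** 2
```

Positive values indicate corners, negative values indicate edges (one dominant eigenvalue), and values near zero indicate flat regions.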

The algorithm relies on the fact that for an ideal corner, tangent lines cross at a single point.
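One way to make this concrete is a least-squares formulation (the one usually associated with Förstner's detector): each pixel x' in a window defines a line through x' whose normal is the gradient ∇I(x'), and the corner estimate x0 minimises Σ (∇I(x')ᵀ(x' − x0))². Setting the derivative with respect to x0 to zero gives the 2×2 system (Σ ∇I ∇Iᵀ) x0 = Σ ∇I ∇Iᵀ x'. The sketch assumes a square window and `np.gradient` derivatives; `forstner_corner` is a hypothetical name.

```python
import numpy as np

def forstner_corner(image, y0, x0, radius=4):
    """Point (x, y) closest, in the least-squares sense, to all tangent lines
    through the pixels of a window centred on (y0, x0)."""
    img = np.asarray(image, dtype=float)
    gy, gx = np.gradient(img)
    A = np.zeros((2, 2))
    b = np.zeros(2)
    for y in range(y0 - radius, y0 + radius + 1):
        for x in range(x0 - radius, x0 + radius + 1):
            g = np.array([gx[y, x], gy[y, x]])
            G = np.outer(g, g)  # weight of the line through (x, y) with normal g
            A += G
            b += G @ np.array([x, y], dtype=float)
    # Normal equations of the least-squares problem.
    return np.linalg.solve(A, b)
```

For a bright square whose top-left pixel is (5, 5), the intensity edges lie between pixels 4 and 5, and the estimate lands close to (4.5, 4.5), i.e. at subpixel precision.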

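Given a scale-space representation $L(x, y; t)$ of the image at local scale $t$, one computes the multi-scale second-moment matrix

$$\mu(x, y; t, s) = \int\!\!\int \begin{bmatrix} L_x^2 & L_x L_y \\ L_x L_y & L_y^2 \end{bmatrix}(\xi, \eta; t)\; g(x-\xi, y-\eta; s)\, d\xi\, d\eta,$$

where $g(\cdot\,; s)$ is a Gaussian window at integration scale $s$, and defines the multi-scale Harris corner measure as

$$M(x, y; t, s) = \det \mu(x, y; t, s) - \kappa \operatorname{trace}^2 \mu(x, y; t, s).$$

Concerning the choice of the local scale parameter $t$ and the integration scale parameter $s$, these are usually coupled by a relative integration scale parameter $\gamma$ such that $s = \gamma^2 t$, where $\gamma$ is usually chosen in the interval $[1, 2]$.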
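The multi-scale measure can be sketched with separable Gaussian smoothing in plain NumPy. Assumptions: a sampled Gaussian kernel truncated at four standard deviations, zero padding at the border via `np.convolve(..., mode="same")` (which requires the image to be larger than the kernel), central-difference derivatives, the coupling s = γ²t with γ = √2, κ = 0.04, and no scale normalization of μ. All function names are illustrative.

```python
import numpy as np

def gaussian_kernel(t):
    """Sampled 1-D Gaussian with variance t, truncated at 4 standard deviations."""
    radius = max(1, int(np.ceil(4 * np.sqrt(t))))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x ** 2 / (2 * t))
    return g / g.sum()

def smooth(image, t):
    """Separable Gaussian smoothing; the image must be larger than the kernel."""
    g = gaussian_kernel(t)
    rows = np.apply_along_axis(lambda r: np.convolve(r, g, mode="same"), 1, image)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="same"), 0, rows)

def multiscale_harris(image, t, gamma=np.sqrt(2.0), kappa=0.04):
    """det(mu) - kappa * trace(mu)^2, local scale t, integration scale gamma^2 * t."""
    L = smooth(np.asarray(image, dtype=float), t)
    Ly, Lx = np.gradient(L)      # first-order derivatives at local scale t
    s = gamma ** 2 * t           # coupled integration scale
    mxx = smooth(Lx * Lx, s)     # entries of the second-moment matrix mu
    myy = smooth(Ly * Ly, s)
    mxy = smooth(Lx * Ly, s)
    return mxx * myy - mxy ** 2 - kappa * (mxx + myy) ** 2
```

Increasing t makes the measure respond to coarser corner structures, while γ controls how large a neighbourhood the gradient products are pooled over relative to that scale.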

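[16][17] One approach is to detect corners as points where both the curvature of the level curves and the gradient magnitude are high. A differential way to detect such points is to compute the rescaled level curve curvature (the product of the level curve curvature and the gradient magnitude raised to the power of three),

$$\tilde{\kappa}(x, y; t) = L_x^2 L_{yy} + L_y^2 L_{xx} - 2 L_x L_y L_{xy},$$

and to detect positive maxima and negative minima of this differential expression at some scale $t$ in the scale-space representation $L$ of the image.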
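The rescaled level curve curvature κ̃ = L_x² L_yy + L_y² L_xx − 2 L_x L_y L_xy only needs first- and second-order derivatives, so it can be sketched with repeated `np.gradient` calls. The input is assumed to be an already smoothed image L at some scale t; `rescaled_curvature` is a hypothetical name.

```python
import numpy as np

def rescaled_curvature(L):
    """Lx^2 * Lyy + Ly^2 * Lxx - 2 * Lx * Ly * Lxy via finite differences."""
    L = np.asarray(L, dtype=float)
    Ly, Lx = np.gradient(L)      # axis 0 is y, axis 1 is x
    Lxy, Lxx = np.gradient(Lx)   # d/dy and d/dx of Lx
    Lyy, _ = np.gradient(Ly)     # d/dy of Ly
    return Lx ** 2 * Lyy + Ly ** 2 * Lxx - 2 * Lx * Ly * Lxy
```

For the saddle L = xy the expression equals −2xy, which these finite differences reproduce exactly because the function is bilinear; for a linear ramp (straight level curves) it is zero everywhere.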

[11] These scale-invariant interest points are all extracted by detecting scale-space extrema of scale-normalized differential expressions, i.e., points in scale-space where the corresponding scale-normalized differential expressions assume local extrema with respect to both space and scale.[11]
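The search for scale-space extrema can be illustrated with the scale-normalized Laplacian t∇²L, one of the differential expressions used for this purpose. The sketch builds a stack of responses over a few scales and keeps points whose absolute response is a strict maximum among their 26 neighbours in space and scale. Assumptions: γ = 1 normalization, sampled Gaussian kernels, finite differences, and extrema of |t∇²L| rather than separate maxima and minima; all names are illustrative.

```python
import numpy as np

def gaussian_kernel(t):
    """Sampled 1-D Gaussian with variance t, truncated at 4 standard deviations."""
    radius = max(1, int(np.ceil(4 * np.sqrt(t))))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x ** 2 / (2 * t))
    return g / g.sum()

def smooth(image, t):
    """Separable Gaussian smoothing; the image must be larger than the kernel."""
    g = gaussian_kernel(t)
    rows = np.apply_along_axis(lambda r: np.convolve(r, g, mode="same"), 1, image)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="same"), 0, rows)

def normalized_laplacian_stack(image, scales):
    """One slice t * (Lxx + Lyy) per scale t."""
    img = np.asarray(image, dtype=float)
    stack = []
    for t in scales:
        L = smooth(img, t)
        Ly, Lx = np.gradient(L)
        Lyy = np.gradient(Ly, axis=0)
        Lxx = np.gradient(Lx, axis=1)
        stack.append(t * (Lxx + Lyy))
    return np.array(stack)

def scale_space_extrema(stack):
    """(scale index, y, x) of strict local maxima of |stack| in 3x3x3 neighbourhoods."""
    mag = np.abs(stack)
    K, H, W = mag.shape
    points = []
    for k in range(1, K - 1):
        for y in range(1, H - 1):
            for x in range(1, W - 1):
                cube = mag[k - 1:k + 2, y - 1:y + 2, x - 1:x + 2]
                v = mag[k, y, x]
                if v > 0 and v == cube.max() and np.count_nonzero(cube == v) == 1:
                    points.append((k, y, x))
    return points
```

For a Gaussian blob of variance t0, the continuous response at its centre is proportional to t/(t0 + t)², which peaks at t = t0; with t0 = 8 and scales 2, 4, 8, 16, 32, the strongest extremum should sit at the blob centre at scale 8.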

To improve the corner detection ability of the difference-of-Gaussians detector, the feature detector used in the SIFT[20] system uses an additional post-processing stage, in which the eigenvalues of the Hessian of the image at the detection scale are examined in a similar way as in the Harris operator.

If the ratio of the eigenvalues is too high, then the local image is regarded as too edge-like, so the feature is rejected.
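The eigenvalue-ratio test can be written directly in terms of the Hessian entries, as in Lowe's SIFT paper: if the eigenvalues satisfy λ1 = r·λ2, then trace²/det = (r + 1)²/r, so comparing trace²/det with that threshold bounds the ratio without computing eigenvalues. The value r = 10 is the one reported by Lowe; `is_edge_like` is a hypothetical helper name.

```python
def is_edge_like(Dxx, Dyy, Dxy, r=10.0):
    """True if the 2x2 Hessian [[Dxx, Dxy], [Dxy, Dyy]] is too edge-like to keep."""
    trace = Dxx + Dyy
    det = Dxx * Dyy - Dxy ** 2
    if det <= 0:
        # Curvatures of opposite sign (or a zero eigenvalue): discard the point.
        return True
    return trace ** 2 / det >= (r + 1) ** 2 / r
```

A blob-like extremum with equal curvatures passes, while one whose curvature is 20 times larger across the structure than along it is rejected.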

[21] The scale-normalized determinant of the Hessian operator (Lindeberg 1994, 1998)[11][12], on the other hand, is highly selective to well-localized image features and responds only when there are significant grey-level variations in two image directions.[11][14] In this and other respects, it is a better interest point detector than the Laplacian of the Gaussian.
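The difference in selectivity can be checked numerically at a single scale: for an image that varies along x only, L_yy and L_xy vanish, so det H = L_xx L_yy − L_xy² is identically zero while the Laplacian L_xx + L_yy is not. This sketch omits the t² scale normalization and uses finite differences; `hessian_measures` is an illustrative name.

```python
import numpy as np

def hessian_measures(L):
    """Return (determinant of the Hessian, Laplacian) of L at every pixel."""
    L = np.asarray(L, dtype=float)
    Ly, Lx = np.gradient(L)
    Lxy, Lxx = np.gradient(Lx)
    Lyy, _ = np.gradient(Ly)
    det_hessian = Lxx * Lyy - Lxy ** 2
    laplacian = Lxx + Lyy
    return det_hessian, laplacian
```

On a smooth straight edge the determinant stays at zero while the Laplacian responds along the whole edge; on a blob, both respond at the centre.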

[21][22] Experimentally, this implies that determinant-of-the-Hessian interest points have better repeatability properties under local image deformations than Laplacian interest points, which in turn leads to better image-based matching performance in terms of higher efficiency scores and lower 1−precision scores.

Exploiting the similarities between the Hessian matrix and the second-moment matrix, as manifested, e.g., in their similar transformation properties under affine image deformations,[13][21] Lindeberg (2013, 2015)[21][22] proposed to define four feature strength measures from the Hessian matrix in ways analogous to how the Harris and Shi-and-Tomasi operators are defined from the structure tensor (second-moment matrix).

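The unsigned Hessian feature strength measure I responds to local extrema by positive values and is not sensitive to saddle points, whereas the signed Hessian feature strength measure I additionally responds to saddle points by negative values.

The unsigned Hessian feature strength measure II is insensitive to the local polarity of the signal, whereas the signed Hessian feature strength measure II responds to the local polarity of the signal by the sign of its output.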
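A sketch of the two unsigned measures, assuming (as we read Lindeberg's definitions) that measure I is t²(det H − k·trace² H) clipped at zero and measure II is t·min(|λ1|, |λ2|), with λ1, λ2 the Hessian eigenvalues. The value k = 0.04 is an assumption, the signed variants are not shown, and the exact forms should be checked against the cited papers; the function name is illustrative.

```python
import numpy as np

def unsigned_hessian_strengths(Lxx, Lyy, Lxy, t=1.0, k=0.04):
    """Return (measure I, measure II) for one pixel's Hessian at scale t."""
    H = np.array([[Lxx, Lxy], [Lxy, Lyy]], dtype=float)
    lam = np.linalg.eigvalsh(H)
    d1 = t ** 2 * (np.linalg.det(H) - k * np.trace(H) ** 2)
    measure_1 = max(d1, 0.0)            # clipped: saddles (det < 0) give zero
    measure_2 = t * np.min(np.abs(lam))  # magnitude only: polarity ignored
    return measure_1, measure_2
```

At a bright blob (both eigenvalues −1) measure I is positive, at a saddle (eigenvalues +1 and −1) it is zero, and measure II gives the same value for a bright blob, a dark blob and the saddle.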

Furthermore, it was shown that all these differential scale-space interest point detectors defined from the Hessian matrix allow for the detection of a larger number of interest points and better matching performance compared to the Harris and Shi-and-Tomasi operators defined from the structure tensor (second-moment matrix).

[21] The interest points obtained from the multi-scale Harris operator with automatic scale selection are invariant to translations, rotations and uniform rescalings in the spatial domain.

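The images that constitute the input to a computer vision system are, however, also subject to perspective distortions. To obtain an interest point operator that is more robust to such transformations, a natural approach is to devise a feature detector that is invariant to affine transformations.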

In practice, affine invariant interest points can be obtained by applying affine shape adaptation, where the shape of the smoothing kernel is iteratively warped to match the local image structure around the interest point, or, equivalently, a local image patch is iteratively warped while the shape of the smoothing kernel remains rotationally symmetric (Lindeberg 1993, 2008; Lindeberg and Gårding 1997; Mikolajczyk and Schmid 2004).

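Since the corner response is a minimum over several directions, any partial computation gives an upper bound on it; the horizontal and vertical directions are therefore checked first to see if it is worth proceeding with the complete computation of the response.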
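A simplified sketch of this early exit for a self-similarity response of the form r(c) = min over diameters of (I(p) − I(c))² + (I(p') − I(c))², where p and p' are opposite points on a ring around the centre pixel c. Assumptions: a radius-1 ring with only four diameters and no interpolation (the detector being described uses a larger discretized circle), a float image, and a hypothetical `threshold` below which a pixel is rejected.

```python
import numpy as np

# Opposite-point pairs (dy, dx): horizontal, vertical, then the two diagonals.
DIAMETERS = [((0, -1), (0, 1)), ((-1, 0), (1, 0)),
             ((-1, -1), (1, 1)), ((-1, 1), (1, -1))]

def trajkovic_response(img, y, x, threshold):
    """Minimum over diameters of the squared differences to the centre pixel."""
    c = img[y, x]

    def term(pair):
        (dy1, dx1), (dy2, dx2) = pair
        return (img[y + dy1, x + dx1] - c) ** 2 + (img[y + dy2, x + dx2] - c) ** 2

    # Any partial minimum is an upper bound on the full minimum,
    # so a low horizontal/vertical value already rules the pixel out.
    bound = min(term(DIAMETERS[0]), term(DIAMETERS[1]))
    if bound < threshold:
        return 0.0
    return min(bound, term(DIAMETERS[2]), term(DIAMETERS[3]))
```

On most pixels of a natural image (flat regions and axis-aligned edges) the cheap test already fails, so the diagonal terms are evaluated only for a small fraction of candidates.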

Building short decision trees for the accelerated segment test (AST) results in the most computationally efficient feature detectors available.

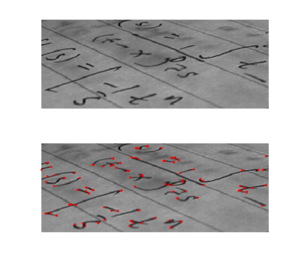

The first corner detection algorithm based on the AST is FAST (features from accelerated segment test).
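The segment test behind FAST can be sketched directly, without the learned decision tree that makes FAST fast: a pixel p is flagged if at least n contiguous pixels on the 16-pixel circle of radius 3 are all brighter than I_p + t or all darker than I_p − t. The defaults n = 9 and t = 20 are assumptions (n = 9 and n = 12 are both common choices), and the function name is illustrative.

```python
import numpy as np

# Offsets (dy, dx) of the 16-pixel Bresenham circle of radius 3, clockwise from the top.
CIRCLE = [(-3, 0), (-3, 1), (-2, 2), (-1, 3), (0, 3), (1, 3), (2, 2), (3, 1),
          (3, 0), (3, -1), (2, -2), (1, -3), (0, -3), (-1, -3), (-2, -2), (-3, -1)]

def segment_test(img, y, x, t=20, n=9):
    """True if n contiguous circle pixels are all brighter than I_p + t
    or all darker than I_p - t."""
    p = int(img[y, x])  # int() avoids uint8 overflow in the differences
    ring = [int(img[y + dy, x + dx]) for dy, dx in CIRCLE]
    for sign in (1, -1):  # +1 tests "brighter", -1 tests "darker"
        flags = [sign * (v - p) > t for v in ring]
        run = 0
        for f in flags + flags:  # doubled list handles arcs that wrap around
            run = run + 1 if f else 0
            if run >= n:
                return True
    return False
```

At the vertex of a bright 90° region, 11 contiguous circle pixels are darker than the centre, so the test fires with n = 9; on a straight edge only 7 are, so it does not.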

Trujillo and Olague[30] introduced a method by which genetic programming is used to automatically synthesize image operators that can detect interest points.

The terminal and function sets contain primitive operations that are common in many previously proposed man-made designs.

The fitness function measures the stability of each operator through its repeatability rate and promotes a uniform dispersion of detected points across the image plane.

The performance of the evolved operators has been confirmed experimentally using training and testing sequences of progressively transformed images.

For an idealized spatio-temporal blob model, the selected spatial and temporal scale levels will perfectly match the spatial extent and the temporal duration of the blob, with scale selection performed by detecting spatio-temporal scale-space extrema of the differential expression.