Differential of a function

The differentials dx and dy are sometimes considered to be very small (infinitesimal), and this interpretation is made rigorous in non-standard analysis.

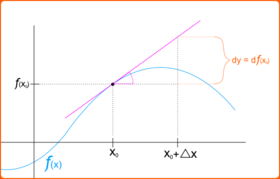

The differential was first introduced via an intuitive or heuristic definition by Isaac Newton and furthered by Gottfried Leibniz, who thought of the differential dy as an infinitely small (or infinitesimal) change in the value y of the function, corresponding to an infinitely small change dx in the function's argument x.

For that reason, the instantaneous rate of change of y with respect to x, which is the value of the derivative of the function, is denoted by the fraction

$$\frac{dy}{dx}$$

in what is called the Leibniz notation for derivatives.

The use of infinitesimals in this form was widely criticized, for instance in the famous pamphlet The Analyst by Bishop Berkeley.

Augustin-Louis Cauchy (1823) defined the differential without appeal to the atomism of Leibniz's infinitesimals.

In Cauchy's approach, the differential is defined by

$$dy = f'(x)\,dx,$$

in which dy and dx are simply new variables taking finite real values,[3] not fixed infinitesimals as they had been for Leibniz.
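The following small worked example uses our own choice of function and values. It shows that dx is an ordinary finite number in this definition. It evaluates dy = f'(x) dx for y = x² and compares dy with the actual increment Δy = f(x + dx) − f(x):

```latex
\begin{aligned}
y &= x^2, \qquad dy = 2x\,dx,\\
x = 3,\ dx = 0.5 &\;\Rightarrow\; dy = 2(3)(0.5) = 3,\\
\Delta y &= (3.5)^2 - 3^2 = 12.25 - 9 = 3.25 = dy + (dx)^2.
\end{aligned}
```

The differential dy is a linear function of dx. The discrepancy (dx)² is of higher order and does not vanish for finite dx.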

Cauchy's overall conceptual approach to differentials remains the standard one in modern analytical treatments,[5] although the final word on rigor, a fully modern notion of the limit, was ultimately due to Karl Weierstrass.[6]

In physical treatments, such as those applied to the theory of thermodynamics, the infinitesimal view still prevails.

Courant & John (1999, p. 184) reconcile the physical use of infinitesimal differentials with their mathematical impossibility as follows:

The differentials represent finite non-zero values that are smaller than the degree of accuracy required for the particular purpose for which they are intended.

In real analysis, it is more desirable to deal directly with the differential as the principal part of the increment of a function.

This approach allows the differential (as a linear map) to be developed for a variety of more sophisticated spaces, ultimately giving rise to such notions as the Fréchet or Gateaux derivative.

This notion of differential is broadly applicable when a linear approximation to a function is sought, in which the value of the increment Δx is small enough.
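A minimal numerical sketch can show the differential dy = f'(x)Δx as the principal part of the increment Δy = f(x + Δx) − f(x). The function f(x) = x³ and the sample point are illustrative choices, not taken from the text. The quantity (Δy − dy)/Δx should shrink to 0 as Δx → 0:

```python
# Illustrative function (our choice): f(x) = x**3, with derivative f'(x) = 3*x**2.
def f(x: float) -> float:
    return x ** 3


def f_prime(x: float) -> float:
    return 3 * x ** 2


def increment_and_differential(x: float, dx: float) -> tuple[float, float]:
    """Return (Δy, dy): the true increment and its linear (principal) part."""
    delta_y = f(x + dx) - f(x)
    dy = f_prime(x) * dx
    return delta_y, dy


x = 2.0
for dx in (0.1, 0.01, 0.001):
    delta_y, dy = increment_and_differential(x, dx)
    # For this f, (Δy - dy)/Δx equals 3*x*dx + dx**2 (up to rounding),
    # so it tends to 0 together with dx.
    print(f"dx={dx:<6} Δy={delta_y:.6f} dy={dy:.6f} (Δy-dy)/dx={(delta_y - dy) / dx:.6f}")
```

For f(x) = x³ the remainder can be expanded exactly. It is Δy − dy = 3xΔx² + Δx³, which is why the last column decreases roughly in proportion to Δx.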

The absolute values of the component errors are used, because after simple computation, the derivative may have a negative sign.

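This additional factor tends to make the error smaller, as ln b is not as large as a bare b.

Higher-order differentials of a function y = f(x) of a single variable x can be defined via:[8]

$$d^2y = d(dy) = d\big(f'(x)\,dx\big) = \big(df'(x)\big)\,dx = f''(x)\,(dx)^2,$$

and, in general,

$$d^n y = f^{(n)}(x)\,(dx)^n.$$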
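The following worked instance of the single-variable rule d^n y = f^{(n)}(x)(dx)^n takes y = x³, chosen only for illustration:

```latex
\begin{aligned}
y &= x^3, & dy &= 3x^2\,dx,\\
d^2y &= 6x\,(dx)^2, & d^3y &= 6\,(dx)^3,\\
d^n y &= 0 \quad (n \ge 4).
\end{aligned}
```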

Similar considerations apply to defining higher order differentials of functions of several variables.

Because of this notational awkwardness, the use of higher order differentials was roundly criticized by Hadamard (1935), who concluded:

Enfin, que signifie ou que représente l'égalité [...] ? A mon avis, rien du tout.

That is: Finally, what is meant, or represented, by the equality [...]? In my opinion, nothing at all.

In spite of this skepticism, higher order differentials did emerge as an important tool in analysis.[10]

In these contexts, the n-th order differential of the function f applied to an increment Δx is defined by

$$d^n f(x, \Delta x) = \left.\frac{d^n}{dt^n} f(x + t\,\Delta x)\right|_{t=0}.$$

This definition makes sense as well if f is a function of several variables (for simplicity taken here as a vector argument).

Then the n-th differential defined in this way is a homogeneous function of degree n in the vector increment Δx.
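The following sketch approximates the n-th differential for n = 2 and a function of two variables. It restricts f to the line t ↦ x + tΔx and takes a central second difference in t. The function f(x1, x2) = x1²·x2, the point, the increment, and the step size h are all hypothetical choices. Doubling Δx should multiply d²f by 2² = 4, reflecting homogeneity of degree 2:

```python
# Hypothetical example function (not from the source): f(x1, x2) = x1**2 * x2.
def f(p):
    x1, x2 = p
    return x1 ** 2 * x2


def second_differential(f, x, dx, h=1e-3):
    """Estimate d^2/dt^2 f(x + t*dx) at t = 0 with a central second difference."""
    def g(t):
        # Restriction of f to the line through x in the direction dx
        return f(tuple(xi + t * di for xi, di in zip(x, dx)))
    return (g(h) - 2 * g(0.0) + g(-h)) / h ** 2


x = (1.0, 2.0)
dx = (0.1, 0.3)
d2 = second_differential(f, x, dx)
# Doubling the increment: a degree-2 homogeneous function should scale by 2**2 = 4.
d2_scaled = second_differential(f, x, tuple(2 * d for d in dx))
print(d2, d2_scaled / d2)
```

As a cross-check, the Hessian at (1, 2) gives f₁₁Δx₁² + 2f₁₂Δx₁Δx₂ + f₂₂Δx₂² = 4(0.01) + 2·2·(0.03) + 0 = 0.16. Because the restriction g is a cubic in t here, the central difference reproduces this value up to rounding.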

The higher order Gateaux derivative generalizes these considerations to infinite dimensional spaces.

In addition, various forms of the chain rule hold, in increasing level of generality:[12]

A consistent notion of differential can be developed for a function f : ℝⁿ → ℝᵐ between two Euclidean spaces.

This is precisely the Fréchet derivative, and the same construction can be made to work for a function between any Banach spaces.
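In the Euclidean setting, the differential df(x) is the linear map Δx ↦ AΔx given by the Jacobian matrix A. It is characterized by ‖Δy − AΔx‖/‖Δx‖ → 0 as Δx → 0. The sketch below uses a hypothetical map f : ℝ² → ℝ², writes down its Jacobian by hand, and checks that this ratio shrinks:

```python
import math


# Hypothetical map (our choice): f(x1, x2) = (x1*x2, x1 + x2**2)
def f(x):
    x1, x2 = x
    return [x1 * x2, x1 + x2 ** 2]


def jacobian(x):
    # 2x2 matrix A of partial derivatives ∂f_i/∂x_j at the point x
    x1, x2 = x
    return [[x2, x1],
            [1.0, 2 * x2]]


def differential(x, dx):
    # df(x) applied to the increment dx: the matrix-vector product A·dx
    A = jacobian(x)
    return [sum(A[i][j] * dx[j] for j in range(2)) for i in range(2)]


def remainder_ratio(x, dx):
    # ||Δy - A·Δx|| / ||Δx||, which tends to 0 when f is differentiable at x
    fx = f(x)
    fx_dx = f([xi + di for xi, di in zip(x, dx)])
    delta_y = [b - a for a, b in zip(fx, fx_dx)]
    linear = differential(x, dx)
    err = math.hypot(*(d - l for d, l in zip(delta_y, linear)))
    return err / math.hypot(*dx)


x = [1.0, 2.0]
for s in (0.1, 0.01, 0.001):
    dx = [0.6 * s, 0.8 * s]  # increments of length s along a fixed unit direction
    print(s, remainder_ratio(x, dx))
```

For this particular f the remainder is exactly (Δx₁Δx₂, Δx₂²). Along the chosen direction, the printed ratio is therefore 0.8·s, which goes to 0 linearly with the step length.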

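Another point of view is to define the differential directly as a kind of directional derivative along an increment h:

$$df(x, h) = \lim_{t \to 0} \frac{f(x + th) - f(x)}{t} = \left.\frac{d}{dt} f(x + th)\right|_{t=0},$$

which is the approach already taken for defining higher order differentials (and is most nearly the definition set forth by Cauchy). If t represents time and x position, then h represents a velocity instead of a displacement.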

This yields yet another refinement of the notion of differential: that it should be a linear function of a kinematic velocity.
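The following numerical sketch illustrates the directional-derivative form df(x, h) = lim_{t→0} (f(x + th) − f(x))/t. It uses the hypothetical function f(x1, x2) = x1² + 3x1x2. It also swaps in the symmetric quotient (f(x + th) − f(x − th))/(2t), which has the same limit for a function differentiable at x but smaller truncation error. The checks show that df(x, h) is linear in h. This matches reading h as a velocity, with df(x, h) as the rate of change of f along the motion t ↦ x + th:

```python
# Hypothetical example function (not from the source): f(x1, x2) = x1**2 + 3*x1*x2
def f(x):
    x1, x2 = x
    return x1 ** 2 + 3 * x1 * x2


def df(x, h, t=1e-6):
    """Symmetric difference-quotient estimate of the directional derivative df(x, h)."""
    plus = f([xi + t * hi for xi, hi in zip(x, h)])
    minus = f([xi - t * hi for xi, hi in zip(x, h)])
    return (plus - minus) / (2 * t)


x = [1.0, 2.0]
print(df(x, [1.0, 0.0]))   # rate of change along x1
print(df(x, [0.0, 1.0]))   # rate of change along x2
print(df(x, [1.0, 1.0]))   # should equal the sum of the two above (additivity)
print(df(x, [2.0, 0.0]))   # should equal twice the first (homogeneity)
```

At (1, 2) the gradient is (2x₁ + 3x₂, 3x₁) = (8, 3), so the four printed values are approximately 8, 3, 11 and 16. For a quadratic f, the symmetric quotient is exact up to rounding.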

Although the notion of having an infinitesimal increment dx is not well-defined in modern mathematical analysis, a variety of techniques exist for defining the infinitesimal differential so that the differential of a function can be handled in a manner that does not clash with the Leibniz notation.
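The text does not name these techniques. One well-known algebraic device, offered here only as an illustration of our own choosing, adjoins a nilpotent element ε with ε² = 0 (the so-called dual numbers). For a polynomial f, expanding f(x + dx·ε) and discarding ε² gives f(x) + f'(x)·dx·ε. The coefficient of ε is then exactly dy = f'(x) dx. A minimal sketch supporting only addition and multiplication:

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """Number of the form a + b*eps, where eps**2 = 0 (a nilpotent 'infinitesimal')."""
    a: float  # standard part
    b: float  # coefficient of eps

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other, 0.0)
        return Dual(self.a + other.a, self.b + other.b)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other, 0.0)
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps, because eps**2 = 0
        return Dual(self.a * other.a, self.a * other.b + self.b * other.a)

    __rmul__ = __mul__


def f(x):
    # Example polynomial (our choice); its derivative is f'(x) = 6x + 2
    return 3 * x * x + 2 * x + 1


y = f(Dual(2.0, 0.5))  # evaluate at x = 2 with dx = 0.5
print(y.a, y.b)        # f(2) and dy = f'(2) * 0.5
```

Here f(2) = 17 and f'(2) = 14, so the ε-coefficient is dy = 14 · 0.5 = 7. This is a sketch for polynomials only; functions such as sin or exp would need their own rules.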

Suppose that the variable x represents the outcome of an experiment and y = f(x) is the result of a numerical computation applied to x.

If x is known to within Δx of its true value, then Taylor's theorem gives the following estimate on the error Δy in the computation of y:

$$\Delta y = f'(x)\,\Delta x + \frac{(\Delta x)^2}{2}\,f''(\xi),$$

where ξ = x + θΔx for some 0 < θ < 1.
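The following minimal sketch applies this estimate to a hypothetical measurement. The edge of a cube is x = 2.00, known within Δx = 0.01, and the computed quantity is the volume y = x³. The linear term |f'(x)|Δx gives the first-order error. The Taylor remainder (Δx)²/2 · f''(ξ) is bounded using the largest |f''| on [x − Δx, x + Δx]:

```python
# Hypothetical measurement (not from the source): cube edge x = 2.00 ± 0.01,
# computed quantity y = f(x) = x**3 (the volume).
def f(x):
    return x ** 3


def f1(x):
    return 3 * x ** 2  # f'(x)


def f2(x):
    return 6 * x  # f''(x)


x, dx = 2.0, 0.01
linear_error = abs(f1(x)) * dx  # first-order estimate |dy| = |f'(x)| Δx
# f'' is linear here, so its largest absolute value on [x - dx, x + dx]
# is attained at an endpoint; this bounds the neglected Taylor term.
second_order_bound = max(abs(f2(x - dx)), abs(f2(x + dx))) * dx ** 2 / 2

for step in (dx, -dx):
    actual = f(x + step) - f(x)
    print(f"Δx={step:+.2f}  Δy={actual:+.6f}  f'(x)Δx={f1(x) * step:+.6f}")
print("linear estimate:", linear_error, "second-order bound:", second_order_bound)
```

The linear estimate is 0.12. The neglected second-order term is at most about 0.000603, and the actual deviations from f'(x)Δx are 0.000601 and 0.000599. For a small Δx, the differential alone is therefore an adequate error estimate.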