Global catastrophic risk

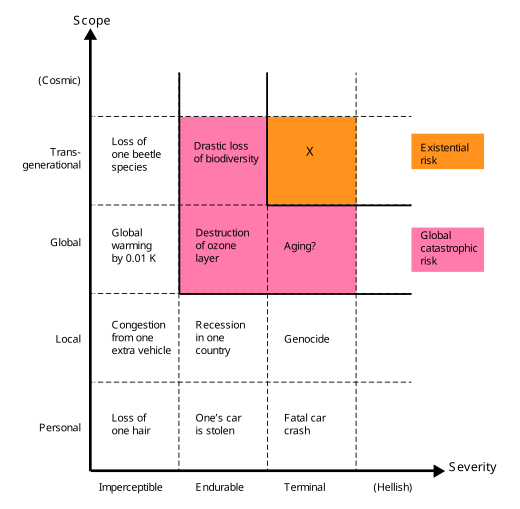

Similarly, in Catastrophe: Risk and Response, Richard Posner singles out and groups together events that bring about "utter overthrow or ruin" on a global, rather than a "local or regional", scale.

Posner highlights such events as worthy of special attention on cost–benefit grounds because they could directly or indirectly jeopardize the survival of the human race as a whole.

[18] A disaster severe enough to cause the permanent, irreversible collapse of human civilisation would constitute an existential catastrophe, even if it fell short of extinction.

[18] Psychologist Steven Pinker has called existential risk a "useless category" that can distract from threats he considers real and solvable, such as climate change and nuclear war.

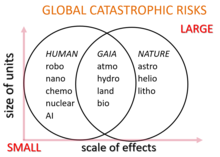

Examples of non-anthropogenic risks are an asteroid or comet impact event, a supervolcanic eruption, a natural pandemic, a lethal gamma-ray burst, a geomagnetic storm from a coronal mass ejection destroying electronic equipment, natural long-term climate change, hostile extraterrestrial life, or the Sun transforming into a red giant star and engulfing the Earth billions of years in the future.

Technological risks include the creation of artificial intelligence misaligned with human goals, biotechnology, and nanotechnology.

Insufficient or malign global governance creates risks in the social and political domain, such as global war and nuclear holocaust,[24] biological warfare and bioterrorism using genetically modified organisms, cyberwarfare and cyberterrorism destroying critical infrastructure like the electrical grid, or radiological warfare using weapons such as large cobalt bombs.

Other global catastrophic risks include climate change, environmental degradation, extinction of species, famine as a result of non-equitable resource distribution, human overpopulation or underpopulation, crop failures, and non-sustainable agriculture.

Another challenge is the general difficulty of accurately predicting the future over long timescales, especially for anthropogenic risks, which depend on complex human political, economic and social systems.

[18] Existential risks also pose unique challenges to prediction, even more than other long-term events, because of observation selection effects.
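The effect of observation selection on risk estimates can be illustrated with a small simulation. The sketch below is a toy model, not a method taken from the cited sources: the function name `estimate_risk`, the per-period risk of 0.05, the 20 periods and the 20,000 simulated worlds are all hypothetical values chosen for illustration. It assumes that each world faces the same independent chance of an existential catastrophe in every period, and that observers exist only in worlds where no such catastrophe has yet occurred.

```python
import random


def estimate_risk(true_risk, periods, n_worlds, seed=0):
    """Simulate hypothetical worlds and compare two risk estimates.

    Returns (all_worlds_estimate, survivor_estimate, survivors).
    All parameters are illustrative assumptions, not empirical values.
    """
    if periods <= 0 or n_worlds <= 0:
        raise ValueError("periods and n_worlds must be positive")

    rng = random.Random(seed)  # fixed seed so runs are repeatable
    total_periods = 0  # periods lived across all worlds
    total_events = 0   # catastrophes across all worlds (at most one each)
    survivors = 0      # worlds that reached the present

    for _ in range(n_worlds):
        ended = False
        for _ in range(periods):
            total_periods += 1
            if rng.random() < true_risk:
                total_events += 1
                ended = True
                break  # no observers remain after the catastrophe
        if not ended:
            survivors += 1

    # Estimate available to an outside viewer who can see every world.
    all_worlds_estimate = total_events / total_periods

    # Observers exist only in surviving worlds, and a surviving world's
    # historical record contains zero catastrophes by construction.
    survivor_events = 0
    survivor_periods = survivors * periods
    survivor_estimate = (
        survivor_events / survivor_periods if survivor_periods else None
    )
    return all_worlds_estimate, survivor_estimate, survivors


if __name__ == "__main__":
    all_est, surv_est, n_surv = estimate_risk(0.05, 20, 20000, seed=1)
    print("estimate using all worlds:     ", all_est)
    print("estimate from survivors' past: ", surv_est)
    print("surviving worlds:              ", n_surv)
```

In this toy model the estimate drawn from survivors' own histories is zero whatever the true risk is, because a record of past survival is the only record any observer can have. The estimate computed across all worlds tracks the true risk, but no real observer has access to it.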

[30] Eliezer Yudkowsky theorizes that scope neglect plays a role in public perception of existential risks:[32][33] "Substantially larger numbers, such as 500 million deaths, and especially qualitatively different scenarios such as the extinction of the entire human species, seem to trigger a different mode of thinking... People who would never dream of hurting a child hear of existential risk, and say, 'Well, maybe the human species doesn't really deserve to survive'."

All past predictions of human extinction have proven to be false.

[34] Sociobiologist E. O. Wilson argued that: "The reason for this myopic fog, evolutionary biologists contend, is that it was actually advantageous during all but the last few millennia of the two million years of existence of the genus Homo... A premium was placed on close attention to the near future and early reproduction, and little else."

Nick Bostrom states that more research has been done on Star Trek, snowboarding, or dung beetles than on existential risks.

[38] Some scholars propose the establishment on Earth of one or more self-sufficient, remote, permanently occupied settlements specifically created for the purpose of surviving a global disaster.

[39][40][41] Economist Robin Hanson argues that a refuge permanently housing as few as 100 people would significantly improve the chances of human survival during a range of global catastrophes.

[45][46] More speculatively, if society continues to function and if the biosphere remains habitable, calorie needs for the present human population might in theory be met during an extended absence of sunlight, given sufficient advance planning.

Conjectured solutions include growing mushrooms on the dead plant biomass left in the wake of the catastrophe, converting cellulose to sugar, or feeding natural gas to methane-digesting bacteria.

Astrophysicist Stephen Hawking advocated colonizing other planets within the Solar System once technology progresses sufficiently, in order to improve the chance of human survival from planet-wide events such as global thermonuclear war.

1945) is one of the oldest global risk organizations, founded after the public became alarmed by the potential of atomic warfare in the aftermath of WWII.

[58][59] Beginning after 2000, a growing number of scientists, philosophers and tech billionaires created organizations devoted to studying global risks both inside and outside of academia.

2000), which aims to reduce the risk of a catastrophe caused by artificial intelligence,[61] with donors including Peter Thiel and Jed McCaleb.

GCRI does research and policy work across various risks, including artificial intelligence, nuclear war, climate change, and asteroid impacts.

2012), based in Stockholm and founded by Laszlo Szombatfalvy, releases a yearly report on the state of global risks.

2012) is a Cambridge University-based organization which studies four major technological risks: artificial intelligence, biotechnology, global warming and warfare.

[6] All are man-made risks, as Huw Price explained to the AFP news agency: "It seems a reasonable prediction that some time in this or the next century intelligence will escape from the constraints of biology".

[78] The Lawrence Livermore National Laboratory has a division called the Global Security Principal Directorate, which researches issues such as bio-security and counter-terrorism on behalf of the government.