Impact factor

While frequently used by universities and funding bodies to decide on promotion and research proposals, the journal impact factor (JIF) has been criticised for distorting good scientific practices.

[1][2][3] The impact factor was devised by Eugene Garfield, the founder of the Institute for Scientific Information (ISI) in Philadelphia.

The commonly used "JCR Impact Factor" is a proprietary value, defined and calculated by ISI, that cannot be verified by external users.

[14][15] While originally invented as a tool to help university librarians decide which journals to purchase, the impact factor soon came to be used as a measure for judging academic success.

[18] Despite warnings against such use, the JIF has come to play a key role in assessing individual researchers, their job applications and their funding proposals.

In 2005, The Journal of Cell Biology noted that "impact factor data ... have a strong influence on the scientific community, affecting decisions on where to publish, whom to promote or hire, the success of grant applications, and even salary bonuses."

One study concluded that 40% of universities focused on academic research specifically mentioned the JIF as part of their review, promotion, and tenure processes.

[20] A 2017 study of how researchers in the life sciences behave characterised their "everyday decision-making practices as highly governed by pressures to publish in high-impact journals".

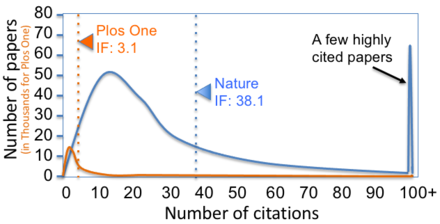

"[21] Numerous critiques have been made regarding the use of impact factors, both in terms of its statistical validity and also of its implications for how science is carried out and assessed.

[27] Emphasis on the impact factor can lead to a narrow focus on publishing in top-tier journals, potentially reducing the diversity of research topics and methodologies.

Other critics argue that the emphasis on the impact factor results from the negative influence of neoliberal politics on academia.

Some of these arguments call not only for replacing the impact factor with more sophisticated metrics but also for a discussion of the social value of research assessment and the growing precariousness of scientific careers in higher education.

[41] Items considered uncitable, and therefore not counted in the denominator of the impact factor calculation, can still contribute to the numerator if they are cited, even though such citations could easily be excluded.
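
For reference, the standard two-year impact factor of a journal for census year Y can be written as below. The notation is ours rather than Clarivate's: C_Y(k) counts the citations received in year Y by every item the journal published in year k, including items classed as uncitable, while N_cit(k) counts only the citable items published in year k. The worked figures on the second line are hypothetical.

```latex
\mathrm{IF}_{Y} = \frac{C_{Y}(Y-1) + C_{Y}(Y-2)}{N_{\mathrm{cit}}(Y-1) + N_{\mathrm{cit}}(Y-2)}

\frac{400 + 50}{100} = 4.5 \qquad \text{vs.} \qquad \frac{400}{100} = 4.0
```

In this illustration a journal publishes 100 citable articles and 40 editorials over the two-year window; the articles receive 400 citations in year Y and the editorials 50. Because the editorials are absent from the denominator but their citations remain in the numerator, the score rises from 4.0 to 4.5.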

[50][2][51] By 2010, national and international research funding institutions had already begun to point out that numerical indicators such as the JIF should not be considered a measure of quality.

[55][56][57] Empirical evidence shows that the misuse of the JIF—and journal ranking metrics in general—has a number of negative consequences for the scholarly communication system.

These include gaps between the reach of a journal and the quality of its individual papers[25] and insufficient coverage of the social sciences and humanities, as well as of research outputs from across Latin America, Africa, and South-East Asia.

[58] Additional drawbacks include the marginalization of research in vernacular languages and on locally relevant topics, and the encouragement of unethical authorship and citation practices.

Using journal prestige and the JIF to cultivate a competition regime in academia has been shown to have deleterious effects on research quality.

Plan S calls for broader adoption and implementation of initiatives to reform research assessment, alongside fundamental changes in the scholarly communication system.

[60][61][62] JIFs are still regularly used to evaluate research in many countries. This is problematic because concerns remain about the opacity of the metric and the fact that its value is often negotiated by publishers.

[63][64][19] An impact factor can change dramatically depending on which items are considered "citable" and therefore included in the denominator.
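
A minimal Python sketch shows how much the choice of "citable" item types alone can move the score. It assumes the usual two-year window and the asymmetry in which citations to every item count in the numerator while only citable items count in the denominator. The journal, item types, and counts are invented for illustration and do not reflect Clarivate's actual classification.

```python
# Hypothetical counts for one journal: items published in the two-year window
# and the citations those items received in the census year, by item type.
ITEMS = {
    "research article": {"published": 180, "citations": 720},
    "review": {"published": 20, "citations": 260},
    "editorial": {"published": 30, "citations": 45},
    "letter": {"published": 50, "citations": 25},
}


def impact_factor(items: dict[str, dict[str, int]], citable_types: set[str]) -> float:
    """Two-year impact factor under a given 'citable' classification.

    Numerator: citations to ALL items, whatever their type.
    Denominator: only items whose type is in `citable_types`.
    """
    numerator = sum(counts["citations"] for counts in items.values())
    denominator = sum(
        counts["published"]
        for item_type, counts in items.items()
        if item_type in citable_types
    )
    if denominator == 0:
        raise ValueError("no citable items in the window")
    return numerator / denominator


if __name__ == "__main__":
    narrow = impact_factor(ITEMS, {"research article", "review"})
    broad = impact_factor(ITEMS, set(ITEMS))  # every item type counted as citable
    print(f"citable = articles and reviews: {narrow:.2f}")  # 5.25
    print(f"citable = all item types:       {broad:.2f}")   # 3.75
```

With these made-up numbers, reclassifying the 80 editorials and letters as uncitable raises the score from 3.75 to 5.25, a 40% increase, without a single additional citation.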

[66] Publishers routinely discuss with Clarivate how to improve the "accuracy" of their journals' impact factors and thereby obtain higher scores.

[41][25] Such discussions routinely produce "negotiated values" that result in dramatic changes in the observed scores of dozens of journals, sometimes following unrelated events such as a journal's purchase by one of the larger publishers.

[70] Furthermore, the relationship between journals' impact factors and the citation rates of the papers they publish has been weakening steadily since articles became available digitally.

One study indicated that, by querying open-access or partly open-access databases such as Google Scholar, ResearchGate, and Scopus, it is possible to calculate approximate impact factors without having to purchase Web of Science / JCR.
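
Such an approximation can be sketched in Python under stated assumptions. Each record is a hypothetical export row giving an item's publication year, whether the user judges it citable, and the year of each citation it received. None of the databases named above is assumed to export data in exactly this form, so the `Article` record type and the `approximate_impact_factor` function are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Article:
    """One item as it might be exported from a citation database (hypothetical format)."""
    pub_year: int
    # Open databases may not label item types, so this flag is assumed
    # to be assigned by whoever compiles the records.
    citable: bool = True
    # Year in which each incoming citation was made, one entry per citation.
    citing_years: list[int] = field(default_factory=list)


def approximate_impact_factor(articles: list[Article], year: int) -> float:
    """Approximate two-year impact factor for census `year` from per-item records."""
    window = {year - 1, year - 2}
    in_window = [a for a in articles if a.pub_year in window]
    # Numerator: citations made during `year` to any item from the window.
    citations = sum(a.citing_years.count(year) for a in in_window)
    # Denominator: only items from the window flagged as citable.
    citable = sum(1 for a in in_window if a.citable)
    if citable == 0:
        raise ValueError(f"no citable items published in {year - 2}-{year - 1}")
    return citations / citable


if __name__ == "__main__":
    records = [
        Article(2021, citing_years=[2022, 2023, 2023]),
        Article(2022, citing_years=[2023, 2023, 2024]),
        Article(2022, citable=False, citing_years=[2023]),  # e.g. an editorial
        Article(2020, citing_years=[2023, 2023]),  # outside the 2023 window
    ]
    print(approximate_impact_factor(records, 2023))  # 2.5
```

Because the result depends on whichever citations the queried database happens to index, and on how the user classifies items, it can only approximate the JCR figure.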

[78] Given the growing criticism of the journal impact factor and its widespread use as a means of research assessment, organisations and institutions have begun to take steps to move away from it.

[87] The journal Nature criticised the over-reliance on the JIF, pointing not only to its statistical flaws but also to its negative effects on science: "The resulting pressures and disappointments are nothing but demoralizing, and in badly run labs can encourage sloppy research that, for example, fails to test assumptions thoroughly or to take all the data into account before submitting big claims."

[90][91] This followed a 2018 decision by the main Dutch funding body for research, NWO, to remove all references to journal impact factors and the h-index in all call texts and application forms.

An open letter signed by over 150 Dutch academics argued that, while imperfect, the JIF is still useful, and that omitting it "will lead to randomness and a compromising of scientific quality".

As with the impact factor, there are some nuances to this: for example, Clarivate excludes certain article types (such as news items, correspondence, and errata) from the denominator.