Direct Rendering Manager

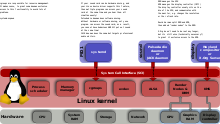

The Direct Rendering Manager (DRM) is a subsystem of the Linux kernel responsible for interfacing with the GPUs of modern video cards.

DRM exposes an API that user-space programs can use to send commands and data to the GPU and perform operations such as configuring the mode setting of the display.
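As an illustration of how a user-space program talks to this API, the following minimal sketch queries the driver name and version of a DRM device through an ioctl. It assumes a Linux system using the generic ioctl number layout (as on x86 and ARM), and a device node at `/dev/dri/card0`; the helper names `iowr` and `driver_version` are hypothetical and chosen only for this example. The structure mirrors `struct drm_version` from the kernel's `drm.h` header.

```python
import ctypes
import fcntl
import os

DRM_IOCTL_BASE = ord("d")  # ioctl "type" byte used by DRM in the kernel's drm.h


def iowr(nr, size):
    """Encode a read/write ioctl number (generic Linux layout, e.g. x86/ARM)."""
    return (3 << 30) | (size << 16) | (DRM_IOCTL_BASE << 8) | nr


class DrmVersion(ctypes.Structure):
    """Mirror of struct drm_version from the kernel's drm.h."""
    _fields_ = [
        ("version_major", ctypes.c_int),
        ("version_minor", ctypes.c_int),
        ("version_patchlevel", ctypes.c_int),
        ("name_len", ctypes.c_size_t),
        ("name", ctypes.c_void_p),
        ("date_len", ctypes.c_size_t),
        ("date", ctypes.c_void_p),
        ("desc_len", ctypes.c_size_t),
        ("desc", ctypes.c_void_p),
    ]


DRM_IOCTL_VERSION = iowr(0x00, ctypes.sizeof(DrmVersion))


def driver_version(path="/dev/dri/card0"):
    """Return (driver name, major, minor, patchlevel) for a DRM device node.

    The default path is an assumption; the node may differ or be absent.
    """
    fd = os.open(path, os.O_RDWR)
    try:
        # Zero-filled buffer; the kernel copies at most name_len bytes into it.
        name = ctypes.create_string_buffer(64)
        ver = DrmVersion(name_len=len(name) - 1, name=ctypes.addressof(name))
        fcntl.ioctl(fd, DRM_IOCTL_VERSION, ver)
        return (name.value.decode(), ver.version_major,
                ver.version_minor, ver.version_patchlevel)
    finally:
        os.close(fd)
```

Calling `driver_version()` on a machine with a DRM driver loaded would return a tuple whose first element is the kernel driver name; the exact values depend on the hardware and kernel in use.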

DRM was first developed as the kernel-space component of the X Server Direct Rendering Infrastructure,[1] but since then it has been used by other graphics stack alternatives such as Wayland and by standalone applications and libraries such as SDL2 and Kodi.

The Linux kernel already had an API called fbdev, used to manage the framebuffer of a graphics adapter,[2] but it could not handle the needs of modern 3D-accelerated GPU-based video hardware.

Programs wishing to use the GPU send requests to DRM, which acts as an arbitrator and takes care to avoid possible conflicts.

The scope of DRM has been expanded over the years to cover more functionality previously handled by user-space programs, such as framebuffer management and mode setting, memory-sharing objects and memory synchronization.

[10] The use of libdrm not only avoids exposing the kernel interface directly to applications, but also offers the usual advantages of reusing and sharing code between programs.

[8] When a specific DRM driver provides an enhanced API, user-space libdrm is also extended by an extra library libdrm-driver that can be used by user space to interface with the additional ioctls.

Several operations (ioctls) in the DRM API must be restricted to a single user-space process per device, either for security or for concurrency reasons.
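The kernel's `drm.h` header defines a pair of argument-less ioctls, `DRM_IOCTL_SET_MASTER` and `DRM_IOCTL_DROP_MASTER`, through which a process can request or give up this exclusive role on a device. The sketch below shows how they might be invoked; it assumes the generic Linux ioctl number layout, and the helper names `io`, `try_become_master` and `drop_master` are hypothetical.

```python
import fcntl

DRM_IOCTL_BASE = ord("d")  # ioctl "type" byte used by DRM in the kernel's drm.h


def io(nr):
    """Encode an ioctl number that carries no argument (generic Linux layout)."""
    return (DRM_IOCTL_BASE << 8) | nr


DRM_IOCTL_SET_MASTER = io(0x1E)
DRM_IOCTL_DROP_MASTER = io(0x1F)


def try_become_master(fd):
    """Return True if the kernel granted the exclusive role on this open device."""
    try:
        fcntl.ioctl(fd, DRM_IOCTL_SET_MASTER)
        return True
    except OSError:
        # Typically refused when another process already holds the role
        # or the caller lacks the required privileges.
        return False


def drop_master(fd):
    """Give the exclusive role back so that another process can take it."""
    fcntl.ioctl(fd, DRM_IOCTL_DROP_MASTER)
```

Here `fd` is expected to be a file descriptor obtained by opening a primary device node such as `/dev/dri/card0`.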

When a user-space program needs a chunk of video memory (to store a framebuffer, texture or any other data required by the GPU[15]), it requests the allocation from the DRM driver using the GEM API.

[5] When a user-space graphics application wants access to a certain buffer object (usually to fill it with content), TTM may require relocating it to a type of memory addressable by the CPU.

[14][12]: 17 For the development of PRIME, two new ioctls were added to the DRM API: one to convert a local GEM handle to a DMA-BUF file descriptor, and another for the exact opposite operation.
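The two conversions can be sketched as follows. This is a minimal illustration that assumes the generic Linux ioctl number layout and the `struct drm_prime_handle` layout from the kernel's `drm.h` (a 32-bit handle, 32-bit flags and a signed 32-bit file descriptor); the function names `handle_to_fd` and `fd_to_handle` are hypothetical.

```python
import fcntl
import os
import struct

DRM_IOCTL_BASE = ord("d")  # ioctl "type" byte used by DRM in the kernel's drm.h


def iowr(nr, size):
    """Encode a read/write ioctl number (generic Linux layout, e.g. x86/ARM)."""
    return (3 << 30) | (size << 16) | (DRM_IOCTL_BASE << 8) | nr


PRIME_FMT = "IIi"  # struct drm_prime_handle: handle, flags, fd
DRM_IOCTL_PRIME_HANDLE_TO_FD = iowr(0x2D, struct.calcsize(PRIME_FMT))
DRM_IOCTL_PRIME_FD_TO_HANDLE = iowr(0x2E, struct.calcsize(PRIME_FMT))


def handle_to_fd(drm_fd, handle):
    """Export a local GEM handle as a DMA-BUF file descriptor."""
    arg = bytearray(struct.pack(PRIME_FMT, handle, os.O_CLOEXEC, -1))
    fcntl.ioctl(drm_fd, DRM_IOCTL_PRIME_HANDLE_TO_FD, arg)
    _, _, dmabuf_fd = struct.unpack(PRIME_FMT, arg)
    return dmabuf_fd


def fd_to_handle(drm_fd, dmabuf_fd):
    """Import a DMA-BUF file descriptor as a GEM handle local to drm_fd."""
    arg = bytearray(struct.pack(PRIME_FMT, 0, 0, dmabuf_fd))
    fcntl.ioctl(drm_fd, DRM_IOCTL_PRIME_FD_TO_HANDLE, arg)
    handle, _, _ = struct.unpack(PRIME_FMT, arg)
    return handle
```

In a buffer-sharing scenario, one device would export a buffer with `handle_to_fd`, and the resulting descriptor would then be passed to `fd_to_handle` on a file descriptor opened on the other device.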

In the early days, user-space programs that wanted to use the graphical framebuffer were also responsible for providing the mode-setting operations,[3] and therefore they needed to run with privileged access to the video hardware.

In Unix-type operating systems, the X Server was the most prominent example, and its mode-setting implementation lived in the DDX driver for each specific type of video card.

[42] The user-space mode-setting approach also caused other issues.[43][42] To address these problems, the mode-setting code was moved to a single place inside the kernel, specifically to the existing DRM module.

The most immediate benefit is the removal of duplicate mode-setting code from both the kernel (Linux console, fbdev) and user space (X Server DDX drivers).

[42][43] By providing centralized mode management, KMS solves the flickering issues that occur when switching between the console and X, and also between different instances of X (fast user switching).

[45] For example, mode recovery after a suspend/resume cycle is much simpler when managed by the kernel itself, which incidentally improves security (user-space tools no longer require root permissions).

[56][9] Clients that use a direct rendering model, and applications that want to take advantage of the computing facilities of a GPU, can do so without additional privileges by opening any existing render node and dispatching GPU operations using the limited subset of the DRM API supported by those nodes, provided they have file system permissions to open the device file.
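A program can discover which render nodes it is allowed to open with a simple directory scan. The sketch below assumes the conventional Linux naming, in which render nodes appear as `/dev/dri/renderD<N>` and primary nodes as `/dev/dri/card<N>`; the helper names `node_kind` and `usable_render_nodes` are hypothetical.

```python
import glob
import os


def node_kind(name):
    """Classify a DRM device file name by its conventional prefix."""
    if name.startswith("renderD"):
        return "render"
    if name.startswith("card"):
        return "primary"
    return "other"


def usable_render_nodes(dev_dir="/dev/dri"):
    """List render nodes that the current process may open for read and write."""
    paths = sorted(glob.glob(os.path.join(dev_dir, "renderD*")))
    return [p for p in paths if os.access(p, os.R_OK | os.W_OK)]
```

An empty list from `usable_render_nodes()` would indicate either that no render-capable driver is loaded or that the file system permissions deny access to the current user.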

[9][56] The Linux DRM subsystem includes free and open-source drivers to support hardware from the three main manufacturers of GPUs for desktop computers (AMD, NVIDIA and Intel), as well as from a growing number of mobile GPU and system on a chip (SoC) integrators.

[128] In 1999, while developing DRI for XFree86, Precision Insight created the first version of DRM for the 3dfx video cards, as a Linux kernel patch included within the Mesa source code.

[42] In December 2007 Jerome Glisse started to add the native mode-setting code for ATI cards to the radeon DRM driver.

The first attempt was the Translation Table Maps (TTM) memory manager, developed by Thomas Hellstrom (Tungsten Graphics) in collaboration with Emma Anholt (Intel) and Dave Airlie (Red Hat).

[5] TTM was proposed for inclusion into mainline kernel 2.6.25 in November 2007,[5] and again in May 2008, but was abandoned in favor of a new approach called Graphics Execution Manager (GEM).

[142] With memory management in place to handle buffer objects, DRM developers could finally add to the kernel the already finished API and code to do mode setting.

[154] In early 2011, during the Linux 2.6.39 release cycle, the so-called dumb buffers—a hardware-independent non-accelerated way to handle simple buffers suitable for use as framebuffers—were added to the KMS API.

[155][156] The goal was to reduce the complexity of applications such as Plymouth that don't need to use special accelerated operations provided by driver-specific ioctls.
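Creating and destroying a dumb buffer can be sketched as follows. The example assumes the generic Linux ioctl number layout and the `struct drm_mode_create_dumb` and `struct drm_mode_destroy_dumb` layouts from the kernel's `drm_mode.h`; the function names `create_dumb` and `destroy_dumb` are hypothetical, and the caller is expected to pass a file descriptor opened on a KMS-capable device node.

```python
import fcntl
import struct

DRM_IOCTL_BASE = ord("d")  # ioctl "type" byte used by DRM in the kernel's drm.h


def iowr(nr, size):
    """Encode a read/write ioctl number (generic Linux layout, e.g. x86/ARM)."""
    return (3 << 30) | (size << 16) | (DRM_IOCTL_BASE << 8) | nr


# struct drm_mode_create_dumb: height, width, bpp, flags, handle, pitch, size
CREATE_DUMB_FMT = "IIIIIIQ"
# struct drm_mode_destroy_dumb: handle
DESTROY_DUMB_FMT = "I"

DRM_IOCTL_MODE_CREATE_DUMB = iowr(0xB2, struct.calcsize(CREATE_DUMB_FMT))
DRM_IOCTL_MODE_DESTROY_DUMB = iowr(0xB4, struct.calcsize(DESTROY_DUMB_FMT))


def create_dumb(fd, width, height, bpp=32):
    """Allocate a dumb buffer; return (handle, pitch in bytes, size in bytes)."""
    # The caller fills in height, width and bpp; the kernel fills in the rest.
    arg = bytearray(struct.pack(CREATE_DUMB_FMT, height, width, bpp, 0, 0, 0, 0))
    fcntl.ioctl(fd, DRM_IOCTL_MODE_CREATE_DUMB, arg)
    _, _, _, _, handle, pitch, size = struct.unpack(CREATE_DUMB_FMT, arg)
    return handle, pitch, size


def destroy_dumb(fd, handle):
    """Release a dumb buffer previously returned by create_dumb."""
    fcntl.ioctl(fd, DRM_IOCTL_MODE_DESTROY_DUMB,
                struct.pack(DESTROY_DUMB_FMT, handle))
```

Because no driver-specific ioctl is involved, the same calls would be expected to work on any driver that implements the dumb buffer interface, which is what makes it attractive for simple clients.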

[7] Airlie resumed his work on PRIME in late 2011, this time basing it on the new DMA-BUF buffer sharing mechanism introduced in Linux kernel 3.3.

Therefore, the main users of DRM were DRI clients that link to the hardware-accelerated OpenGL implementation that lives in the Mesa 3D library, as well as the X Server itself.

[196] In 2015, version 358.09 (beta) of the proprietary Nvidia GeForce driver received support for the DRM mode-setting interface implemented as a new kernel blob called nvidia-modeset.ko.