Kernel (operating system)

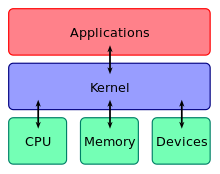

It handles the rest of startup as well as memory, peripherals, and input/output (I/O) requests from software, translating them into data-processing instructions for the central processing unit.

In contrast, application programs such as browsers, word processors, or audio or video players use a separate area of memory, user space.

Monolithic kernels run entirely in a single address space with the CPU executing in supervisor mode, mainly for speed.

If memory isolation is in use, it is impossible for a user process to call the kernel directly, because that would be a violation of the processor's access control rules.
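As an illustration of how a user process reaches kernel services indirectly, the sketch below uses Python's `os` module, whose `pipe`, `write`, and `read` functions are thin wrappers around the corresponding system calls on Unix-like systems. The helper name `echo_through_kernel` is hypothetical, and the example assumes a payload small enough to fit in the kernel's pipe buffer.

```python
import os


def echo_through_kernel(payload: bytes) -> bytes:
    """Send bytes through a kernel-managed pipe and read them back.

    Hypothetical helper for illustration only. The process never touches
    the pipe buffer itself; every step is a request made to the kernel.
    """
    read_fd, write_fd = os.pipe()              # kernel allocates the pipe buffer
    try:
        os.write(write_fd, payload)            # kernel copies data into its buffer
        return os.read(read_fd, len(payload))  # kernel copies data back out
    finally:
        os.close(read_fd)
        os.close(write_fd)
```

The file descriptors returned by `os.pipe()` are only small integers; the buffer they refer to lives in kernel memory, which is why the process has to ask the kernel for each transfer.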

[5] The mechanisms or policies provided by the kernel can be classified according to several criteria, including: static (enforced at compile time) or dynamic (enforced at run time); pre-emptive or post-detection; according to the protection principles they satisfy (e.g., Denning[8][9]); whether they are hardware supported or language based; whether they are more an open mechanism or a binding policy; and many more.

In this approach, each protected object must reside in an address space that the application does not have access to; the kernel also maintains a list of capabilities in such memory.
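A toy model may make the idea concrete. In the sketch below, the class `ToyKernel`, its method names, and the use of random tokens as capabilities are all assumptions made for illustration; a real kernel would keep this table in memory the application cannot address, whereas Python can only hint at that with underscore-prefixed attributes.

```python
import secrets


class ToyKernel:
    """Illustrative capability table; not modelled on any real kernel."""

    def __init__(self):
        self._objects = {}       # protected objects, never handed out directly
        self._capabilities = {}  # token -> (object id, allowed rights)

    def create_object(self, value, rights):
        """Store an object and return an unguessable capability token for it."""
        obj_id = len(self._objects)
        self._objects[obj_id] = value
        token = secrets.token_hex(8)
        self._capabilities[token] = (obj_id, frozenset(rights))
        return token

    def _check(self, token, right):
        """Look up the capability and verify that it grants the given right."""
        entry = self._capabilities.get(token)
        if entry is None or right not in entry[1]:
            raise PermissionError(f"capability does not grant {right!r}")
        return entry[0]

    def read(self, token):
        return self._objects[self._check(token, "read")]

    def write(self, token, value):
        self._objects[self._check(token, "write")] = value
```

An application holding a token created with only the `"read"` right can call `read` but gets a `PermissionError` from `write`, because every access is checked against the kernel's own list.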

[11][16][17][18][19] One approach is to use firmware and kernel support for fault tolerance (see above), and build the security policy for malicious behavior on top of that (adding features such as cryptography mechanisms where necessary), delegating some responsibility to the compiler.

The processor monitors the execution and stops a program that violates a rule, such as a user process that tries to write to kernel memory.
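The check can be pictured with a small simulation. Everything here is hypothetical: the class `ToyCPU`, the `ProtectionFault` exception, and the choice of `0x8000` as the boundary between user and kernel memory are assumptions for illustration, not a description of any real processor.

```python
KERNEL_BASE = 0x8000  # assumed boundary: addresses at or above this belong to the kernel


class ProtectionFault(Exception):
    """Raised when a store violates the simulated access rule."""


class ToyCPU:
    """Simulated processor with a single supervisor/user mode bit."""

    def __init__(self, size=0x10000):
        self.memory = bytearray(size)
        self.supervisor = False  # start in user mode

    def store(self, addr, value):
        """Write one byte, refusing user-mode stores into kernel memory."""
        if addr >= KERNEL_BASE and not self.supervisor:
            raise ProtectionFault(f"user-mode store to {addr:#x}")
        self.memory[addr] = value
```

In user mode, `store(0x9000, 1)` raises `ProtectionFault`; the same store succeeds once `supervisor` is set, which stands in for the processor having switched to supervisor mode.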

[25] A number of other approaches (either lower- or higher-level) are available as well, with many modern kernels providing support for systems such as shared memory and remote procedure calls.
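As a rough illustration of kernel-backed shared memory, the sketch below uses Python's standard `multiprocessing.shared_memory` module (Python 3.8 or later). The helper name `share_bytes` is hypothetical, and for brevity both handles are opened in the same process; in practice the second handle would typically be opened by a different process that was given the segment's name.

```python
from multiprocessing import shared_memory


def share_bytes(payload: bytes) -> bytes:
    """Write payload into a shared segment and read it back via a second handle.

    Illustrative only; payload must be non-empty because a segment
    cannot have size zero.
    """
    owner = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        owner.buf[:len(payload)] = payload
        # A peer attaches to the same segment using only its name.
        peer = shared_memory.SharedMemory(name=owner.name)
        try:
            return bytes(peer.buf[:len(payload)])
        finally:
            peer.close()
    finally:
        owner.close()
        owner.unlink()  # ask the operating system to release the segment
```

Once the segment exists, reads and writes go straight to memory without a system call per access, which is the main attraction of shared memory over message-based mechanisms.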

[5][25] The failure to properly fulfill this separation is one of the major causes of the lack of substantial innovation in existing operating systems,[5] a problem common in computer architecture.

[33] This link between monolithic design and "privileged mode" can be traced back to the key issue of mechanism-policy separation;[5] in fact, the "privileged mode" architectural approach melds the protection mechanism with the security policies, while the major alternative architectural approach, capability-based addressing, clearly distinguishes between the two, leading naturally to a microkernel design.

Every part that most programs need to access and that cannot be put in a library is in kernel space: device drivers, scheduler, memory handling, file systems, and network stacks.

A monolithic kernel, while initially loaded with subsystems that may not be needed, can be tuned to a point where it is as fast as or faster than one designed specifically for the hardware, while remaining more general-purpose.

In fact, there are some versions that are small enough to fit together with a large number of utilities and other programs on a single floppy disk and still provide a fully functional operating system (one of the most popular of which is muLinux).

These types of kernels consist of the core functions of the operating system and the device drivers with the ability to load modules at runtime.

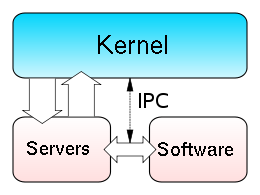

The microkernel approach consists of defining a simple abstraction over the hardware, with a set of primitives or system calls to implement minimal OS services such as memory management, multitasking, and inter-process communication.

Many critical parts are now running in user space: The complete scheduler, memory handling, file systems, and network stacks.

In a microkernel, only the most fundamental tasks are performed, such as accessing some (not necessarily all) of the hardware, managing memory, and coordinating message passing between processes.
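The division of labour can be sketched with a toy model in which the kernel does nothing but move messages between mailboxes, while a file service runs as an ordinary user-space routine. The names `ToyMicrokernel`, `file_server_step`, and the mailbox labels are hypothetical and chosen only for illustration.

```python
from collections import deque


class ToyMicrokernel:
    """Illustrative kernel whose only job is passing messages."""

    def __init__(self):
        self._mailboxes = {}

    def register(self, name):
        """Create an empty mailbox for a process."""
        self._mailboxes[name] = deque()

    def send(self, dest, message):
        """Queue a message for the destination process."""
        self._mailboxes[dest].append(message)

    def receive(self, name):
        """Return the oldest pending message, or None if the mailbox is empty."""
        box = self._mailboxes[name]
        return box.popleft() if box else None


def file_server_step(kernel, files):
    """User-space file server: answer at most one pending request."""
    request = kernel.receive("fs")
    if request is None:
        return
    sender, path = request
    kernel.send(sender, files.get(path))  # reply with contents, or None if missing
```

An application would send `("app", "/etc/motd")` to the `"fs"` mailbox and later receive the reply in its own mailbox; the kernel never interprets the request, it only delivers it.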

These types of kernels normally provide only minimal services, such as defining memory address spaces, inter-process communication (IPC), and process management.

[citation needed] As a result, the design of Linux as a monolithic kernel rather than a microkernel was the topic of a famous debate between Linus Torvalds and Andrew Tanenbaum.

[36] The monolithic model tends to be more efficient[37] through the use of shared kernel memory, rather than the slower IPC system of microkernel designs, which is typically based on message passing.

It therefore remained to be studied whether the way to build an efficient microkernel was, unlike in previous attempts, to apply the correct construction techniques.

When a kernel module is loaded, it accesses the monolithic portion's memory space and adds what it needs to it, opening the door to possible pollution.
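One way to picture this kind of pollution is a shared symbol table that modules write into. In the toy model below, the class `ToyModularKernel`, its `symbols` dictionary, and the `load_module` method are hypothetical; real kernels use quite different mechanisms, and the sketch only shows why sharing one space lets a module disturb what was already there.

```python
class ToyModularKernel:
    """Illustrative kernel with one symbol table shared by all modules."""

    def __init__(self):
        self.symbols = {"schedule": lambda: "core scheduler"}

    def load_module(self, exports):
        """Merge a module's exports into the shared table.

        Returns the sorted names of existing symbols the module overwrote.
        """
        clobbered = sorted(set(exports) & set(self.symbols))
        self.symbols.update(exports)  # no isolation: the module writes directly
        return clobbered
```

Loading a module that exports its own `schedule` silently replaces the core one; the return value makes that collision visible, which a shared address space on its own would not.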

Some of the disadvantages of the modular approach are:

A nanokernel delegates virtually all services, including even the most basic ones such as interrupt controllers or the timer, to device drivers, making the kernel's memory requirement even smaller than that of a traditional microkernel.

This comes down to every user writing their own rest of the kernel from near scratch, which is a very risky, complex, and daunting assignment, particularly in a time-constrained, production-oriented environment, which is why exokernels have never caught on.

One of the major developments during this era was time-sharing, whereby a number of users would get small slices of computer time, at a rate at which it appeared they were each connected to their own, slower, machine.
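The slicing can be illustrated with a simple round-robin simulation. This is a minimal sketch, not a description of any historical scheduler: the function name `round_robin`, the job names, and the abstract "time units" are all assumptions.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate time-sharing over jobs given as {name: time units needed}.

    Returns the list of (name, units_run) slices in execution order.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            queue.append((name, remaining - ran))  # unfinished: back of the queue
    return timeline
```

For example, `round_robin({"alice": 3, "bob": 1}, 2)` returns `[("alice", 2), ("bob", 1), ("alice", 1)]`: each user makes progress in turn rather than waiting for the others to finish.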

During the design phase of Unix, programmers decided to model every high-level device as a file, because they believed the purpose of computation was data transformation.
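The practical effect of this model is that one set of calls works for devices and regular files alike. The sketch below assumes a Unix-like system and uses Python's `os` module; the helper name `write_via_file_api` is hypothetical, and `os.devnull` resolves to the null device (`/dev/null` on Unix).

```python
import os


def write_via_file_api(path, data: bytes) -> int:
    """Write bytes to any existing path using the plain file interface.

    Returns the number of bytes the kernel reports as written.
    """
    fd = os.open(path, os.O_WRONLY)  # same call for a device or a regular file
    try:
        return os.write(fd, data)
    finally:
        os.close(fd)
```

Calling `write_via_file_api(os.devnull, b"hello")` and calling it on an ordinary file look identical to the program; only the kernel knows that one destination is a device that discards its input.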

Over the years the computing model changed, and Unix's treatment of everything as a file or byte stream was no longer as universally applicable as it had been.

Microsoft also developed Windows NT, an operating system with a very similar interface, but intended for high-end and business users.

In the 1970s, IBM further abstracted the supervisor state from the hardware, resulting in a hypervisor that enabled full virtualization, i.e. the capacity to run multiple operating systems on the same machine totally independently from each other.