Load balancing (computing)

Perfect knowledge of the execution time of each task makes it possible to reach an optimal load distribution (see the prefix sum algorithm).
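As an illustration of how known execution times can drive the distribution, the following minimal sketch cuts an ordered task list into contiguous chunks whose total times are roughly equal, using a prefix sum. The function name `split_by_prefix_sum` and its parameters are assumptions made for this example, and the cut rule is one simple choice rather than a definitive algorithm.

```python
from bisect import bisect_left
from itertools import accumulate


def split_by_prefix_sum(task_times, num_processors):
    """Split an ordered list of task times into contiguous chunks.

    Hypothetical helper: processor p receives tasks up to the point where
    the running total first reaches p / num_processors of the total time.
    """
    prefix = list(accumulate(task_times))  # prefix[i] = time of tasks 0..i
    total = prefix[-1] if prefix else 0
    chunks, start = [], 0
    for p in range(1, num_processors + 1):
        if p == num_processors:
            end = len(task_times)  # last processor takes whatever remains
        else:
            target = total * p / num_processors
            # first index whose running total reaches this cumulative share
            end = min(bisect_left(prefix, target, lo=start) + 1, len(task_times))
        chunks.append(task_times[start:end])
        start = end
    return chunks


if __name__ == "__main__":
    for chunk in split_by_prefix_sum([3, 1, 4, 1, 5, 9, 2, 6], 3):
        print(chunk, sum(chunk))
```

Because tasks here are indivisible, the chunks are only approximately balanced; the split becomes exact only when tasks can be subdivided at the cut points.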

First of all, in the fortunate scenario of having tasks of relatively homogeneous size, it is possible to consider that each of them will require approximately the average execution time.

Based on the execution times previously observed for tasks with similar metadata, it is possible to make statistical inferences about the duration of a future task.
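One simple way to make such an inference is to keep a running mean of observed durations per metadata key. The sketch below assumes a hypothetical `DurationEstimator` class and uses a string label such as `"resize"` as the metadata; real systems may use richer features and statistics.

```python
from collections import defaultdict


class DurationEstimator:
    """Estimate task duration from the mean of past tasks with the same key."""

    def __init__(self, default=1.0):
        self.default = default  # assumed fallback when no history exists
        self._total = defaultdict(float)
        self._count = defaultdict(int)

    def record(self, kind, seconds):
        """Store one observed execution time for tasks of this kind."""
        self._total[kind] += seconds
        self._count[kind] += 1

    def estimate(self, kind):
        """Return the mean observed time, or the default if none was seen."""
        n = self._count.get(kind, 0)
        return self._total[kind] / n if n else self.default


if __name__ == "__main__":
    est = DurationEstimator()
    est.record("resize", 2.0)
    est.record("resize", 4.0)
    print(est.estimate("resize"), est.estimate("transcode"))
```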

Therefore, static load balancing aims to associate a known set of tasks with the available processors in order to minimize a certain performance function.
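A minimal sketch of a static assignment is shown here, assuming the performance function is the makespan (the finishing time of the busiest processor). It uses the well-known greedy "longest processing time first" heuristic, which is one possible choice and not necessarily optimal; the name `assign_static` is hypothetical.

```python
import heapq


def assign_static(task_times, num_processors):
    """Assign every task before execution, longest tasks first.

    Returns (assignment, makespan), where assignment[p] lists the task
    indices given to processor p.
    """
    heap = [(0.0, p) for p in range(num_processors)]  # (current load, processor)
    assignment = [[] for _ in range(num_processors)]
    # Visit tasks from longest to shortest; ties keep their original order.
    for task_id, t in sorted(enumerate(task_times), key=lambda item: -item[1]):
        load, p = heapq.heappop(heap)  # least-loaded processor so far
        assignment[p].append(task_id)
        heapq.heappush(heap, (load + t, p))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


if __name__ == "__main__":
    print(assign_static([5, 3, 3, 2, 2, 1], 2))
```

The whole mapping is computed up front from the known task times, which is what makes the approach static.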

The advantage of static algorithms is that they are easy to set up and extremely efficient in the case of fairly regular tasks (such as processing HTTP requests from a website).

While dynamic algorithms are much more complicated to design, they can produce excellent results, in particular when the execution time varies greatly from one task to another.
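To illustrate why assigning work at run time helps with uneven tasks, the sketch below lets worker threads pull the next task from a shared queue as soon as they become idle, so a worker stuck on a long task does not delay the others. The function `run_dynamic` is hypothetical, and `time.sleep` stands in for real work.

```python
import queue
import threading
import time


def run_dynamic(task_times, num_workers):
    """Run tasks with idle workers pulling work from a shared queue.

    Returns done, where done[w] lists the task indices worker w executed.
    """
    tasks = queue.Queue()
    for task_id, t in enumerate(task_times):
        tasks.put((task_id, t))
    done = [[] for _ in range(num_workers)]

    def worker(w):
        while True:
            try:
                task_id, t = tasks.get_nowait()
            except queue.Empty:
                return  # no work left
            time.sleep(t)  # placeholder for the actual computation
            done[w].append(task_id)

    threads = [threading.Thread(target=worker, args=(w,)) for w in range(num_workers)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return done


if __name__ == "__main__":
    print(run_dynamic([0.05, 0.01, 0.01, 0.01, 0.01], 2))
```

Which worker ends up with which task depends on timing, so the result differs between runs; only the set of completed tasks is fixed.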

Dynamic load balancing architecture can be more modular since it is not mandatory to have a specific node dedicated to the distribution of work.

[3] Obviously, a load balancing algorithm that requires too much communication in order to reach its decisions runs the risk of slowing down the resolution of the overall problem.

In the context of algorithms that run over the very long term (servers, cloud...), the computer architecture evolves over time.

An extremely important parameter of a load balancing algorithm is therefore its ability to adapt to scalable hardware architecture.

The efficiency of such an algorithm approaches that of the prefix sum approach when the time spent splitting jobs and communicating is small compared to the work to be done.

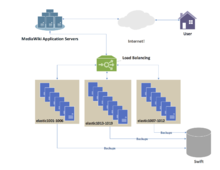

Commonly load-balanced systems include popular web sites, large Internet Relay Chat networks, high-bandwidth File Transfer Protocol (FTP) sites, Network News Transfer Protocol (NNTP) servers, Domain Name System (DNS) servers, and databases.

Round-robin DNS is an alternative method of load balancing that does not require a dedicated software or hardware node.
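The idea can be sketched as a name that owns several addresses, with the order of the answer rotated on every query so that successive clients tend to contact different servers. The class `RoundRobinRecord` below is a hypothetical model of that behaviour, not the API of any DNS server, and the addresses come from a documentation-only range.

```python
from collections import deque


class RoundRobinRecord:
    """Model of one DNS name that resolves to several server addresses."""

    def __init__(self, addresses):
        self._addresses = deque(addresses)

    def answer(self):
        """Return all addresses, then rotate so the next answer starts later."""
        response = list(self._addresses)
        self._addresses.rotate(-1)  # move the first address to the end
        return response


if __name__ == "__main__":
    record = RoundRobinRecord(["192.0.2.1", "192.0.2.2", "192.0.2.3"])
    for _ in range(4):
        print(record.answer())
```

Clients that simply use the first address in each answer are spread across the servers without any balancer sitting in the request path.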

Furthermore, the quickest DNS response to the resolver is nearly always the one from the network's closest server, ensuring geo-sensitive load-balancing [citation needed].

Usually, load balancers are implemented in high-availability pairs which may also replicate session persistence data if required by the specific application.

[13] Certain applications are programmed with immunity to this problem, by offsetting the load balancing point over differential sharing platforms beyond the defined network.

[14] Numerous scheduling algorithms, also called load-balancing methods, are used by load balancers to determine which back-end server to send a request to.
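Two commonly cited load-balancing methods are round-robin and least connections. The sketch below shows one minimal way each could pick a back-end server; the class names and the `pick`/`release` methods are assumptions for illustration, not the interface of any particular load balancer.

```python
import itertools


class RoundRobin:
    """Hand requests to back ends in a fixed repeating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Hand each request to the back end with the fewest open connections."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self):
        # Ties go to the back end that was listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        """Call when a connection to this back end is closed."""
        self.active[backend] -= 1


if __name__ == "__main__":
    rr = RoundRobin(["a", "b", "c"])
    print([rr.pick() for _ in range(5)])
    lc = LeastConnections(["a", "b"])
    print(lc.pick(), lc.pick())
    lc.release("a")
    print(lc.pick())
```

Round-robin needs no feedback from the servers, while least connections tracks the current load and therefore adapts when some requests last much longer than others.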

A significant downside to this technique is its lack of automatic failover: if a backend server goes down, its per-session information becomes inaccessible, and any sessions depending on it are lost.
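The failover problem can be made concrete with a small sketch, assuming that the technique in question is session persistence ("sticky sessions"), where each session is pinned to one back end. The class `StickyBalancer` and its methods are hypothetical.

```python
class StickyBalancer:
    """Pin each session to the back end that served its first request."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._affinity = {}  # session id -> back end
        self._counter = 0

    def route(self, session_id):
        if session_id not in self._affinity:
            # A new session gets the next back end in rotation.
            backend = self.backends[self._counter % len(self.backends)]
            self._counter += 1
            self._affinity[session_id] = backend
        return self._affinity[session_id]

    def mark_failed(self, backend):
        """Remove a failed back end and return the sessions that were lost."""
        self.backends.remove(backend)
        lost = [s for s, b in self._affinity.items() if b == backend]
        for s in lost:
            del self._affinity[s]
        return lost


if __name__ == "__main__":
    lb = StickyBalancer(["a", "b"])
    print(lb.route("s1"), lb.route("s2"), lb.route("s1"))
    print(lb.mark_failed("a"))
    print(lb.route("s1"))
```

After the failure, the affected session is routed to a surviving back end, but any state the failed server held for it only in memory is gone.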

The random assignment method also requires that clients maintain some state, which can be a problem, for example when a web browser has disabled the storage of cookies.

Storing session data on the client is generally the preferred solution: then the load balancer is free to pick any backend server to handle a request.
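A minimal sketch of client-side session storage follows, assuming the session is serialized into a cookie value and signed with an HMAC so that any back end sharing the key can verify it. The functions `encode_session` and `decode_session` and the `SECRET_KEY` value are hypothetical; the data is signed but not encrypted, so the client can still read it.

```python
import base64
import hashlib
import hmac
import json

SECRET_KEY = b"replace-with-a-real-secret"  # assumed to be shared by all back ends


def encode_session(data, key=SECRET_KEY):
    """Serialize session data and append an HMAC-SHA256 signature."""
    payload = base64.urlsafe_b64encode(json.dumps(data, sort_keys=True).encode("utf-8"))
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload.decode("ascii") + "." + signature


def decode_session(cookie, key=SECRET_KEY):
    """Verify the signature and return the session data, or raise ValueError."""
    payload, _, signature = cookie.rpartition(".")
    expected = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8")):
        raise ValueError("session cookie failed signature check")
    return json.loads(base64.urlsafe_b64decode(payload))


if __name__ == "__main__":
    cookie = encode_session({"user": "alice", "cart": [1, 2]})
    print(cookie)
    print(decode_session(cookie))
```

Because the whole payload travels with every request, this approach fits small session state and becomes costly as the payload grows.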

However, this method of state-data handling is poorly suited to some complex business logic scenarios, where session state payload is big and recomputing it with every request on a server is not feasible.

The fundamental feature of a load balancer is to be able to distribute incoming requests over a number of backend servers in the cluster according to a scheduling algorithm.

TRILL (Transparent Interconnection of Lots of Links) allows an Ethernet network to have an arbitrary topology, and enables per-flow pair-wise load splitting by way of Dijkstra's algorithm, without configuration or user intervention.

SPB allows all links to be active through multiple equal-cost paths, provides faster convergence times to reduce downtime, and simplifies the use of load balancing in mesh network topologies (partially connected and/or fully connected) by allowing traffic to load share across all paths of a network.

[23][24] SPB is designed to virtually eliminate human error during configuration and preserves the plug-and-play nature that established Ethernet as the de facto protocol at Layer 2.

[26] Load balancing is widely used in data center networks to distribute traffic across many existing paths between any two servers.
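One widely used way to spread traffic over several paths is to hash each flow's identifying fields and use the result to select a path, so that all packets of one flow follow the same route while different flows are spread out. The sketch below assumes a flow is described by a hypothetical 5-tuple and that the candidate paths are already known; `pick_path` is an illustrative name, and real switches typically use cheaper hardware hash functions than SHA-256.

```python
import hashlib


def pick_path(flow, paths):
    """Choose a path for a flow by hashing its identifying fields.

    flow is a tuple such as (src_ip, dst_ip, protocol, src_port, dst_port).
    The same flow always maps to the same path for a given path list.
    """
    key = "|".join(str(field) for field in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return paths[int.from_bytes(digest[:4], "big") % len(paths)]


if __name__ == "__main__":
    paths = ["spine-1", "spine-2", "spine-3", "spine-4"]  # hypothetical path names
    for port in range(50000, 50005):
        flow = ("10.0.0.1", "10.0.1.7", "tcp", port, 443)
        print(flow, "->", pick_path(flow, paths))
```

Keeping a flow on one path avoids packet reordering, at the cost of uneven load when a few flows carry most of the traffic.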

[28] Increasingly, load balancing techniques are being used to manage high-volume data ingestion pipelines that feed artificial intelligence training and inference systems—sometimes referred to as “AI factories.” These AI-driven environments require continuous processing of vast amounts of structured and unstructured data, placing heavy demands on networking, storage, and computational resources.

[29] To maintain the necessary high throughput and low latency, organizations commonly deploy load balancing tools capable of advanced TCP optimizations, connection pooling, and adaptive scheduling.

Such features help distribute incoming data requests evenly across servers or nodes, prevent congestion, and ensure that compute resources remain efficiently utilized.

By routing data locally (on-premises) or across private clouds, load balancers allow AI workflows to avoid public-cloud bandwidth limits, reduce transit costs, and maintain compliance with regulatory standards.