Pixel

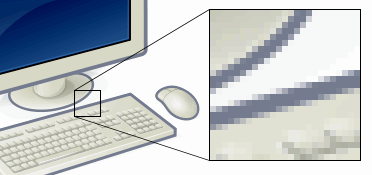

Software on early consumer computers was necessarily rendered at a low resolution, with large pixels visible to the naked eye; graphics made under these limitations may be called pixel art, especially in reference to video games.

[6] The word "pixel" was first published in 1965 by Frederic C. Billingsley of JPL, to describe the picture elements of scanned images from space probes to the Moon and Mars.

[7] Billingsley had learned the word from Keith E. McFarland, at the Link Division of General Precision in Palo Alto, who in turn said he did not know where it originated.

According to various etymologies, the earliest publication of the term "picture element" itself was in Wireless World magazine in 1927,[8] though it had been used earlier in various U.S. patents filed as early as 1911.

This list is not exhaustive; depending on context, synonyms include pel, sample, byte, bit, dot, and spot.

The measures "dots per inch" (dpi) and "pixels per inch" (ppi) are sometimes used interchangeably, but have distinct meanings, especially for printer devices, where dpi is a measure of the printer's density of dot (e.g. ink droplet) placement.

The word "raster" originates from television scanning patterns, and has been widely used to describe similar halftone printing and storage techniques.

On some systems, 32-bit depth is available: this means that each 24-bit pixel has an extra 8 bits to describe its opacity (for purposes of combining with another image).
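As a rough illustration of how the extra 8 bits can be used, the sketch below packs three 8-bit color channels and an 8-bit opacity value into one 32-bit integer, then combines a pixel with an opaque background. The RGBA byte order, the function names, and the use of the straight-alpha "over" blend are assumptions made for the example; real systems differ in channel order and in whether color is premultiplied by alpha.

```python
def pack_rgba(r, g, b, a):
    """Pack four 8-bit channels into one 32-bit integer (RGBA order is assumed)."""
    for channel in (r, g, b, a):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must fit in 8 bits")
    return (r << 24) | (g << 16) | (b << 8) | a


def unpack_rgba(pixel):
    """Split a 32-bit pixel back into its (r, g, b, a) channels."""
    return ((pixel >> 24) & 0xFF, (pixel >> 16) & 0xFF,
            (pixel >> 8) & 0xFF, pixel & 0xFF)


def blend_over(src_pixel, dst_rgb):
    """Composite a 32-bit source pixel over an opaque RGB background.

    Uses straight (non-premultiplied) alpha: out = a*src + (1 - a)*dst.
    """
    r, g, b, a = unpack_rgba(src_pixel)
    alpha = a / 255
    return tuple(round(alpha * s + (1 - alpha) * d)
                 for s, d in zip((r, g, b), dst_rgb))


if __name__ == "__main__":
    half_red = pack_rgba(255, 0, 0, 128)
    print(hex(half_red))
    print(blend_over(half_red, (0, 0, 255)))
```

A fully opaque source (alpha 255) replaces the background, while a fully transparent one (alpha 0) leaves it unchanged.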

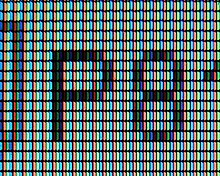

Therefore, the pixel grid is divided into single-color regions that contribute to the displayed or sensed color when viewed at a distance.

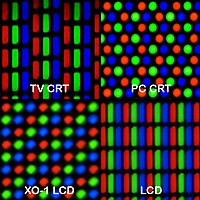

CRT displays use red, green, and blue phosphor areas whose layout is dictated by a mesh grid called the shadow mask, but aligning these areas with the displayed pixel raster would require a difficult calibration step, and so CRTs do not use subpixel rendering.

As screens are viewed at different distances (consider a phone, a computer display, and a TV), the desired length (a "reference pixel") is scaled relative to a reference viewing distance (28 inches (71 cm) in CSS).

The final "pixel" obtained after these two steps becomes the "anchor" on which all other absolute measurements (e.g. the "centimeter") are based.
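The scaling by viewing distance can be sketched numerically. The example below assumes the CSS convention that one pixel is 1/96 of an inch at the 28-inch reference distance, and treats the reference pixel as a fixed visual angle, so its physical length grows in proportion to viewing distance. The constant and function names are illustrative only.

```python
import math

CSS_REFERENCE_DPI = 96        # assumption: CSS defines 1 px as 1/96 inch
REFERENCE_DISTANCE_IN = 28.0  # reference viewing distance quoted above


def reference_pixel_angle_deg():
    """Visual angle subtended by one reference pixel at the reference distance."""
    half_length = (1 / CSS_REFERENCE_DPI) / 2
    return math.degrees(2 * math.atan(half_length / REFERENCE_DISTANCE_IN))


def reference_pixel_length_in(viewing_distance_in):
    """Length (in inches) that subtends the same angle at another distance.

    For a fixed visual angle the length scales linearly with distance.
    """
    return viewing_distance_in / REFERENCE_DISTANCE_IN / CSS_REFERENCE_DPI


if __name__ == "__main__":
    print(f"angle: {reference_pixel_angle_deg():.4f} degrees")
    for distance in (12, 28, 120):  # roughly phone, desktop display, TV
        length_mm = reference_pixel_length_in(distance) * 25.4
        print(f"{distance:>4} in -> {length_mm:.3f} mm per reference pixel")
```

Under these assumptions a reference pixel is about 0.26 mm at 28 inches and proportionally larger for a screen viewed from farther away.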

Digital cameras use photosensitive electronics, either charge-coupled device (CCD) or complementary metal–oxide–semiconductor (CMOS) image sensors, consisting of a large number of single sensor elements, each of which records a measured intensity level.

In most digital cameras, the sensor array is covered with a patterned color filter mosaic having red, green, and blue regions in the Bayer filter arrangement so that each sensor element can record the intensity of a single primary color of light.

The camera interpolates the color information of neighboring sensor elements, through a process called demosaicing, to create the final image.
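To make the interpolation step concrete, here is a minimal sketch of one simple demosaicing approach: each output pixel keeps its own measured channel and estimates the other two by averaging same-colored samples in the surrounding 3×3 neighborhood. The RGGB tile layout and the function names are assumptions for the example, and cameras generally use more sophisticated, edge-aware methods than this.

```python
def bayer_channel(row, col):
    """Channel recorded at (row, col), assuming an RGGB Bayer tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Turn a 2-D list of single-channel samples into rows of (R, G, B) tuples."""
    height, width = len(raw), len(raw[0])
    if height < 2 or width < 2:
        raise ValueError("need at least one full 2x2 Bayer tile")
    image = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Gather same-channel samples from the (clipped) 3x3 neighborhood.
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    ch = bayer_channel(rr, cc)
                    sums[ch] += raw[rr][cc]
                    counts[ch] += 1
            pixel = {ch: sums[ch] / counts[ch] for ch in "RGB"}
            # Keep the value this sensor element actually measured.
            pixel[bayer_channel(r, c)] = float(raw[r][c])
            out_row.append((pixel["R"], pixel["G"], pixel["B"]))
        image.append(out_row)
    return image


if __name__ == "__main__":
    # Synthetic mosaic of a flat color: R=100, G=50, B=20 at every site.
    level = {"R": 100, "G": 50, "B": 20}
    raw = [[level[bayer_channel(r, c)] for c in range(4)] for r in range(4)]
    print(demosaic(raw)[0][0])
```

For a flat-colored scene like the synthetic one above, every output pixel recovers the same RGB triple; with real images, simple averaging of this kind tends to blur edges and produce color fringes, which is why more elaborate algorithms exist.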

These sensor elements are often called "pixels", even though they only record one channel (only red or green or blue) of the final color image.

The new P-MPix measure is claimed to be a more accurate and relevant value for photographers to consider when weighing up camera sharpness.

[22] As of mid-2013, the Sigma 35 mm f/1.4 DG HSM lens mounted on a Nikon D800 has the highest measured P-MPix.

[23] In August 2019, Xiaomi released the Redmi Note 8 Pro as the world's first smartphone with a 64 MP camera.