Metascience

These measures include the pre-registration of scientific studies and clinical trials as well as the founding of organizations such as CONSORT and the EQUATOR Network that issue guidelines for methodology and reporting.

The first Peer Review Congress, held in 1989 in Chicago, Illinois, is considered a pivotal moment in the founding of journalology as a distinct field.

[1] Critics argue that perverse incentives have created a publish-or-perish environment in academia that promotes the production of junk science, low-quality research, and false positives.

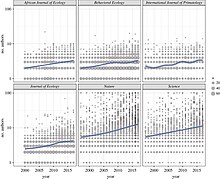

[50] Studies suggest that "metrics used to measure academic success, such as the number of publications, citation number, and impact factor, have not changed for decades" and have to some degree "ceased" to be good measures,[44][19] leading to issues such as "overproduction, unnecessary fragmentations, overselling, predatory journals (pay and publish), clever plagiarism, and deliberate obfuscation of scientific results so as to sell and oversell".

[52] This can be used to convert an unweighted citation network into a weighted one, which can then be used for importance assessment, deriving "impact metrics for the various entities involved, like the publications, authors etc"[53] as well as, among other tools, for search engines and recommendation systems.
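As a minimal sketch of the idea, the Python example below scores each cited paper by the sum of the weights of its incoming citations. The toy papers, the `mentions` weights (standing in for, say, how often a citing paper refers to the cited one in its text), and the function name are all hypothetical; the source does not specify a particular weighting scheme or metric.

```python
from collections import defaultdict


def weighted_citation_scores(citations, weight_of):
    """Sum the weight of every incoming citation for each cited paper."""
    scores = defaultdict(float)
    for citing, cited in citations:
        scores[cited] += weight_of(citing, cited)
    return dict(scores)


# Hypothetical toy network: (citing paper, cited paper) pairs.
citations = [("A", "C"), ("B", "C"), ("A", "D"), ("C", "D")]

# Hypothetical edge weights (an assumption, not taken from the source).
mentions = {("A", "C"): 3, ("B", "C"): 1, ("A", "D"): 1, ("C", "D"): 2}

# Unweighted network: every citation counts the same.
unweighted = weighted_citation_scores(citations, lambda s, t: 1.0)

# Weighted network: each citation counts in proportion to its weight.
weighted = weighted_citation_scores(
    citations, lambda s, t: float(mentions[(s, t)])
)

print(unweighted)
print(weighted)
```

In the unweighted network, papers C and D tie on citation count; once weights are applied, the two can be told apart, which is the kind of distinction a weighted network makes possible.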

Other incentives to govern science and related processes, including via metascience-based reforms, may include ensuring accountability to the public (for example, the accessibility of research, especially publicly funded research, or the serious treatment of research topics of public interest), increasing the qualified and productive scientific workforce, improving the efficiency of science so as to improve problem-solving in general, and ensuring that unambiguous societal needs grounded in solid scientific evidence, such as evidence about human physiology, are adequately prioritized and addressed.

[61] A study suggests that "[i]f peer review is maintained as the primary mechanism of arbitration in the competitive selection of research reports and funding, then the scientific community needs to make sure it is not arbitrary".

[62] It has been argued that "science has two fundamental attributes that underpin its value as a global public good: that knowledge claims and the evidence on which they are based are made openly available to scrutiny, and that the results of scientific research are communicated promptly and efficiently".

[64][65][66][67] A study found that the "main incentive academics are offered for using social media is amplification" and that it should be "moving towards an institutional culture that focuses more on how these [or such] platforms can facilitate real engagement with research".

Alternative metrics tools can be used not only to help with assessment (performance and impact)[61] and findability, but also to aggregate many of the public discussions about a scientific paper on social media such as Reddit, citations on Wikipedia, and news media reports about the study, which can in turn be analyzed in metascience or provided to and used by related tools.

The specific procedures of established altmetrics are not transparent,[70] and the algorithms used cannot be customized or altered by the user, as open source software can.

[80][81] Hotez suggests antiscience "has emerged as a dominant and highly lethal force, and one that threatens global security", and that there is a need for "new infrastructure" that mitigates it.

[63][85][86][87] Open access can save considerable financial resources, which could be put to other uses, and level the playing field for researchers in developing countries.

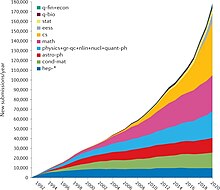

A study found that segments with different growth rates appear related to phases of "economic (e.g., industrialization)" – money is considered a necessary input to the science system – "and/or political developments (e.g., Second World War)".

[61] (see above) Science maps could show main interrelated topics within a certain scientific domain, their change over time, and their key actors (researchers, institutions, journals).

They may help find the factors that determine the emergence of new scientific fields and the development of interdisciplinary areas, and could be relevant for science policy purposes.

[110] Beyond visual maps, expert survey-based studies and similar approaches could identify understudied or neglected societally important areas, topic-level problems (such as stigma or dogma), or potential misprioritizations.

[98] Evidence synthesis can be applied to important and, notably, both relatively urgent and certain global challenges: "climate change, energy transitions, biodiversity loss, antimicrobial resistance, poverty eradication and so on".

For example, meta-analyses can quantify what is known and identify what is not yet known[72] and place "truly innovative and highly interdisciplinary ideas" into the context of established knowledge which may enhance their impact.

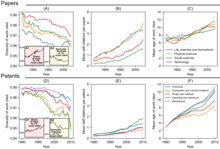

[61][19] Two metascientists reported that "structures fostering disruptive scholarship and focusing attention on novel ideas" could be important because, in a growing scientific field, citation flows disproportionately consolidate on already well-cited papers, possibly slowing and inhibiting canonical progress.
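One common way to quantify how strongly citations concentrate on a few papers is the Gini coefficient of the citation counts, where 0 means every paper is cited equally and values near 1 mean a handful of papers receive almost all citations. The Python sketch below is illustrative only: the citation counts are hypothetical, and the source does not state which concentration measure the cited study used.

```python
def gini(citation_counts):
    """Gini coefficient of non-negative citation counts (0 = perfectly equal)."""
    counts = sorted(citation_counts)
    n = len(counts)
    total = sum(counts)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted sum over the ascending-sorted counts (ranks start at 1).
    ranked_sum = sum(rank * c for rank, c in enumerate(counts, start=1))
    return 2 * ranked_sum / (n * total) - (n + 1) / n


# Hypothetical fields: one with evenly spread citations, one dominated by a single paper.
even_field = [5, 5, 5, 5]
concentrated_field = [0, 0, 0, 10]

print(gini(even_field))
print(gini(concentrated_field))
```

Tracking such a coefficient over time for a field would show whether citation flows are becoming more concentrated as the field grows.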

[117][118] A study concluded that to enhance the impact of truly innovative and highly interdisciplinary novel ideas, they should be placed in the context of established knowledge.

[119][120] Highly productive partnerships are also a topic of research, for example "super-ties" of frequent co-authorship between two individuals with complementary skills, which are likely also the result of other factors such as mutual trust, conviction, commitment and fun.

Pre-registration requires the submission of a registered report, which is then accepted for publication or rejected by a journal based on theoretical justification, experimental design, and the proposed statistical analysis.

Pre-registration of studies serves to prevent publication bias (e.g. not publishing negative results), reduce data dredging, and increase replicability.

Such work can be called "applied metascience"[140] and may seek to explore ways to increase the quantity, quality and positive impact of research.

Medical and academic disputes date back to antiquity, and a study calls for research into "constructive and obsessive criticism" and into policies to "help strengthen social media into a vibrant forum for discussion, and not merely an arena for gladiator matches".

[167][168][169] For example, Lau et al.[167] analyzed 33 clinical trials (involving 36,974 patients) evaluating the effectiveness of intravenous streptokinase for acute myocardial infarction.
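The Lau et al. analysis is usually described as a cumulative meta-analysis, in which the pooled estimate is recomputed each time a trial is added in chronological order. The Python sketch below illustrates the general technique with fixed-effect, inverse-variance pooling of log odds ratios. The trial counts are hypothetical toy data, not figures from Lau et al., and the function name and the choice of a fixed-effect model are assumptions made for illustration.

```python
import math


def cumulative_fixed_effect(trials):
    """Cumulative fixed-effect meta-analysis of odds ratios.

    trials: (events_treated, n_treated, events_control, n_control) tuples in
    chronological order. Assumes no cell of any 2x2 table is zero.
    Returns (pooled_odds_ratio, ci_low, ci_high) after each added trial.
    """
    results = []
    sum_w = 0.0   # running sum of inverse-variance weights
    sum_wy = 0.0  # running sum of weight * log odds ratio
    for a, n1, c, n2 in trials:
        b, d = n1 - a, n2 - c
        log_or = math.log((a * d) / (b * c))
        variance = 1 / a + 1 / b + 1 / c + 1 / d
        weight = 1 / variance
        sum_w += weight
        sum_wy += weight * log_or
        pooled = sum_wy / sum_w
        se = math.sqrt(1 / sum_w)
        results.append((
            math.exp(pooled),
            math.exp(pooled - 1.96 * se),  # approximate 95% interval
            math.exp(pooled + 1.96 * se),
        ))
    return results


# Hypothetical trials (NOT data from Lau et al.), oldest first.
toy_trials = [(10, 100, 15, 100), (20, 200, 30, 200), (40, 500, 60, 500)]
results = cumulative_fixed_effect(toy_trials)

for step, (odds_ratio, low, high) in enumerate(results, start=1):
    print(f"after trial {step}: OR={odds_ratio:.2f} (95% CI {low:.2f} to {high:.2f})")
```

Because every added trial increases the total weight, the confidence interval narrows at each step; a cumulative analysis makes it visible when the pooled effect first becomes clearly distinguishable from no effect.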

A 2007 study by John Ioannidis found that it took an average of ten years for the medical community to stop referencing popular practices after their efficacy was unequivocally disproven.

[178][179] In response to concerns about publication bias and p-hacking, more than 140 psychology journals have adopted result-blind peer review, in which studies are pre-registered and published without regard for their outcome.

Richard Feynman noted that estimates of physical constants were closer to published values than would be expected by chance.

A study can be part of multiple fields, and a lower number of papers is not necessarily detrimental[51] for fields.