Content moderation

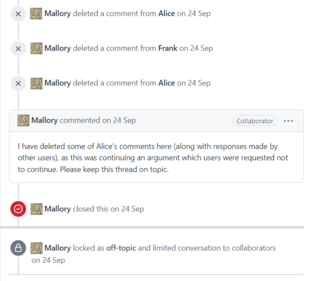

A group of people are chosen by the site's administrators (usually on a long-term basis) to act as delegates, enforcing the community rules on their behalf.

Social media platforms (e.g., Facebook, Google) are largely based in the United States, Europe, and China.[5]: 79–81

While this work may once have been done by volunteers within the online community, commercial websites now largely outsource it to specialized companies, often in low-wage areas such as India and the Philippines.

In the late 1980s and early 1990s, tech companies began to outsource jobs to foreign countries that had an educated workforce willing to work for low wages.[12]

Some large companies, such as Facebook, offer psychological support[12] and increasingly rely on artificial intelligence to filter out the most graphic and inappropriate content, but critics argue that these measures are insufficient.[4]

In February 2019, an investigative report by The Verge described poor working conditions at Cognizant's office in Phoenix, Arizona.[16]

Cognizant employees tasked with moderating content for Facebook developed mental health issues, including post-traumatic stress disorder, as a result of exposure to graphic violence, hate speech, and conspiracy theories in the videos they were instructed to evaluate.[28]

Following Elon Musk's acquisition of Twitter in October 2022, content rules have been weakened across the platform in an attempt to prioritize free speech.

Every day, billions of people decide what to share, forward, or give visibility to.

Reactive moderation depends on users of a platform or site to report content that is inappropriate or breaches community standards.

In this process, users who encounter an image or video they consider unfit can click the report button.