Multiplication algorithm

Using many parts can set the exponent arbitrarily close to 1, but the constant factor also grows, making it impractical.

Integer multiplication algorithms can also be used to multiply polynomials by means of the method of Kronecker substitution.

Some chips implement long multiplication, in hardware or in microcode, for various integer and floating-point word sizes.

A typical solution is to represent the number in a small base, b, such that, for example, 8b is a representable machine integer.

Most currently available microprocessors implement this or other similar algorithms (such as Booth encoding) for various integer and floating-point sizes in hardware multipliers or in microcode.

Such algorithms may be devised for speed, ease of calculation, or educational value, particularly when computers or multiplication tables are unavailable.

It has been a standard part of the national primary school mathematics curriculum in England and Wales since the late 1990s.

[3] Both factors are broken up ("partitioned") into their hundreds, tens and units parts, and the products of the parts are then calculated explicitly in a relatively simple multiplication-only stage, before these contributions are then totalled to give the final answer in a separate addition stage.

Its essence is the calculation of the simple multiplications separately, with all addition being left to the final gathering-up stage.

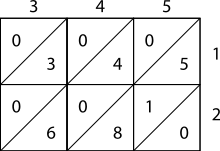

It requires the preparation of a lattice (a grid drawn on paper) which guides the calculation and separates all the multiplications from the additions.

Fibonacci described the operation as mental, using his right and left hands to carry the intermediate calculations.

It is found in Muhammad ibn Musa al-Khwarizmi's "Arithmetic", one of Leonardo's sources mentioned by Sigler, author of "Fibonacci's Liber Abaci", 2002.

[6] Its main advantages are that it can be taught quickly, requires no memorization, and can be performed using tokens, such as poker chips, if paper and pencil aren't available.

Describing the steps explicitly: The method works because multiplication is distributive, so: A more complicated example, using the figures from the earlier examples (23,958,233 and 5,830): This formula can in some cases be used, to make multiplication tasks easier to complete: In the case where

Below is a lookup table of quarter squares with the remainder discarded for the digits 0 through 18; this allows for the multiplication of numbers up to 9×9.

In prehistoric time, quarter square multiplication involved floor function; that some sources[7][8] attribute to Babylonian mathematics (2000–1600 BC).

Finally the difference of the two squares is formed and scaled by a factor of one fourth using yet another operational amplifier.

A line of research in theoretical computer science is about the number of single-bit arithmetic operations necessary to multiply two

Although using more and more parts can reduce the time spent on recursive multiplications further, the overhead from additions and digit management also grows.

[17] In 2007 the asymptotic complexity of integer multiplication was improved by the Swiss mathematician Martin Fürer of Pennsylvania State University to

Anindya De, Chandan Saha, Piyush Kurur and Ramprasad Saptharishi gave a similar algorithm using modular arithmetic in 2008 achieving the same running time.

In 2014, Harvey, Joris van der Hoeven and Lecerf[20] gave a new algorithm that achieves a running time of

In 2016, Covanov and Thomé proposed an integer multiplication algorithm based on a generalization of Fermat primes that conjecturally achieves a complexity bound of

[21] In 2018, Harvey and van der Hoeven used an approach based on the existence of short lattice vectors guaranteed by Minkowski's theorem to prove an unconditional complexity bound of

[22] In March 2019, David Harvey and Joris van der Hoeven announced their discovery of an O(n log n) multiplication algorithm.

[24] Because Schönhage and Strassen predicted that n log(n) is the "best possible" result, Harvey said: "... our work is expected to be the end of the road for this problem, although we don't know yet how to prove this rigorously.

Multiplication lies outside of AC0[p] for any prime p, meaning there is no family of constant-depth, polynomial (or even subexponential) size circuits using AND, OR, NOT, and MODp gates that can compute a product.

Or As observed by Peter Ungar in 1963, one can reduce the number of multiplications to three, using essentially the same computation as Karatsuba's algorithm.

Alternatively the Kronecker substitution technique may be used to convert the problem of multiplying polynomials into a single binary multiplication.

The quarters column is totaled and the result placed in the second workspace (a trivial move in this case).