Multithreading (computer architecture)

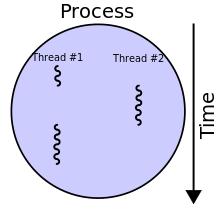

In computer architecture, multithreading is the ability of a central processing unit (CPU), or of a single core in a multi-core processor, to provide multiple threads of execution.

Hand-tuned assembly language programs that use MMX or AltiVec extensions and perform data prefetches (as a good video encoder might) rarely suffer from cache misses or idle computing resources, so hardware multithreading has little latency left to hide for them.

[2][3][4] The simplest type of multithreading occurs when one thread runs until it is blocked by an event that normally would create a long-latency stall.

Conceptually, it is similar to cooperative multitasking used in real-time operating systems, in which tasks voluntarily give up execution time when they need to wait for some type of event.
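The switch-on-stall behaviour described above can be illustrated with a toy cycle-level simulation. This is only a sketch under stated assumptions: the function name `simulate`, the instruction labels `"op"` and `"miss"`, and the default latencies are hypothetical and do not model any particular processor.

```python
def simulate(threads, miss_latency=5, switch_cost=1):
    """Toy model of switch-on-stall multithreading; returns a per-cycle trace.

    Each thread is a list of "op" (one cycle) or "miss" (one cycle to issue,
    after which the thread is blocked for `miss_latency` cycles).
    Trace entries are a thread index, "switch", or "idle".
    All parameters are illustrative assumptions, not real hardware figures.
    """
    n = len(threads)
    pc = [0] * n          # next instruction index for each thread
    ready_at = [0] * n    # first cycle at which each thread may run again
    trace = []
    current = 0
    cycle = 0

    def runnable(t):
        return pc[t] < len(threads[t]) and ready_at[t] <= cycle

    while any(pc[t] < len(threads[t]) for t in range(n)):
        if not runnable(current):
            # The running thread is blocked or finished: look for another
            # ready thread, searching round-robin from the next index.
            candidates = [(current + k) % n for k in range(1, n)]
            nxt = next((t for t in candidates if runnable(t)), None)
            if nxt is None:
                trace.append("idle")   # nothing can run this cycle
                cycle += 1
                continue
            current = nxt
            trace.extend(["switch"] * switch_cost)
            cycle += switch_cost
        instr = threads[current][pc[current]]
        pc[current] += 1
        trace.append(current)
        cycle += 1
        if instr == "miss":
            # Long-latency event: block this thread until the data arrives.
            ready_at[current] = cycle + miss_latency
    return trace


thread_a = ["op", "miss", "op"]
thread_b = ["op", "op", "op"]
print(len(simulate([thread_a])), len(simulate([thread_b])))
print(simulate([thread_a, thread_b]))
```

With these toy parameters, the two threads take 8 and 3 cycles when run alone (11 cycles back to back), but 9 cycles when the core switches to the second thread while the first waits on its miss.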

Additional hardware support for multithreading allows a thread switch to be completed in one CPU cycle, which improves performance.

Many families of microcontrollers and embedded processors have multiple register banks to allow quick context switching for interrupts.
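The idea behind multiple register banks can be sketched in a few lines. The class below is a hypothetical software model, not the design of any specific microcontroller: the name `BankedRegisterFile`, the bank count, and the register count are all assumptions chosen for illustration. The point it shows is that a context switch changes only which bank is selected, so no register contents need to be copied to memory.

```python
class BankedRegisterFile:
    """Hypothetical model of a register file with several banks."""

    def __init__(self, num_banks=2, regs_per_bank=8):
        # One independent set of registers per bank.
        self.banks = [[0] * regs_per_bank for _ in range(num_banks)]
        self.active = 0  # index of the bank currently visible to instructions

    def read(self, reg):
        return self.banks[self.active][reg]

    def write(self, reg, value):
        self.banks[self.active][reg] = value

    def switch_to(self, bank):
        # The whole "context switch": select another bank, copy nothing.
        self.active = bank


regs = BankedRegisterFile()
regs.write(0, 42)      # main program uses bank 0
regs.switch_to(1)      # interrupt arrives: handler runs on bank 1
regs.write(0, 7)       # handler freely overwrites "register 0"
regs.switch_to(0)      # return from interrupt
print(regs.read(0))    # main program's value is intact
```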

Conceptually, switching threads on every cycle (interleaved multithreading) is similar to preemptive multitasking used in operating systems; an analogy would be that the time slice given to each active thread is one CPU cycle.
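The one-cycle time slice can be illustrated with a small round-robin issue schedule. This is a minimal sketch, assuming each instruction takes one issue slot; the function name `interleave` and the instruction labels are hypothetical. As a simplification, a thread that has run out of instructions is skipped, whereas real hardware might instead issue a bubble in that slot.

```python
def interleave(threads):
    """Return the issue order when one instruction issues per cycle,
    rotating among the threads that still have work (toy model)."""
    pc = [0] * len(threads)                 # next instruction per thread
    remaining = sum(len(t) for t in threads)
    schedule = []
    t = 0
    while remaining:
        if pc[t] < len(threads[t]):
            schedule.append((t, threads[t][pc[t]]))
            pc[t] += 1
            remaining -= 1
        t = (t + 1) % len(threads)          # move to the next thread every slot
    return schedule


for cycle, (thread_id, instr) in enumerate(
    interleave([["a0", "a1"], ["b0", "b1"], ["c0"]])
):
    print(cycle, thread_id, instr)
```

In this schedule, consecutive instructions from the same thread are separated by instructions from the other threads, which is what gives a dependent instruction time for its operands to become available.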

Another area of research is which types of events should cause a thread switch: cache misses, inter-thread communication, DMA completion, and so on.

If the multithreading scheme replicates all of the software-visible state, including privileged control registers and TLBs, then it enables virtual machines to be created for each thread.

On the other hand, if only user-mode state is saved, then less hardware is required, which would allow more threads to be active at one time for the same die area or cost.
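The trade-off between replicating all state and replicating only user-mode state can be made concrete with a back-of-the-envelope calculation. Every figure below is a hypothetical assumption chosen for illustration (register counts, TLB size, and the storage budget); none is taken from a real design.

```python
def max_hardware_threads(state_budget_bits, per_thread_bits):
    """How many thread contexts fit in a fixed on-chip storage budget."""
    return state_budget_bits // per_thread_bits


# Hypothetical per-thread state sizes, in bits.
USER_STATE_BITS = 32 * 64 + 64          # 32 general registers + program counter
PRIV_STATE_BITS = 16 * 64 + 64 * 128    # 16 control registers + 64 TLB entries
FULL_STATE_BITS = USER_STATE_BITS + PRIV_STATE_BITS

BUDGET_BITS = 32 * 1024                 # hypothetical storage budget

print(max_hardware_threads(BUDGET_BITS, USER_STATE_BITS))  # user-mode state only
print(max_hardware_threads(BUDGET_BITS, FULL_STATE_BITS))  # all software-visible state
```

Under these assumed sizes, the same budget holds 15 user-mode-only contexts but only 2 fully replicated ones, which is the sense in which saving less state allows more active threads for the same area.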