Public-key cryptography

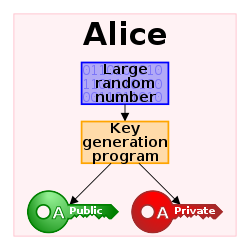

[1][2] Key pairs are generated with cryptographic algorithms based on mathematical problems termed one-way functions.

Public key algorithms are fundamental security primitives in modern cryptosystems, including applications and protocols that offer assurance of the confidentiality and authenticity of electronic communications and data storage.

This requirement is never trivial and very rapidly becomes unmanageable as the number of participants increases, or when secure channels are not available, or when, (as is sensible cryptographic practice), keys are frequently changed.

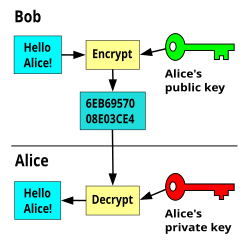

[9][10][11][12] Public-key encryption on its own also does not tell the recipient anything about who sent a message[8]: 283 [13][14]—it just conceals the content of the message.One important issue is confidence/proof that a particular public key is authentic, i.e. that it is correct and belongs to the person or entity claimed, and has not been tampered with or replaced by some (perhaps malicious) third party.

Non-repudiation systems use digital signatures to ensure that one party cannot successfully dispute its authorship of a document or communication.

Further applications built on this foundation include: digital cash, password-authenticated key agreement, time-stamping services and non-repudiation protocols.

Additionally, with the advent of quantum computing, many asymmetric key algorithms are considered vulnerable to attacks, and new quantum-resistant schemes are being developed to overcome the problem.

But other algorithms may inherently have much lower work factors, making resistance to a brute-force attack (e.g., from longer keys) irrelevant.

[18] As with all cryptographic functions, public-key implementations may be vulnerable to side-channel attacks that exploit information leakage to simplify the search for a secret key.

Encrypted messages and responses must, in all instances, be intercepted, decrypted, and re-encrypted by the attacker using the correct public keys for the different communication segments so as to avoid suspicion.

A hypothetical malicious staff member at an Internet service provider (ISP) might find a man-in-the-middle attack relatively straightforward.

[citation needed] In some advanced man-in-the-middle attacks, one side of the communication will see the original data while the other will receive a malicious variant.

Hence, man-in-the-middle attacks are only fully preventable when the communications infrastructure is physically controlled by one or both parties; such as via a wired route inside the sender's own building.

An attacker who penetrates an authority's servers and obtains its store of certificates and keys (public and private) would be able to spoof, masquerade, decrypt, and forge transactions without limit, assuming that they were able to place themselves in the communication stream.

This means that a third party could construct quite a detailed model of participants in a communication network, along with the subjects being discussed, even if the message body itself is hidden.

However, there has been a recent demonstration of messaging with encrypted headers, which obscures the identities of the sender and recipient, and significantly reduces the available metadata to a third party.

During the early history of cryptography, two parties would rely upon a key that they would exchange by means of a secure, but non-cryptographic, method such as a face-to-face meeting, or a trusted courier.

"[26] In 1970, James H. Ellis, a British cryptographer at the UK Government Communications Headquarters (GCHQ), conceived of the possibility of "non-secret encryption", (now called public key cryptography), but could see no way to implement it.

Only at the end of the evolution from Berners-Lee designing an open internet architecture for CERN, its adaptation and adoption for the Arpanet ... did public key cryptography realise its full potential.

[32] In 1977, a generalization of Cocks's scheme was independently invented by Ron Rivest, Adi Shamir and Leonard Adleman, all then at MIT.

The latter authors published their work in 1978 in Martin Gardner's Scientific American column, and the algorithm came to be known as RSA, from their initials.

Its security is connected to the extreme difficulty of factoring large integers, a problem for which there is no known efficient general technique.