Sampling (statistics)

Each observation measures one or more properties (such as weight, location, colour or mass) of independent objects or individuals.

[3] In the US, the 1936 Literary Digest prediction of a Republican win in the presidential election went badly awry, due to severe bias [1].

More than two million people responded to the study with their names obtained through magazine subscription lists and telephone directories.

It was not appreciated that these lists were heavily biased towards Republicans and the resulting sample, though very large, was deeply flawed.

Similar considerations arise when taking repeated measurements of properties of materials such as the electrical conductivity of copper.

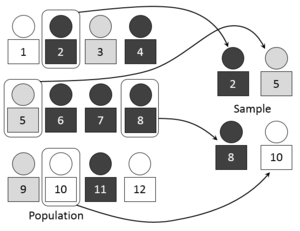

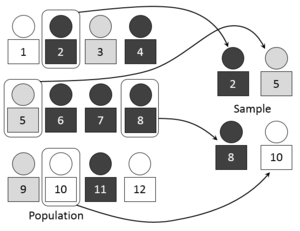

The combination of these traits makes it possible to produce unbiased estimates of population totals, by weighting sampled units according to their probability of selection.

These conditions give rise to exclusion bias, placing limits on how much information a sample can provide about the population.

For instance, a simple random sample of ten people from a given country will on average produce five men and five women, but any given trial is likely to over represent one sex and underrepresent the other.

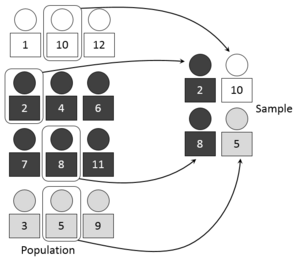

Systematic and stratified techniques attempt to overcome this problem by "using information about the population" to choose a more "representative" sample.

For example, researchers might be interested in examining whether cognitive ability as a predictor of job performance is equally applicable across racial groups.

For example, suppose we wish to sample people from a long street that starts in a poor area (house No.

Another drawback of systematic sampling is that even in scenarios where it is more accurate than SRS, its theoretical properties make it difficult to quantify that accuracy.

As described above, systematic sampling is an EPS method, because all elements have the same probability of selection (in the example given, one in ten).

[12] First, dividing the population into distinct, independent strata can enable researchers to draw inferences about specific subgroups that may be lost in a more generalized random sample.

Third, it is sometimes the case that data are more readily available for individual, pre-existing strata within a population than for the overall population; in such cases, using a stratified sampling approach may be more convenient than aggregating data across groups (though this may potentially be at odds with the previously noted importance of utilizing criterion-relevant strata).

Second, when examining multiple criteria, stratifying variables may be related to some, but not to others, further complicating the design, and potentially reducing the utility of the strata.

In addition to allowing for stratification on an ancillary variable, poststratification can be used to implement weighting, which can improve the precision of a sample's estimates.

However, this has the drawback of variable sample size, and different portions of the population may still be over- or under-represented due to chance variation in selections.

PPS sampling is commonly used for surveys of businesses, where element size varies greatly and auxiliary information is often available – for instance, a survey attempting to measure the number of guest-nights spent in hotels might use each hotel's number of rooms as an auxiliary variable.

This random element is its greatest weakness and quota versus probability has been a matter of controversy for several years.

The notion of minimax sampling is recently developed for a general class of classification rules, called class-wise smart classifiers.

[15] The target population for advertisements can be selected by characteristics like location, age, sex, income, occupation, education, or interests using tools provided by the social medium.

After following the link and completing the survey, the volunteer submits the data to be included in the sample population.

[16] This longitudinal sampling-method allows estimates of changes in the population, for example with regard to chronic illness to job stress to weekly food expenditures.

Panel sampling can also be used to inform researchers about within-person health changes due to age or to help explain changes in continuous dependent variables such as spousal interaction.

[17] There have been several proposed methods of analyzing panel data, including MANOVA, growth curves, and structural equation modeling with lagged effects.

This is often addressed by improving survey design, offering incentives, and conducting follow-up studies which make a repeated attempt to contact the unresponsive and to characterize their similarities and differences with the rest of the frame.

More generally, data should usually be weighted if the sample design does not give each individual an equal chance of being selected.

Similarly, households with more than one telephone line have a greater chance of being selected in a random digit dialing sample, and weights can adjust for this.

The textbook by Groves et alia provides an overview of survey methodology, including recent literature on questionnaire development (informed by cognitive psychology) : The other books focus on the statistical theory of survey sampling and require some knowledge of basic statistics, as discussed in the following textbooks: The elementary book by Scheaffer et alia uses quadratic equations from high-school algebra: More mathematical statistics is required for Lohr, for Särndal et alia, and for Cochran:[27] The historically important books by Deming and Kish remain valuable for insights for social scientists (particularly about the U.S. census and the Institute for Social Research at the University of Michigan):