Replication crisis

The replication crisis is frequently discussed in relation to psychology and medicine, where considerable efforts have been undertaken to reinvestigate classic results, both to determine whether they are reliable and, if they are not, to identify why they fail to replicate.

[45][46] In the social sciences, the blog Data Colada (whose three authors coined the term "p-hacking" in a 2014 paper) has been credited with contributing to the start of the replication crisis.

[51] Spellman also identifies reasons why the repetition of these criticisms and concerns in recent years led to a full-blown crisis and to challenges to the status quo.

According to Spellman, these factors, coupled with increasingly limited resources and misaligned incentives for doing scientific work, led to a crisis in psychology and other fields.

[5][99] Fanelli (2010)[100] found that 91.5% of psychiatry/psychology studies confirmed the effects they were looking for, and concluded that the odds of obtaining such a positive result were around five times higher than in fields such as astronomy or geosciences.
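Because the comparison above is stated in terms of odds rather than probabilities, a small worked example may help. The sketch below treats the "around five times higher" figure as a simple, unadjusted odds ratio, which is an assumption made here for illustration (the published figure may come from a model that accounts for other factors), and back-calculates the positive-result rate that such a ratio would imply for a comparison field.

```python
def odds(p: float) -> float:
    """Convert a probability into odds, p / (1 - p)."""
    return p / (1 - p)


def prob_from_odds(o: float) -> float:
    """Convert odds back into a probability, o / (1 + o)."""
    return o / (1 + o)


# Share of positive results reported for psychiatry/psychology (from the text).
psych_rate = 0.915
# ASSUMPTION: "around five times higher odds" treated as an exact, unadjusted odds ratio.
odds_ratio = 5.0

psych_odds = odds(psych_rate)                  # roughly 10.8 positive results per negative one
implied_other_odds = psych_odds / odds_ratio   # odds in a hypothetical comparison field
implied_other_rate = prob_from_odds(implied_other_odds)

print(f"psychology odds: {psych_odds:.2f}")
print(f"implied comparison-field positive rate: {implied_other_rate:.1%}")
```

Under these assumptions the implied comparison rate is about 68%, which illustrates that five times higher odds corresponds to a much smaller gap in raw percentages when both rates are high.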

A major cause of low reproducibility is publication bias, which stems from the fact that statistically non-significant results and seemingly unoriginal replications are rarely published.

Only a very small proportion of academic journals in psychology and neuroscience explicitly welcomed submissions of replication studies in their aims and scope or in their instructions to authors.

According to philosopher of science Felipe Romero, this tends to produce "misleading literature and biased meta-analytic studies".[27] Moreover, when publication bias is considered along with the fact that a majority of tested hypotheses might be false a priori, it is plausible that a considerable proportion of research findings are false positives, as shown by metascientist John Ioannidis.
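The way publication bias distorts the literature, and therefore any meta-analysis built on it, can be illustrated with a small simulation. The sketch below assumes a hypothetical true standardized effect of 0.2, two groups of 30 participants per study, a normal approximation to each study's sampling error, and a filter under which only results significant in the expected direction are published. All of these parameters are illustrative assumptions, not values from the studies cited above.

```python
import random
import statistics


def simulate_published_effects(true_effect=0.2, n_per_group=30, n_studies=5000, seed=1):
    """Simulate many two-group studies and keep only the 'significant' ones.

    Each study's estimated standardized mean difference is drawn from a normal
    distribution centred on the true effect, with standard error approximated
    as sqrt(2 / n_per_group). All parameter values are hypothetical.
    """
    rng = random.Random(seed)
    se = (2 / n_per_group) ** 0.5
    all_estimates, published = [], []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)
        all_estimates.append(estimate)
        # Publication filter: only positive results with z > 1.96 get published.
        if estimate / se > 1.96:
            published.append(estimate)
    return all_estimates, published


all_estimates, published = simulate_published_effects()
print(f"mean of all studies:       {statistics.mean(all_estimates):.3f}")
print(f"mean of published studies: {statistics.mean(published):.3f}")
print(f"share published:           {len(published) / len(all_estimates):.1%}")
```

Averaging only the published studies, as a naive meta-analysis of this literature would, overstates the true effect severalfold in this setup, while the average over all studies stays close to the assumed value.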

In the context of publication bias, this can mean adopting behaviors aimed at making results positive or statistically significant, often at the expense of their validity (see questionable research practices (QRPs), section 4.3).
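One common behavior of this kind is measuring several outcomes and reporting whichever one reaches significance. The sketch below, which assumes the outcomes are independent and that the null hypothesis is true for all of them, compares the analytic chance of at least one false positive, 1 − (1 − α)^k, with a simulation in which each null p-value is drawn uniformly on [0, 1]. Real outcome measures are usually correlated, so the inflation shown here is an upper-end illustration rather than a measured rate.

```python
import random


def analytic_false_positive_rate(k: int, alpha: float = 0.05) -> float:
    """Chance that at least one of k independent null tests gives p < alpha."""
    return 1 - (1 - alpha) ** k


def simulated_false_positive_rate(k: int, alpha: float = 0.05,
                                  n_studies: int = 20000, seed: int = 7) -> float:
    """Fraction of simulated null studies in which *some* outcome is 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # Under a true null hypothesis, each p-value is uniform on [0, 1].
        p_values = [rng.random() for _ in range(k)]
        # The researcher reports whichever outcome "worked".
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


for k in (1, 3, 5, 10):
    print(k, round(analytic_false_positive_rate(k), 3), simulated_false_positive_rate(k))
```

With five independent outcomes, the nominal 5% false-positive rate rises to roughly 23%.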

According to Ravetz, quality in science is maintained only when there is a community of scholars, linked by a set of shared norms and standards, who are willing and able to hold each other accountable.

[88][89] The aforementioned study of empirical findings in the Strategic Management Journal found that 70% of 88 articles could not be replicated due to a lack of sufficient information for data or procedures.

[131] Multiverse analysis, a method that makes inferences based on all plausible data-processing pipelines, offers a way to address the problem of analytical flexibility.
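The core idea of multiverse analysis can be sketched in a few lines: enumerate every combination of defensible processing choices, run the same analysis in each resulting "universe", and inspect the whole distribution of results instead of a single hand-picked one. The toy reaction-time data, the outlier rules, and the choice of transformation below are all hypothetical, and a standardized mean difference stands in for whatever statistic a real study would report.

```python
import itertools
import math
import statistics

# Hypothetical toy data: reaction times (ms) for two conditions.
control = [512, 498, 530, 545, 501, 489, 760, 515, 522, 508]
treatment = [540, 555, 529, 561, 548, 950, 537, 552, 544, 533]


def exclude_outliers(values, z_cutoff):
    """Drop values more than z_cutoff SDs from the sample mean (None keeps all)."""
    if z_cutoff is None:
        return list(values)
    m, s = statistics.mean(values), statistics.stdev(values)
    return [v for v in values if abs(v - m) / s <= z_cutoff]


def transform(values, kind):
    """Optionally log-transform the data."""
    return [math.log(v) for v in values] if kind == "log" else list(values)


# Each combination of these choices defines one "universe" (one pipeline).
outlier_rules = [None, 3.0, 2.5]
transforms = ["raw", "log"]


def run_multiverse():
    results = {}
    for z_cutoff, kind in itertools.product(outlier_rules, transforms):
        c = transform(exclude_outliers(control, z_cutoff), kind)
        t = transform(exclude_outliers(treatment, z_cutoff), kind)
        # Standardize the mean difference so raw and log universes are comparable.
        pooled_sd = math.sqrt((statistics.variance(c) + statistics.variance(t)) / 2)
        results[(z_cutoff, kind)] = (statistics.mean(t) - statistics.mean(c)) / pooled_sd
    return results


for choices, effect in run_multiverse().items():
    print(choices, round(effect, 2))
```

In this toy example the direction of the effect is stable across universes, but its size changes several-fold depending on the outlier rule, which is exactly the kind of analytical flexibility a single reported pipeline would hide.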

[140] According to Deakin University professor Tom Stanley and colleagues, one plausible reason studies fail to replicate is low statistical power.

[143] In light of these results, it is plausible that very low average statistical power is a major reason for widespread failures to replicate in several scientific fields.
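To make "low statistical power" concrete, the sketch below approximates the power of a two-sided, two-sample test using the normal approximation Φ(d·√(n/2) − z₁₋α/₂), ignoring the negligible chance of rejecting in the wrong direction. The effect size d = 0.4 and the group size of 20 are hypothetical values chosen for illustration; an exact calculation would use the noncentral t distribution and gives slightly different numbers.

```python
from statistics import NormalDist


def approx_power_two_sample(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample test for standardized effect d.

    Normal approximation; the tiny probability of a significant result in the
    wrong direction is ignored.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    noncentrality = d * (n_per_group / 2) ** 0.5
    return NormalDist().cdf(noncentrality - z_crit)


def n_for_power(d: float, target: float = 0.8, alpha: float = 0.05) -> int:
    """Smallest per-group sample size reaching the target power (same approximation)."""
    n = 2
    while approx_power_two_sample(d, n, alpha) < target:
        n += 1
    return n


# Hypothetical study: d = 0.4 with 20 participants per group.
print(f"power with n = 20 per group: {approx_power_two_sample(0.4, 20):.2f}")
print(f"n per group for 80% power:   {n_for_power(0.4)}")
```

Under this approximation, such a study would detect a real effect only about a quarter of the time, and roughly 100 participants per group would be needed to reach the conventional 80% power.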

When combined with sampling error, heterogeneity yields a study-to-study standard deviation even larger than the median effect size of the 200 meta-analyses they investigated.
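The way heterogeneity and sampling error combine can be written as a simple formula: if true effects vary across studies with standard deviation τ and each study adds sampling error with standard error SE, the observed effects vary with standard deviation √(τ² + SE²). The sketch below checks this formula against a simulation. The values τ = 0.3, SE = 0.2 and the mean effect of 0.35 are hypothetical and are not taken from the 200 meta-analyses mentioned above.

```python
import math
import random
import statistics


def total_between_study_sd(tau: float, se: float) -> float:
    """SD of observed effects when true effects vary (tau) and each study
    also carries independent sampling error (se)."""
    return math.sqrt(tau ** 2 + se ** 2)


def simulate_observed_sd(mean_effect=0.35, tau=0.3, se=0.2, n_studies=50000, seed=3):
    """Draw a true effect per study, then an observed estimate around it."""
    rng = random.Random(seed)
    observed = []
    for _ in range(n_studies):
        true_effect = rng.gauss(mean_effect, tau)      # heterogeneity
        observed.append(rng.gauss(true_effect, se))    # sampling error
    return statistics.stdev(observed)


print(f"formula:    {total_between_study_sd(0.3, 0.2):.3f}")
print(f"simulation: {simulate_observed_sd():.3f}")
```

With these hypothetical inputs, the spread of observed effects (about 0.36) already exceeds the assumed mean effect, so an exact replication could easily land far from the original estimate even if nothing went wrong in either study.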

[157][158][159] New York University professor Jay Van Bavel and colleagues argue that a further reason findings are difficult to replicate is the sensitivity to context of certain psychological effects.

[160] Van Bavel and colleagues tested the influence of context sensitivity by reanalyzing the data of the widely cited Reproducibility Project carried out by the Open Science Collaboration.

[23][167] According to philosopher Alexander Bird, a possible reason for the low rates of replicability in certain scientific fields is that a majority of tested hypotheses are false a priori.

For example, the proportion of false positives rises to between 55.2% and 57.6% when calculated using estimates of average power between 34.1% and 36.4% for psychology studies, as provided by Stanley and colleagues in their analysis of 200 meta-analyses in the field.
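The base-rate reasoning behind this argument can be made explicit. Among significant results, true positives occur at rate prior × power and false positives at rate (1 − prior) × α, so the false-positive share is (1 − prior)·α / (prior·power + (1 − prior)·α). The sketch below assumes, for illustration only, that 10% of tested hypotheses are true and that α = 0.05; these inputs are assumptions made here, not figures given in this section.

```python
def false_positive_share(prior_true: float, power: float, alpha: float = 0.05) -> float:
    """Share of significant results that are false positives.

    prior_true: fraction of tested hypotheses that are actually true (assumed).
    power:      probability that a true effect yields a significant result.
    alpha:      probability that a null effect yields a significant result.
    """
    true_pos = prior_true * power
    false_pos = (1 - prior_true) * alpha
    return false_pos / (true_pos + false_pos)


# ASSUMPTION: 10% of tested hypotheses are true; alpha = 0.05.
for power in (0.80, 0.364, 0.341):
    print(f"power {power:.3f}: false-positive share {false_positive_share(0.10, power):.1%}")
```

With 80% power, 36% of significant results are false positives under these assumptions; with the lower power estimates for psychology, the share rises into the mid-50s, in the same range as the figures above, though the exact percentages depend on the inputs used.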

[173] Research supporting this concern is sparse, but a nationally representative survey in Germany showed that more than 75% of Germans had not heard of replication failures in science.

[175][176][177][178] Fiske called these unidentified "adversaries" names such as "methodological terrorist" and "self-appointed data police", saying that criticism of psychology should be expressed only in private or by contacting the journals.

[175] Columbia University statistician and political scientist Andrew Gelman responded to Fiske, saying that she had found herself willing to tolerate the "dead paradigm" of faulty statistics and had refused to retract publications even when errors were pointed out.

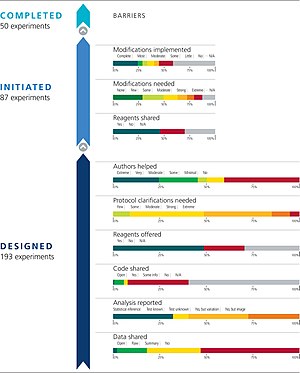

Methods of addressing the crisis include pre-registration of scientific studies and clinical trials as well as the founding of organizations such as CONSORT and the EQUATOR Network that issue guidelines for methodology and reporting.

In registered reports, once the methods and analysis plan are vetted through peer review, publication of the findings is provisionally guaranteed, provided that the authors follow the proposed protocol.

Psychologist Daniel Kahneman argued that, in psychology, the original authors should be involved in the replication effort because the published methods are often too vague.

The replication crisis has led to the formation and development of various large-scale collaborative communities that pool their resources to address a single question across cultures, countries and disciplines.

Psychologist Marcus R. Munafò and epidemiologist George Davey Smith argue, in a piece published in Nature, that research should emphasize triangulation, not just replication, to protect against flawed ideas.

Replication efforts should seek not just to support or question the original findings, but also to replace them with revised, stronger theories with greater explanatory power.

Whether findings were reproducible and replicable was the best predictor of their generalisability beyond historical and geographical contexts, indicating that, for the social sciences, results from a certain time period and place can meaningfully inform conclusions about what is universally present in individuals.

[236] Online repositories where data, protocols, and findings can be stored and evaluated by the public seek to improve the integrity and reproducibility of research.