Generative grammar

Generative grammar is a research tradition in linguistics that aims to explain the cognitive basis of language by formulating and testing explicit models of humans' subconscious grammatical knowledge.

Generative linguists, or generativists (/ˈdʒɛnərətɪvɪsts/),[1] tend to share certain working assumptions such as the competence–performance distinction and the notion that some domain-specific aspects of grammar are partly innate in humans.

Generative grammar began in the late 1950s with the work of Noam Chomsky, having roots in earlier approaches such as structural linguistics.

The earliest version of Chomsky's model was called transformational grammar, with subsequent iterations known as government and binding theory and the Minimalist program.

What unites these approaches is the goal of uncovering the cognitive basis of language by formulating and testing explicit models of humans' subconscious grammatical knowledge.

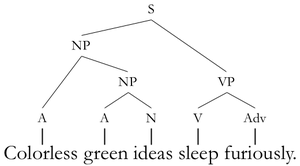

[7][8] Generative grammar proposes models of language consisting of explicit rule systems, which make testable, falsifiable predictions.
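As a rough illustration of what an explicit rule system that makes testable predictions can look like, the sketch below defines a toy set of phrase-structure rules and checks whether a word string can be derived from them. The rules, the lexicon, and the function names (`derives`, `matches`, `is_grammatical`) are hypothetical and chosen only for this example; they are not taken from any particular generative theory.

```python
# Toy phrase-structure rules (hypothetical, for illustration only).
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"], ["V"]],
}

# Toy lexicon mapping each word category to the words it licenses.
LEXICON = {
    "Det": {"the", "a"},
    "N": {"dog", "cat"},
    "V": {"sees", "sleeps"},
}


def derives(symbol, words):
    """Return True if `symbol` can be rewritten as exactly `words`."""
    if symbol in LEXICON:
        return len(words) == 1 and words[0] in LEXICON[symbol]
    return any(matches(rhs, words) for rhs in RULES.get(symbol, []))


def matches(symbols, words):
    """Return True if the sequence `symbols` can derive exactly `words`."""
    if not symbols:
        return not words
    first, rest = symbols[0], symbols[1:]
    # Try every split: `first` covers words[:i], `rest` covers words[i:].
    # Each remaining symbol needs at least one word, which bounds i.
    return any(
        derives(first, words[:i]) and matches(rest, words[i:])
        for i in range(1, len(words) - len(rest) + 1)
    )


def is_grammatical(sentence):
    """Predict whether the toy grammar generates `sentence`."""
    return derives("S", sentence.split())


if __name__ == "__main__":
    for s in ["the dog sees a cat", "dog the sleeps", "the dog sleeps the cat"]:
        print(s, "->", is_grammatical(s))
```

Each call to `is_grammatical` is a prediction that can be compared with speakers' judgments. This toy grammar rejects "dog the sleeps" but accepts "the dog sleeps the cat", which speakers would likely judge as odd; a mismatch of that kind is what would count as evidence against the rules as stated.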

[13] For example, generative theories generally provide competence-based explanations for why English speakers would judge the sentence in (1) as odd.

[22][23][24] The empirical basis of poverty of the stimulus arguments has been challenged by Geoffrey Pullum and others, leading to back-and-forth debate in the language acquisition literature.

One notable hypothesis, proposed by Hagit Borer, holds that the fundamental syntactic operations are universal and that all variation arises from different feature specifications in the lexicon.

[21][29] In a 2002 paper, Noam Chomsky, Marc Hauser and W. Tecumseh Fitch proposed that universal grammar consists solely of the capacity for hierarchical phrase structure.

For example, research in phonology includes work on phonotactic rules that govern which phonemes can be combined, as well as rules that determine the placement of stress, tone, and other suprasegmental elements.

[34] One notable approach is Fred Lerdahl and Ray Jackendoff's Generative theory of tonal music, which formalized and extended ideas from Schenkerian analysis.

After the linguistics wars of the late 1960s and early 1970s, Chomsky developed a revised model of syntax called government and binding theory, which eventually grew into Minimalism.

In the 1990s, this approach was largely replaced by Optimality theory, which was able to capture generalizations, known as conspiracies, that had to be stipulated in SPE phonology.

Subsequent work by Barbara Partee, Irene Heim, Tanya Reinhart, and others showed that the key insights of Montague Grammar could be incorporated into more syntactically plausible systems.