Symmetric matrix

Every square diagonal matrix is symmetric, since all off-diagonal elements are zero.

Similarly, in characteristic different from 2, each diagonal element of a skew-symmetric matrix must be zero, since each is its own negative.
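A square matrix $A$ is symmetric when $A = A^{\mathsf T}$ and skew-symmetric when $A^{\mathsf T} = -A$. The minimal NumPy sketch below checks both conditions for real matrices. The helper names `is_symmetric` and `is_skew_symmetric` are hypothetical and chosen only for illustration. It also confirms the two observations above: a diagonal matrix passes the symmetry test, and over the reals a skew-symmetric matrix has a zero diagonal.

```python
import numpy as np

def is_symmetric(A, tol=1e-12):
    """True if A is square and equals its transpose (up to tol)."""
    A = np.asarray(A)
    return A.ndim == 2 and A.shape[0] == A.shape[1] and np.allclose(A, A.T, atol=tol)

def is_skew_symmetric(A, tol=1e-12):
    """True if A is square and equals the negative of its transpose (up to tol)."""
    A = np.asarray(A)
    return A.ndim == 2 and A.shape[0] == A.shape[1] and np.allclose(A, -A.T, atol=tol)

# Every diagonal matrix is symmetric: all off-diagonal entries are zero.
D = np.diag([3.0, -1.0, 7.0])

# A real skew-symmetric matrix: a_ij = -a_ji, so each a_ii = -a_ii = 0.
K = np.array([[0.0, 2.0, -5.0],
              [-2.0, 0.0, 1.5],
              [5.0, -1.5, 0.0]])

print(is_symmetric(D))           # True
print(is_skew_symmetric(K))      # True
print(np.all(np.diag(K) == 0))   # True
```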

In linear algebra, a real symmetric matrix represents a self-adjoint operator[1] with respect to an orthonormal basis of a real inner product space.

The corresponding object for a complex inner product space is a Hermitian matrix with complex-valued entries, which is equal to its conjugate transpose.

Therefore, in linear algebra over the complex numbers, it is often assumed that a symmetric matrix refers to one which has real-valued entries.

Symmetric matrices appear naturally in a variety of applications, and typical numerical linear algebra software makes special accommodations for them.

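A symmetric $n \times n$ matrix is determined by $\tfrac{1}{2}n(n+1)$ scalars (the number of entries on or above the main diagonal).

Similarly, a skew-symmetric $n \times n$ matrix is determined by $\tfrac{1}{2}n(n-1)$ scalars (the number of entries above the main diagonal).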
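A symmetric matrix is determined by the entries on or above its diagonal. It can therefore be stored in a flat array of $n(n+1)/2$ numbers, which is the idea behind the "packed" storage formats that numerical libraries offer. The sketch below uses a row-by-row upper-triangle layout chosen only for illustration. The names `pack_upper` and `unpack_symmetric` are hypothetical, not a library API, so the code illustrates the counting argument rather than any particular library's format.

```python
import numpy as np

def pack_upper(A):
    """Store the entries on or above the diagonal of a square matrix, row by row."""
    n = A.shape[0]
    rows, cols = np.triu_indices(n)  # upper-triangle indices in row-major order
    return A[rows, cols]

def unpack_symmetric(packed, n):
    """Rebuild the full symmetric n x n matrix from its packed upper triangle."""
    if packed.size != n * (n + 1) // 2:
        raise ValueError("expected n(n+1)/2 packed entries")
    A = np.zeros((n, n), dtype=packed.dtype)
    rows, cols = np.triu_indices(n)
    A[rows, cols] = packed
    A[cols, rows] = packed  # mirror across the diagonal: a_ji = a_ij
    return A

S = np.array([[4.0, 1.0, 2.0],
              [1.0, 5.0, 3.0],
              [2.0, 3.0, 6.0]])
p = pack_upper(S)
print(p)                                          # [4. 1. 2. 5. 3. 6.]
print(p.size == 3 * 4 // 2)                       # True: n(n+1)/2 = 6 scalars for n = 3
print(np.array_equal(unpack_symmetric(p, 3), S))  # True

# A skew-symmetric matrix needs only the n(n-1)/2 entries strictly above the diagonal.
print(len(np.triu_indices(3, k=1)[0]))            # 3
```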

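A real $n \times n$ matrix $A$ is symmetric if and only if $\langle Ax, y \rangle = \langle x, Ay \rangle$ for all $x, y \in \mathbb{R}^n$, where $\langle \cdot, \cdot \rangle$ denotes the standard inner product. Since this definition is independent of the choice of basis, symmetry is a property that depends only on the linear operator $A$ and a choice of inner product.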

This characterization of symmetry is useful, for example, in differential geometry: each tangent space to a manifold may be endowed with an inner product, giving rise to what is called a Riemannian manifold.

Another area where this formulation is used is the theory of Hilbert spaces.
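Under the standard dot product, a real matrix is symmetric exactly when $\langle Ax, y \rangle = \langle x, Ay \rangle$ for all vectors $x, y$. This can be spot-checked numerically. The sketch below assumes random test vectors, so a pass is evidence rather than proof, while a failure shows (up to rounding) that $A$ is not symmetric. The helper `is_self_adjoint_sample` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(0)

def is_self_adjoint_sample(A, trials=20, tol=1e-9):
    """Spot-check <Ax, y> == <x, Ay> on random vectors (standard dot product).

    Passing is evidence of symmetry, not a proof; a failure shows A is not
    symmetric (up to rounding).
    """
    n = A.shape[0]
    for _ in range(trials):
        x = rng.standard_normal(n)
        y = rng.standard_normal(n)
        if not np.isclose(np.dot(A @ x, y), np.dot(x, A @ y), atol=tol):
            return False
    return True

M = rng.standard_normal((4, 4))
S = M + M.T                        # symmetric by construction
print(is_self_adjoint_sample(S))   # True
print(is_self_adjoint_sample(M))   # False (almost surely, for a random M)
```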

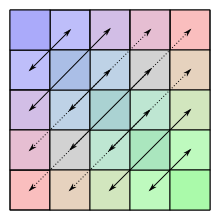

The finite-dimensional spectral theorem says that any symmetric matrix whose entries are real can be diagonalized by an orthogonal matrix.
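NumPy's `numpy.linalg.eigh` is intended for real symmetric (and complex Hermitian) matrices. It returns real eigenvalues in ascending order together with orthonormal eigenvectors, which makes the statement easy to check. With $Q$ the matrix of eigenvectors, $Q^{\mathsf T} Q = I$ and $Q^{\mathsf T} A Q$ is diagonal. The particular matrix below is only an illustrative choice.

```python
import numpy as np

A = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])

# eigh assumes A is symmetric and reads only its lower triangle (default UPLO='L').
eigenvalues, Q = np.linalg.eigh(A)   # eigenvalues are approximately [1, 2, 4]

D = Q.T @ A @ Q                                        # should be diagonal
print(np.allclose(Q.T @ Q, np.eye(3)))                 # True: Q is orthogonal
print(np.allclose(D, np.diag(eigenvalues)))            # True: Q^T A Q = diag(eigenvalues)
print(np.allclose(A, Q @ np.diag(eigenvalues) @ Q.T))  # True
```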

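If $A$ and $B$ are $n \times n$ real symmetric matrices that commute, then they can be simultaneously diagonalized by an orthogonal matrix:[2] there exists a basis of $\mathbb{R}^n$ such that every element of the basis is an eigenvector of both $A$ and $B$.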
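The illustration below assumes the easy special case in which a real symmetric $A$ has distinct eigenvalues. In that case each eigenspace of $A$ is one-dimensional, and a symmetric $B$ that commutes with $A$ maps each eigenspace into itself. The orthogonal matrix that diagonalizes $A$ therefore also diagonalizes $B$. Here $B$ is built as a polynomial in $A$ only to guarantee that the two commute. The general case with repeated eigenvalues needs an extra step (diagonalizing $B$ within each eigenspace of $A$), which this sketch omits.

```python
import numpy as np

A = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])    # symmetric, eigenvalues 1, 2, 4 (distinct)
B = A @ A - 4.0 * A + np.eye(3)    # polynomial in A: symmetric and commutes with A

print(np.allclose(A @ B, B @ A))   # True: A and B commute

_, Q = np.linalg.eigh(A)           # orthonormal eigenvectors of A

# The same orthogonal Q diagonalizes both matrices.
DA = Q.T @ A @ Q
DB = Q.T @ B @ Q
print(np.allclose(DA, np.diag(np.diag(DA))))  # True
print(np.allclose(DB, np.diag(np.diag(DB))))  # True
```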

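(In fact, the eigenvalues are the entries of the diagonal matrix $D = Q^{\mathsf T} A Q$, where $Q$ is the orthogonal matrix provided by the spectral theorem. Hence every eigenvalue of a real symmetric matrix is real, and $D$ is uniquely determined by $A$ up to the order of its entries.)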

Essentially, the property of being symmetric for real matrices corresponds to the property of being Hermitian for complex matrices.

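Every complex symmetric matrix $A$ can be diagonalized using a unitary matrix: there is a unitary matrix $U$ such that $U A U^{\mathsf T}$ is a real diagonal matrix with non-negative entries. This result is known as the Autonne–Takagi factorization.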

It was originally proved by Léon Autonne (1915) and Teiji Takagi (1925) and rediscovered with different proofs by several other mathematicians.

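To see this, note that $B = A^{\dagger} A$ is Hermitian and positive semi-definite, so there is a unitary matrix $V$ such that $V^{\dagger} B V$ is diagonal with non-negative real entries. Then $C = V^{\mathsf T} A V$ is complex symmetric and $C^{\dagger} C = V^{\dagger} B V$ is real. Writing $C = X + iY$ with $X$ and $Y$ real symmetric gives $C^{\dagger} C = X^2 + Y^2 + i(XY - YX)$, so $XY = YX$. Since $X$ and $Y$ commute, there is a real orthogonal matrix $W$ such that both $W X W^{\mathsf T}$ and $W Y W^{\mathsf T}$ are diagonal. Setting $U = W V^{\mathsf T}$ (a unitary matrix), the matrix $U A U^{\mathsf T}$ is complex diagonal. After pre-multiplying $U$ by a suitable diagonal unitary matrix (which preserves unitarity of $U$), the diagonal entries of $U A U^{\mathsf T}$ can be made real and non-negative.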

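(Note: regarding the eigendecomposition of a complex symmetric matrix $A$, the Jordan normal form of $A$ may not be diagonal, so $A$ may not be diagonalizable by any similarity transformation.)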

Using the Jordan normal form, one can prove that every square real matrix can be written as a product of two real symmetric matrices, and every square complex matrix can be written as a product of two complex symmetric matrices.

Singular matrices can also be factored, but not uniquely.

Cholesky decomposition states that every real positive-definite symmetric matrix $A$ is the product of a lower-triangular matrix $L$ and its transpose, $A = L L^{\mathsf T}$.
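For a symmetric positive-definite $A$, the lower-triangular factor $L$ in $A = L L^{\mathsf T}$ can be computed row by row from the identity $a_{ij} = \sum_{k \le j} l_{ik} l_{jk}$ (for $i \ge j$). The sketch below is a plain, unpivoted Cholesky–Banachiewicz loop written for clarity rather than speed. `cholesky_lower` is a hypothetical name; in practice a library routine such as `numpy.linalg.cholesky` (which also returns the lower-triangular factor) would be preferred.

```python
import math

def cholesky_lower(A):
    """Return lower-triangular L (list of lists) with A = L L^T.

    A is a symmetric matrix given as a list of lists. A ValueError is raised
    when a non-positive pivot appears, which (in exact arithmetic) happens
    exactly when A is not positive definite.
    """
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            # Contribution of the already-computed columns 0..j-1.
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                pivot = A[i][i] - s
                if pivot <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][i] = math.sqrt(pivot)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L

A = [[4, 12, -16],
     [12, 37, -43],
     [-16, -43, 98]]
print(cholesky_lower(A))  # [[2.0, 0.0, 0.0], [6.0, 1.0, 0.0], [-8.0, 5.0, 3.0]]
```

An indefinite input such as `[[1, 2], [2, 1]]` produces a negative pivot at the second step and raises `ValueError`.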

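If the matrix is symmetric but indefinite, it may still be decomposed as $P A P^{\mathsf T} = L D L^{\mathsf T}$. Here $P$ is a permutation matrix (arising from the need to pivot), $L$ is a unit lower-triangular matrix, and $D$ is a direct sum of symmetric $1 \times 1$ and $2 \times 2$ blocks. This is called the Bunch–Kaufman decomposition.[6]

A general (complex) symmetric matrix may be defective and thus not diagonalizable.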
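A small example of a complex symmetric matrix that is not diagonalizable is $A = \begin{pmatrix} 1 & i \\ i & -1 \end{pmatrix}$. It is nonzero but satisfies $A^2 = 0$, so its only eigenvalue is $0$. If it were diagonalizable, it would be similar to the zero matrix and hence equal to it. The NumPy check below only confirms this arithmetic.

```python
import numpy as np

# Complex symmetric (A == A.T) but not Hermitian (A != A.conj().T).
A = np.array([[1, 1j],
              [1j, -1]])

print(np.array_equal(A, A.T))                   # True: symmetric
print(np.array_equal(A, A.conj().T))            # False: not Hermitian
print(np.array_equal(A @ A, np.zeros((2, 2))))  # True: A is nilpotent

# Row 2 is i times row 1, so rank(A) = 1 and the eigenspace of the
# eigenvalue 0 is only one-dimensional: A is defective.
print(np.array_equal(A[1], 1j * A[0]))          # True
```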

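Symmetric $n \times n$ matrices of real functions appear as the Hessians of twice differentiable functions of $n$ real variables (the continuity of the second derivative is not needed, contrary to common belief[7]).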

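Every quadratic form $q$ on $\mathbb{R}^n$ can be written uniquely as $q(x) = x^{\mathsf T} A x$ with a symmetric $n \times n$ matrix $A$. Because of the spectral theorem above, every quadratic form, up to the choice of an orthonormal basis of $\mathbb{R}^n$, then looks like $q(x_1, \ldots, x_n) = \sum_{i=1}^{n} \lambda_i x_i^2$ with real numbers $\lambda_i$.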
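Numerically, the change of variables $y = Q^{\mathsf T} x$, with $Q$ the orthogonal eigenvector matrix of a symmetric $A$, turns $x^{\mathsf T} A x$ into $\sum_i \lambda_i y_i^2$. The sketch also replaces a non-symmetric coefficient matrix $M$ by its symmetric part $\tfrac{1}{2}(M + M^{\mathsf T})$, which defines the same quadratic form. This is why a symmetric matrix suffices to represent every quadratic form. The specific matrices and the test vector are arbitrary choices.

```python
import numpy as np

M = np.array([[1.0, 4.0],
              [0.0, -2.0]])   # not symmetric
A = (M + M.T) / 2             # symmetric part: [[1, 2], [2, -2]]

x = np.array([0.3, -1.7])
print(np.isclose(x @ M @ x, x @ A @ x))           # True: same quadratic form

lam, Q = np.linalg.eigh(A)    # A = Q diag(lam) Q^T with Q orthogonal
y = Q.T @ x                   # coordinates of x in the eigenbasis
print(np.isclose(x @ A @ x, np.sum(lam * y**2)))  # True: q(x) = sum_i lam_i y_i^2
print(lam)                    # eigenvalues -3 and 2 (up to rounding)
```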

This is important partly because the second-order behavior of every smooth multi-variable function is described by the quadratic form belonging to the function's Hessian; this is a consequence of Taylor's theorem.

An $n \times n$ matrix $A$ is said to be symmetrizable if there exist an invertible diagonal matrix $D$ and a symmetric matrix $S$ such that $A = DS$.
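One way to search for such a $D$, assuming real entries, starts from the fact that $A = DS$ means $s_{ij} = a_{ij}/d_i$. Symmetry of $S$ then forces $a_{ij}/d_i = a_{ji}/d_j$, and in particular the zero pattern of $A$ must itself be symmetric. Starting from $d = 1$ on each connected block of the nonzero pattern, this ratio propagates the remaining $d_j$ along nonzero entries. A final check that $D^{-1} A$ is symmetric catches matrices for which the propagated values are inconsistent. This graph-walk approach and the name `symmetrizer` are illustrative choices for this sketch, not a method taken from the text.

```python
from collections import deque

import numpy as np

def symmetrizer(A, tol=1e-12):
    """Return d such that diag(d)^{-1} A is symmetric (all d_i nonzero),
    or None if no such diagonal matrix exists. Assumes A is real and square."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    nonzero = np.abs(A) > tol
    # a_ij = d_i s_ij with d_i != 0, so a_ij = 0 must imply a_ji = 0.
    if not np.array_equal(nonzero, nonzero.T):
        return None
    d = np.zeros(n)
    for start in range(n):
        if d[start] != 0.0:
            continue
        d[start] = 1.0  # each connected block gets a free scale factor
        queue = deque([start])
        while queue:
            i = queue.popleft()
            for j in range(n):
                if j != i and nonzero[i, j] and d[j] == 0.0:
                    # s_ij = s_ji  <=>  a_ij / d_i = a_ji / d_j
                    d[j] = d[i] * A[j, i] / A[i, j]
                    queue.append(j)
    S = A / d[:, None]  # divide row i by d_i, i.e. D^{-1} A
    return d if np.allclose(S, S.T) else None

D = np.diag([1.0, 2.0, 3.0])
S = np.array([[2.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 2.0]])
print(symmetrizer(D @ S))  # [1. 2. 3.]

# Not symmetrizable: a_12 a_23 a_31 = 2 but a_21 a_32 a_13 = 1,
# and for A = DS these cyclic products would have to agree.
B = np.array([[0.0, 1.0, 1.0],
              [1.0, 0.0, 1.0],
              [2.0, 1.0, 0.0]])
print(symmetrizer(B))      # None
```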

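A matrix $A = (a_{ij})$ is symmetrizable if and only if the following conditions are met:

1. $a_{ij} = 0$ implies $a_{ji} = 0$ for all $1 \le i \le j \le n$.
2. $a_{i_1 i_2} a_{i_2 i_3} \cdots a_{i_k i_1} = a_{i_2 i_1} a_{i_3 i_2} \cdots a_{i_1 i_k}$ for every finite sequence $(i_1, i_2, \ldots, i_k)$.

Other types of symmetry or pattern in square matrices have special names. See also symmetry in mathematics.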