Transmission Control Protocol

TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network.

Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the transport layer of the TCP/IP suite.

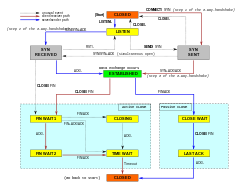

TCP is connection-oriented, meaning that the sender and receiver must first establish a connection based on agreed parameters; they do this through a three-way handshake.

In May 1974, Vint Cerf and Bob Kahn described an internetworking protocol for sharing resources using packet switching among network nodes.

A TCP connection is managed by an operating system through a resource that represents the local end-point for communications, the Internet socket.

Most implementations allocate an entry in a table that maps a session to a running operating system process.

In the last two decades, more packet reordering has been observed over the Internet,[39] which has led TCP implementations, such as the one in the Linux kernel, to adopt heuristic methods to scale the duplicate acknowledgment threshold.

The segment is retransmitted if the timer expires, with a new timeout threshold of twice the previous value, resulting in exponential backoff behavior.
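The doubling rule can be illustrated with a short sketch. The function name `backoff_schedule` and the 60-second cap `max_rto` are assumptions made for this example, not values taken from the text above; real stacks choose their own bounds.

```python
def backoff_schedule(initial_rto, retries, max_rto=60.0):
    """Return the timeout (in seconds) used for each successive attempt.

    Each expiry doubles the timeout, which gives exponential backoff.
    max_rto is a hypothetical upper bound chosen for this illustration.
    """
    timeouts = []
    rto = initial_rto
    for _ in range(retries):
        timeouts.append(rto)
        rto = min(rto * 2, max_rto)  # double, but never exceed the cap
    return timeouts


print(backoff_schedule(1.0, 5))  # [1.0, 2.0, 4.0, 8.0, 16.0]
```

Starting from a 1-second timeout, five consecutive expiries wait 1, 2, 4, 8, and 16 seconds respectively.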

This guards against excessive transmission traffic due to faulty or malicious actors, such as man-in-the-middle denial-of-service attackers.

In an environment with variable RTTs, spurious timeouts can occur:[46] if the RTT is underestimated, the RTO fires and triggers a needless retransmission and slow start.

For example, senders must be careful when calculating RTT samples for retransmitted packets; typically they use Karn's Algorithm or TCP timestamps.
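A minimal sketch of how Karn's algorithm fits into RTT estimation is shown below. The smoothing constants and the 1-second floor follow the commonly cited RFC 6298 formulas, which are an assumption here because the text above does not state them; the class name `RttEstimator` is hypothetical, and clock granularity is ignored for simplicity.

```python
class RttEstimator:
    """Smoothed RTT and RTO estimator that skips ambiguous samples."""

    ALPHA = 1 / 8    # gain for the smoothed RTT (assumed, per RFC 6298)
    BETA = 1 / 4     # gain for the RTT variance (assumed, per RFC 6298)
    K = 4            # variance multiplier in the RTO formula
    MIN_RTO = 1.0    # lower bound on the RTO, in seconds

    def __init__(self):
        self.srtt = None
        self.rttvar = None
        self.rto = 1.0  # initial RTO before any sample is taken

    def on_ack(self, rtt_sample, was_retransmitted):
        """Update the estimate from one acknowledged segment; return the RTO."""
        if was_retransmitted:
            # Karn's algorithm: the ACK could belong to either transmission,
            # so the sample is ambiguous and is not used.
            return self.rto
        if self.srtt is None:
            # First valid measurement.
            self.srtt = rtt_sample
            self.rttvar = rtt_sample / 2
        else:
            # The variance is updated first, using the previous srtt.
            deviation = abs(self.srtt - rtt_sample)
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * deviation
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt_sample
        self.rto = max(self.MIN_RTO, self.srtt + self.K * self.rttvar)
        return self.rto


est = RttEstimator()
est.on_ack(0.5, was_retransmitted=False)  # first sample sets srtt to 0.5
est.on_ack(5.0, was_retransmitted=True)   # ignored: segment was retransmitted
print(est.srtt, est.rto)
```

TCP timestamps remove the ambiguity in a different way, by letting the sender match each acknowledgment to a specific transmission, so a sender using them would not need to discard these samples.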

Enhancing TCP to reliably handle loss, minimize errors, manage congestion, and achieve high throughput in very high-speed environments is an ongoing area of research and standards development.

For best performance, the MSS should be set small enough to avoid IP fragmentation, which can lead to packet loss and excessive retransmissions.

The option value is derived from the maximum transmission unit (MTU) size of the data link layer of the networks to which the sender and receiver are directly attached.
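As a rough illustration of that derivation, the sketch below subtracts the minimum IP and TCP header sizes from the link MTU. It assumes no IP options, IPv6 extension headers, or TCP options are present; the function name `mss_for_mtu` is hypothetical.

```python
IPV4_HEADER = 20  # minimum IPv4 header size in bytes (no options)
IPV6_HEADER = 40  # fixed IPv6 header size in bytes (no extension headers)
TCP_HEADER = 20   # minimum TCP header size in bytes (no options)


def mss_for_mtu(mtu, ipv6=False):
    """Return the largest TCP payload that fits in one unfragmented packet."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    mss = mtu - ip_header - TCP_HEADER
    if mss <= 0:
        raise ValueError("MTU too small to carry a TCP segment")
    return mss


print(mss_for_mtu(1500))             # 1460 for IPv4 over a 1500-byte MTU
print(mss_for_mtu(1500, ipv6=True))  # 1440 for IPv6 over the same link
```

Because the value reflects only the directly attached links, a smaller MTU somewhere along the path can still cause fragmentation or drops.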

Relying purely on the cumulative acknowledgment scheme employed by the original TCP can lead to inefficiencies when packets are lost.
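The inefficiency can be seen in a small receiver-side sketch. It assumes segments are aligned and non-overlapping, and the function name `cumulative_acks` is hypothetical; it models only the acknowledgment numbers, not a full receiver.

```python
def cumulative_acks(arrivals, initial_seq=0):
    """Return the ACK number sent after each arriving segment.

    arrivals is a list of (sequence_number, length) pairs in arrival order.
    A cumulative ACK always names the next in-order byte expected.
    """
    next_expected = initial_seq
    buffered = {}  # out-of-order segments: sequence number -> length
    acks = []
    for seq, length in arrivals:
        if seq == next_expected:
            next_expected += length
            # Deliver any buffered segments that are now contiguous.
            while next_expected in buffered:
                next_expected += buffered.pop(next_expected)
        elif seq > next_expected:
            buffered[seq] = length
        acks.append(next_expected)
    return acks


# Four 100-byte segments; the one at sequence 100 arrives last.
print(cumulative_acks([(0, 100), (200, 100), (300, 100), (100, 100)]))
# [100, 100, 100, 400]
```

The repeated ACK for byte 100 tells the sender that something is missing, but not that the segments at 200 and 300 have already arrived.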

If it does so, the TCP sender will retransmit the segment preceding the out-of-order packet and slow its data delivery rate for that connection.

Impersonating a different IP address was not difficult prior to RFC 1948, when the initial sequence number was easily guessable.

An attacker who can eavesdrop and predict the size of the next packet to be sent can cause the receiver to accept a malicious payload without disrupting the existing connection.

TCPCT was designed to meet the needs of DNSSEC, where servers have to handle large numbers of short-lived TCP connections.

The wire data of TCP provides significant information-gathering and modification opportunities to on-path observers, as the protocol metadata is transmitted in cleartext.

Parallel connections also have congestion control operating independently of each other, rather than being able to pool information together and respond more promptly to observed network conditions;[108] TCP's aggressive initial sending patterns can cause congestion if multiple parallel connections are opened; and the per-connection fairness model leads to a monopolization of resources by applications that take this approach.

TCP Fast Open allows the transmission of data in the initial (i.e., SYN and SYN-ACK) packets, removing one RTT of latency during connection establishment.

However, TCP Fast Open has been difficult to deploy due to protocol ossification; as of 2020, no web browsers used it by default.

Reordered packets can cause duplicate acknowledgments to be sent, which, if they cross a threshold, will then trigger a spurious retransmission and congestion control.
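The threshold mechanism can be sketched from the sender's side as follows. The value of three duplicate acknowledgments is the conventional default from RFC 5681 and is an assumption here, since the text above does not give a number; the function name `fast_retransmit_points` is hypothetical.

```python
DUP_ACK_THRESHOLD = 3  # conventional default; assumed for this sketch


def fast_retransmit_points(acks, threshold=DUP_ACK_THRESHOLD):
    """Return the ACK numbers at which a retransmission would be triggered.

    acks is the sequence of cumulative ACK numbers seen by the sender.
    An ACK repeating the previous ACK number counts as a duplicate.
    """
    triggered = []
    last_ack = None
    dup_count = 0
    for ack in acks:
        if ack == last_ack:
            dup_count += 1
            if dup_count == threshold:
                triggered.append(ack)  # retransmit the segment starting here
        else:
            last_ack = ack
            dup_count = 0
    return triggered


# Mild reordering: two duplicates, below the threshold.
print(fast_retransmit_points([100, 100, 100, 400]))       # []
# Deeper reordering or loss: three duplicates reach the threshold.
print(fast_retransmit_points([100, 100, 100, 100, 400]))  # [100]
```

The sender cannot tell the two cases apart from the ACK stream alone, which is why heavy reordering can produce a retransmission even though nothing was lost.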

However, wireless links are known to experience sporadic and usually temporary losses due to fading, shadowing, handoff, interference, and other radio effects that are not strictly caused by congestion.

A number of alternative congestion control algorithms, such as Vegas, Westwood, Veno, and Santa Cruz, have been proposed to help solve the wireless problem.

The acceleration node splits the feedback loop between the sender and the receiver and thus guarantees a shorter round trip time (RTT) per packet.

Finally, some tricks such as transmitting data between two hosts that are both behind NAT (using STUN or similar systems) are far simpler without a relatively complex protocol like TCP in the way.

If the environment is predictable, a timing-based protocol such as Asynchronous Transfer Mode (ATM) can avoid TCP's retransmission overhead.

UDP-based Data Transfer Protocol (UDT) has better efficiency and fairness than TCP in networks that have a high bandwidth-delay product.
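For readers unfamiliar with the term, the bandwidth-delay product is the link bandwidth multiplied by the round-trip time, that is, the amount of data that must be in flight to keep the path full. The sketch below computes it; the function name `bdp_bytes` and the example link figures are illustrative assumptions.

```python
def bdp_bytes(bandwidth_bps, rtt_ms):
    """Return the bandwidth-delay product in bytes.

    bandwidth_bps is the link rate in bits per second and rtt_ms is the
    round-trip time in milliseconds; integer arithmetic avoids rounding.
    """
    return bandwidth_bps * rtt_ms // 8000  # /1000 for ms, /8 for bits


# A hypothetical 1 Gbit/s path with a 100 ms round-trip time.
print(bdp_bytes(1_000_000_000, 100))  # 12500000 bytes, about 12.5 MB
```

On such a path, a sender must keep roughly 12.5 MB unacknowledged to use the full capacity, which is where the behavior of the transport protocol matters most.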