Timsort

The algorithm finds subsequences of the data that are already ordered (runs) and uses them to sort the remainder more efficiently.

Timsort has been Python's standard sorting algorithm since version 2.3 (since version 3.11 using the Powersort merge policy[5]), and is used to sort arrays of non-primitive type in Java SE 7,[6] on the Android platform,[7] in GNU Octave,[8] on V8,[9] and Swift.

[10] It uses techniques from Peter McIlroy's 1993 paper "Optimistic Sorting and Information Theoretic Complexity".

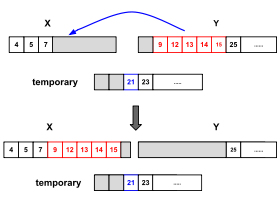

The original merge sort implementation is not in-place and it has a space overhead of N (data size).

In-place merge sort implementations exist, but have a high time overhead.

This optimization reduces the number of required element movements, the running time and the temporary space overhead in the general case.

The second stage performs a straight binary search of this region to find the exact location in R1 for x. Galloping mode is an attempt to adapt the merge algorithm to the pattern of intervals between elements in runs.

In some cases galloping mode requires more comparisons than a simple linear search.

Because merging is most efficient when the number of runs is equal to, or slightly less than, a power of two, and notably less efficient when the number of runs is slightly more than a power of two, Timsort chooses minrun to try to ensure the former condition.

[3] It is superior to Quicksort for sorting object references or pointers because these require expensive memory indirection to access data and perform comparisons and Quicksort's cache coherence benefits are greatly reduced.

In 2015, Dutch and German researchers in the EU FP7 ENVISAGE project found a bug in the standard implementation of Timsort.

The implementation preallocated a stack sufficient to sort 264 bytes of input, and avoided further overflow checks.

However, the guarantee requires the invariants to apply to every group of three consecutive runs, but the implementation only checked it for the top three.

[15] Using the KeY tool for formal verification of Java software, the researchers found that this check is not sufficient, and they were able to find run lengths (and inputs which generated those run lengths) which would result in the invariants being violated deeper in the stack after the top of the stack was merged.

The article also showed by formal methods how to establish the intended invariant by checking that the four topmost runs in the stack satisfy the two rules above.