TOP500

As of November 2024, the United States' El Capitan is the most powerful supercomputer on the TOP500 list, reaching 1,742 petaflops (1.742 exaflops) on the LINPACK benchmark.
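A LINPACK result is a measured rate for solving one dense system of linear equations. The sketch below is a toy, single-threaded illustration in pure Python, not the HPL code used for real TOP500 submissions. It solves a random n×n system by Gaussian elimination with partial pivoting in 64-bit floating point, credits the run with the 2/3·n³ + 3/2·n² operation count that HPL uses, and divides by wall-clock time. The function names (`lu_solve`, `hpl_flops`, `run`), the matrix size, and the uniform random entries are assumptions chosen for illustration.

```python
import random
import time


def lu_solve(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting in 64-bit floats."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies so the caller's matrix is untouched
    b = b[:]
    for k in range(n):
        # Partial pivoting: swap in the row with the largest |a[i][k]| at or below row k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if p != k:
            a[k], a[p] = a[p], a[k]
            b[k], b[p] = b[p], b[k]
        # Eliminate column k from every row below the pivot.
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            row_i, row_k = a[i], a[k]
            for j in range(k + 1, n):
                row_i[j] -= factor * row_k[j]
            b[i] -= factor * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i] - sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / a[i][i]
    return x


def hpl_flops(n):
    """Operation count HPL credits to an n x n solve: 2/3 n^3 + 3/2 n^2."""
    return 2.0 / 3.0 * n**3 + 1.5 * n**2


def run(n=200, seed=0):
    """Time one solve of a random n x n system; return (Gflop/s, max-norm residual)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    start = time.perf_counter()
    x = lu_solve(a, b)
    elapsed = time.perf_counter() - start
    # Unscaled residual ||A x - b||_inf; HPL applies a scaled version of this check.
    resid = max(abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))
    return hpl_flops(n) / elapsed / 1e9, resid


if __name__ == "__main__":
    gflops, resid = run()
    print(f"{gflops:.3f} Gflop/s, residual {resid:.1e}")
```

Pure Python typically reaches only a small fraction of a Gflop/s here. The point is the bookkeeping (a fixed operation count divided by wall time, accepted only if the answer passes an accuracy check), not the speed.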

The TOP500 list is compiled by Jack Dongarra of the University of Tennessee, Knoxville, Erich Strohmaier and Horst Simon of the National Energy Research Scientific Computing Center (NERSC) and Lawrence Berkeley National Laboratory (LBNL), and, until his death in 2014, Hans Meuer of the University of Mannheim, Germany.

In 1992, after experiments with metrics based on processor count, the idea arose at the University of Mannheim to use a detailed listing of installed systems as the basis for ranking supercomputers.

For comparison, El Capitan's 1.742 exaflops is roughly 13.3 million times the 131.0 GFLOPS Rpeak of the Connection Machine CM-5/1024 (1,024 cores), which was the fastest system in November 1993, thirty-one years earlier.
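Recomputing the ratio from the two quoted figures is a quick sanity check. The sketch below divides El Capitan's 1742 petaflops by the CM-5/1024's 131.0 GFLOPS, giving roughly 13.3 million (note that this sets a measured Rmax against a theoretical Rpeak). As an added illustration not made in the text, it also derives the implied compound annual growth factor and doubling time over the 31 years from November 1993 to November 2024, under the simplifying assumption of smooth exponential growth.

```python
import math

# Figures quoted in the text, converted to FLOP/s.
EL_CAPITAN_RMAX = 1742e15  # 1742 petaflops (Rmax), November 2024
CM5_RPEAK = 131.0e9        # 131.0 GFLOPS (Rpeak), CM-5/1024, November 1993

ratio = EL_CAPITAN_RMAX / CM5_RPEAK
years = 2024 - 1993

# Assumes smooth exponential growth between the two data points.
annual_growth = ratio ** (1 / years)
doubling_years = math.log(2) / math.log(annual_growth)

print(f"ratio: {ratio:,.0f}")
print(f"annual growth factor: {annual_growth:.2f}, doubling time: {doubling_years:.2f} years")
```

Under that assumption the top of the list grew by a factor of about 1.7 per year, doubling roughly every 1.3 years.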

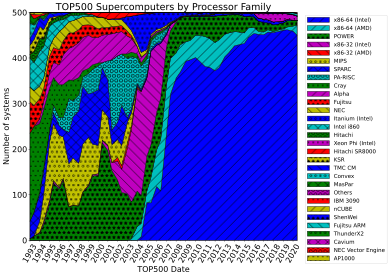

In recent years, heterogeneous computing has dominated the TOP500, mostly using Nvidia's graphics processing units (GPUs) or Intel's x86-based Xeon Phi as coprocessors.

[12] Before the ascendancy of 32-bit x86 and later 64-bit x86-64 in the early 2000s, a variety of RISC processor families made up most TOP500 supercomputers, including SPARC, MIPS, PA-RISC, and Alpha.

[17] It has been well over a decade since MIPS systems dropped entirely off the list,[18] though the Gyoukou supercomputer, which jumped to 4th place[19] in November 2017, had a MIPS-based design as a small part of its coprocessors.

Gyoukou's use of 2,048-core coprocessors (plus eight 6-core MIPS processors for each, which "no longer require to rely on an external Intel Xeon E5 host processor"[20]) made the supercomputer much more energy efficient than the rest of the top 10: it was 5th on the Green500, and other ZettaScaler-2.2-based systems took the first three spots.

As of November 2024, the number one supercomputer is El Capitan, while the leader on the Green500 is JEDI, a Bull Sequana XH3000 system using the Nvidia Grace Hopper GH200 Superchip.

The United States has the highest aggregate computational power on the list, at 6,324 petaflops Rmax, with Japan second (919 petaflops) and Germany third (396 petaflops).

[79] On 10 April 2015, US government agencies banned the sale of chips from Nvidia to supercomputing centers in China as "acting contrary to the national security ... interests of the United States",[80] and barred Intel Corporation from providing Xeon chips to China because, according to the US, they were being used in nuclear weapons research, to which US export control law bans US companies from contributing. "The Department of Commerce refused, saying it was concerned about nuclear research being done with the machine."

Inspur has registered over $10 billion in revenue and has provided a number of systems to countries such as Sudan, Zimbabwe, Saudi Arabia, and Venezuela.

Inspur was also a major technology partner behind both the Tianhe-2 and Sunway TaihuLight supercomputers, which occupied the top two positions of the TOP500 list up until November 2017.

[88] On 18 March 2019, the United States Department of Energy and Intel announced the first exaFLOP supercomputer would be operational at Argonne National Laboratory by the end of 2021.

[89][90] On 7 May 2019, the U.S. Department of Energy announced a contract with Cray to build the "Frontier" supercomputer at Oak Ridge National Laboratory.

[92] In May 2022, the Frontier supercomputer broke the exascale barrier, completing more than a quintillion 64-bit floating-point operations per second.

A prominent example of a system deliberately kept off the list is NCSA's Blue Waters, whose operators publicly announced the decision not to participate[95] because they did not feel the list accurately indicates the ability of any system to do useful work.

A Google Tensor Processing Unit v4 pod is capable of 1.1 exaflops of peak performance,[98] while TPU v5p claims over 4 exaflops in bfloat16 floating-point format.[99] However, these units are highly specialized to run machine learning workloads, and the TOP500 measures a specific benchmark algorithm using a specific numeric precision (64-bit floating point).
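To make the precision point concrete: bfloat16 keeps only 8 significand bits (7 stored), against 53 for the 64-bit format used in TOP500 LINPACK runs, so peak rates quoted in bfloat16 are not comparable with Rmax figures. The sketch below approximates bfloat16 using only the Python standard library by truncating a float32 bit pattern to its top 16 bits. The helper name `to_bfloat16` is hypothetical, and truncation is a simplifying assumption, since hardware conversions usually round to nearest even.

```python
import struct
import sys


def to_bfloat16(x: float) -> float:
    """Approximate bfloat16 by keeping the top 16 bits of x's float32 pattern (truncation)."""
    # struct rounds x to the nearest float32 first; bfloat16 shares float32's sign
    # bit and 8-bit exponent, so dropping the low 16 bits leaves 7 stored fraction bits.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    (y,) = struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))
    return y


third = 1.0 / 3.0
for name, value in [("float64", third), ("bfloat16", to_bfloat16(third))]:
    rel_err = abs(value - third) / third
    print(f"{name:>8}: {value!r:<22} relative error {rel_err:.1e}")

print(f"float64 machine epsilon: {sys.float_info.epsilon:.1e}")
```

With truncation, 1/3 becomes 0.33203125, an error of about 0.4%, many orders of magnitude coarser than float64's machine epsilon of roughly 2.2e-16.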

Tesla Dojo's primary unnamed cluster using 5,760 Nvidia A100 graphics processing units (GPUs) was touted by Andrej Karpathy in 2021 at the fourth International Joint Conference on Computer Vision and Pattern Recognition (CCVPR 2021) to be "roughly the number five supercomputer in the world"[100] at approximately 81.6 petaflops, based on scaling the performance of the Nvidia Selene supercomputer, which uses similar components.
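The 81.6 petaflops figure is consistent with simple linear scaling by GPU count. The sketch below assumes Selene's November 2020 TOP500 entry of 63.46 petaflops Rmax on 4,480 A100 GPUs (these reference figures are not stated in the text and are an assumption here) and that performance grows in direct proportion to GPU count. The helper `linear_scale` is a hypothetical name for illustration.

```python
# Reference point ASSUMED for this sketch (not stated in the text):
# Nvidia Selene on the November 2020 TOP500 list, 63.46 petaflops Rmax on 4,480 A100 GPUs.
SELENE_RMAX_PF = 63.46
SELENE_GPUS = 4480
TESLA_CLUSTER_GPUS = 5760  # GPU count given in the text


def linear_scale(rmax_pf: float, gpus_ref: int, gpus_target: int) -> float:
    """Estimate Rmax assuming performance is directly proportional to GPU count."""
    return rmax_pf * gpus_target / gpus_ref


estimate = linear_scale(SELENE_RMAX_PF, SELENE_GPUS, TESLA_CLUSTER_GPUS)
print(f"estimated Rmax: {estimate:.1f} petaflops")
```

Since 5,760 / 4,480 = 9/7 ≈ 1.29, the estimate is Selene's Rmax scaled up by about 29%. HPL efficiency can drop as systems grow, so an estimate like this is an extrapolation, not a measured result.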

[101] In March 2024, Meta AI disclosed the operation of two datacenters, each with 24,576 H100 GPUs,[102] almost twice as many as Microsoft Azure's Eagle (#3 as of September 2024). This could have placed them 3rd and 4th on the TOP500, but neither has been benchmarked.

[103] xAI's Memphis Supercluster (also known as "Colossus") allegedly features 100,000 of the same H100 GPUs, which could have put it in first place, but it is reportedly not in full operation due to power shortages.