Transpose

To avoid possible confusion, many authors use left superscripts, that is, they denote the transpose as ᵀA.

For example, software libraries for linear algebra, such as BLAS, typically provide options to specify that certain matrices are to be interpreted in transposed order to avoid the necessity of data movement.
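The idea of a transpose option can be sketched in a few lines of Python. This is an illustration only, not the BLAS interface: the function name `matvec`, its `trans` parameter, and the flat row-major storage layout are all assumptions made for the example. With the flag set, the routine reads the same buffer with the roles of rows and columns swapped, so no element is moved or copied.

```python
def matvec(data, rows, cols, x, trans=False):
    """Multiply a matrix by a vector, optionally treating the matrix as transposed.

    `data` is a flat list holding a rows x cols matrix in row-major order
    (a hypothetical layout chosen for this sketch). With trans=True the
    result is (A transposed) times x, computed without reordering `data`.
    """
    if len(data) != rows * cols:
        raise ValueError("data must contain rows * cols elements")
    if not trans:
        if len(x) != cols:
            raise ValueError("x must have one entry per column")
        # Ordinary product: walk each row of the stored matrix.
        return [sum(data[r * cols + c] * x[c] for c in range(cols))
                for r in range(rows)]
    if len(x) != rows:
        raise ValueError("x must have one entry per row")
    # Transposed product: walk each stored column instead, in place.
    return [sum(data[r * cols + c] * x[r] for r in range(rows))
            for c in range(cols)]


# A is the 2 x 3 matrix [[1, 2, 3], [4, 5, 6]].
a = [1, 2, 3, 4, 5, 6]
plain = matvec(a, 2, 3, [1, 1, 1])           # A times (1, 1, 1)
flipped = matvec(a, 2, 3, [1, 1], trans=True)  # A transposed times (1, 1)
```

Only the index arithmetic changes between the two branches; the stored data is identical in both cases.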

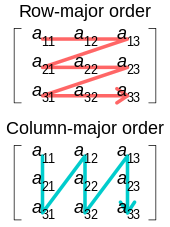

However, there remain a number of circumstances in which it is necessary or desirable to physically reorder a matrix in memory to its transposed ordering.

If repeated operations need to be performed on the columns, for example in a fast Fourier transform algorithm, transposing the matrix in memory (to make the columns contiguous) may improve performance by increasing memory locality.

For a non-square n × m matrix (n ≠ m), this involves a complicated permutation of the data elements that is non-trivial to implement in-place.
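One way to see why the permutation is awkward is to follow its cycles directly. The sketch below is a simple cycle-following approach, not any particular published algorithm; it assumes the matrix is stored as a flat row-major list, and the name `transpose_in_place` is made up for the example. The element at flat index i of the n × m matrix belongs at index (i · n) mod (nm − 1) in the m × n result, with the last element staying where it is.

```python
def transpose_in_place(a, n, m):
    """Transpose an n x m row-major matrix held in the flat list `a`, in place.

    Uses a constant number of extra variables. Each cycle of the permutation
    is rotated once, starting from its smallest index (its "leader").
    """
    size = n * m
    if len(a) != size:
        raise ValueError("a must contain n * m elements")
    last = size - 1

    def dest(i):
        # Index (r, c) -> r*m + c in the source maps to c*n + r in the result,
        # which equals (i * n) mod (size - 1) for every i below the last index.
        return (i * n) % last

    for start in range(1, last):
        # Walk the cycle to check whether `start` is its smallest index.
        j = dest(start)
        while j > start:
            j = dest(j)
        if j < start:
            continue  # this cycle was already rotated from a smaller leader

        # Rotate the cycle: carry each value forward to its destination.
        value = a[start]
        j = dest(start)
        while j != start:
            a[j], value = value, a[j]
            j = dest(j)
        a[start] = value


# The 2 x 3 matrix [[1, 2, 3], [4, 5, 6]] becomes the 3 x 2 matrix
# [[1, 4], [2, 5], [3, 6]], stored in the same list.
matrix = [1, 2, 3, 4, 5, 6]
transpose_in_place(matrix, 2, 3)
```

The leader test avoids any bookkeeping array but may traverse a cycle more than once, which is the kind of time-versus-space trade-off that makes the problem non-trivial.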

Therefore, efficient in-place matrix transposition has been the subject of numerous research publications in computer science, starting in the late 1950s, and several algorithms have been developed.

[10] The definition of the transpose may be seen to be independent of any bilinear form on the modules, unlike the adjoint (below).

If X and Y are topological vector spaces (TVSs), then a linear map u : X → Y is weakly continuous if and only if u#(Y') ⊆ X', in which case we let ᵗu : Y' → X' denote the restriction of u# to Y'.

Every linear map to the dual space u : X → X# defines a bilinear form B : X × X → F, with the relation B(x, y) = u(x)(y).
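The relation B(x, y) = u(x)(y) can be made concrete in a small finite-dimensional case. The sketch below assumes X is the plane with real coordinates and represents functionals as Python functions; the helper `make_u` and the coefficient matrix `M` are hypothetical choices made only for illustration.

```python
def make_u(M):
    """Build a linear map u from X to its dual, given a coefficient matrix M.

    For a vector x, u(x) is the functional sending y to the sum over i, j of
    x[i] * M[i][j] * y[j]. The matrix M is an assumption of this sketch.
    """
    dim = len(M)

    def u(x):
        def functional(y):
            return sum(x[i] * M[i][j] * y[j]
                       for i in range(dim) for j in range(dim))
        return functional

    return u


def bilinear_form(u):
    """Return the bilinear form B defined by B(x, y) = u(x)(y)."""
    return lambda x, y: u(x)(y)


M = [[1, 2], [3, 4]]
B = bilinear_form(make_u(M))

e1, e2 = [1, 0], [0, 1]
b12 = B(e1, e2)  # picks out M[0][1]
b21 = B(e2, e1)  # picks out M[1][0]
```

Evaluating B on pairs of basis vectors recovers the entries of M, which is the usual correspondence between bilinear forms and matrices once a basis is fixed.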

In particular, this allows the orthogonal group over a vector space X with a quadratic form to be defined without reference to matrices (nor the components thereof) as the set of all linear maps X → X for which the adjoint equals the inverse.