Trolley problem

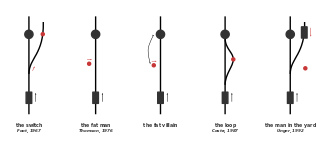

[1] Later dubbed "the trolley problem" by Judith Jarvis Thomson in a 1976 article that catalyzed a large literature, the subject refers to the meta-problem of why different judgments are arrived at in particular instances.

[8][9] In his commentary on the Talmud, published long before his death in 1953, Avrohom Yeshaya Karelitz considered the question of whether it is ethical to deflect a projectile from a larger crowd toward a smaller one.

[12] Trolley-style scenarios also arise in discussing the ethics of autonomous vehicle design, which may require programming to choose whom or what to strike when a collision appears to be unavoidable.

[14] Foot's version of the thought experiment, now known as "Trolley Driver", ran as follows: Suppose that a judge or magistrate is faced with rioters demanding that a culprit be found for a certain crime and threatening otherwise to take their own bloody revenge on a particular section of the community.

In 2001, Joshua Greene and colleagues published the results of the first significant empirical investigation of people's responses to trolley problems.

[16] Using functional magnetic resonance imaging, they demonstrated that "personal" dilemmas (like pushing a man off a footbridge) preferentially engage brain regions associated with emotion, whereas "impersonal" dilemmas (like diverting the trolley by flipping a switch) preferentially engage regions associated with controlled reasoning.

Since then, numerous other studies have employed trolley problems to study moral judgment, investigating topics like the role and influence of stress,[17] emotional state,[18] impression management,[19] levels of anonymity,[20] different types of brain damage,[21] physiological arousal,[22] different neurotransmitters,[23] and genetic factors[24] on responses to trolley dilemmas.

Roger Scruton writes, "These 'dilemmas' have the useful character of eliminating from the situation just about every morally relevant relationship and reducing the problem to one of arithmetic alone."

[39] Unlike in the previous scenario, pushing the fat villain to stop the trolley may be seen as a form of retributive justice or self-defense.

Variants of the original Trolley Driver dilemma arise in the design of software to control autonomous cars.

[13] Situations are anticipated where a potentially fatal collision appears to be unavoidable, but in which choices made by the car's software, such as into whom or what to crash, can affect the particulars of the deadly outcome.

[40][41][42][43] A platform called Moral Machine[44] was created by the MIT Media Lab to allow the public to express their opinions on what decisions autonomous vehicles should make in scenarios that use the trolley problem paradigm.

It would need to be a top-down plan to fit current approaches to addressing emergencies in artificial intelligence.

According to Gogoll and Müller, "the reason is, simply put, that [personalized ethics settings] would most likely result in a prisoner’s dilemma."

[52][53] The German government's ethics commission on automated driving adopted 20 rules to be implemented in the laws that will govern the ethical choices that autonomous vehicles will make.

However, they cannot be standardized to a complex or intuitive assessment of the impacts of an accident in such a way that they can replace or anticipate the decision of a responsible driver with the moral capacity to make correct judgements.

Such legal judgements, made in retrospect and taking special circumstances into account, cannot readily be transformed into abstract/general ex ante appraisals and thus also not into corresponding programming activities.