Boolean satisfiability problem

Nevertheless, as of 2007, heuristic SAT-algorithms are able to solve problem instances involving tens of thousands of variables and formulas consisting of millions of symbols,[1] which is sufficient for many practical SAT problems from, e.g., artificial intelligence, circuit design,[2] and automatic theorem proving.

A propositional logic formula, also called Boolean expression, is built from variables, operators AND (conjunction, also denoted by ∧), OR (disjunction, ∨), NOT (negation, ¬), and parentheses.

For some versions of the SAT problem, it is useful to define the notion of a generalized conjunctive normal form formula, viz.

as a conjunction of arbitrarily many generalized clauses, the latter being of the form R(l1,...,ln) for some Boolean function R and (ordinary) literals li.

Using the laws of Boolean algebra, every propositional logic formula can be transformed into an equivalent conjunctive normal form, which may, however, be exponentially longer.

SAT was the first problem known to be NP-complete, as proved by Stephen Cook at the University of Toronto in 1971[8] and independently by Leonid Levin at the Russian Academy of Sciences in 1973.

The formula resulting from transforming all clauses is at most 3 times as long as its original; that is, the length growth is polynomial.

[11] There is a simple randomized algorithm due to Schöning (1999) that runs in time (4/3)n where n is the number of variables in the 3-SAT proposition, and succeeds with high probability to correctly decide 3-SAT.

Selman, Mitchell, and Levesque (1996) give empirical data on the difficulty of randomly generated 3-SAT formulas, depending on their size parameters.

They identified a phase transition region from almost-certainly-satisfiable to almost-certainly-unsatisfiable formulas at the clauses-to-variables ratio at about 4.26.

Conjunctive normal form (in particular with 3 literals per clause) is often considered the canonical representation for SAT formulas.

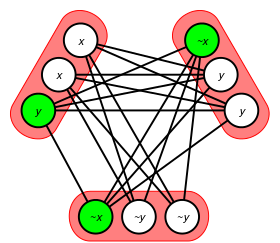

Then the formula R(x,a,d) ∧ R(y,b,d) ∧ R(a,b,e) ∧ R(c,d,f) ∧ R(z,c,FALSE) is satisfiable by some setting of the fresh variables if and only if at least one of x, y, or z is TRUE, see picture (left).

[16] SAT is easier if the number of literals in a clause is limited to at most 2, in which case the problem is called 2-SAT.

This problem can be solved in polynomial time, and in fact is complete for the complexity class NL.

If additionally all OR operations in literals are changed to XOR operations, then the result is called exclusive-or 2-satisfiability, which is a problem complete for the complexity class SL = L. The problem of deciding the satisfiability of a given conjunction of Horn clauses is called Horn-satisfiability, or HORN-SAT.

Another special case is the class of problems where each clause contains XOR (i.e. exclusive or) rather than (plain) OR operators.

[e] This is in P, since an XOR-SAT formula can also be viewed as a system of linear equations mod 2, and can be solved in cubic time by Gaussian elimination;[18] see the box for an example.

Provided that the complexity classes P and NP are not equal, neither 2-, nor Horn-, nor XOR-satisfiability is NP-complete, unlike SAT.

Schaefer's dichotomy theorem states that, for any restriction to Boolean functions that can be used to form these subformulas, the corresponding satisfiability problem is in P or NP-complete.

Such extensions typically remain NP-complete, but very efficient solvers are now available that can handle many such kinds of constraints.

The satisfiability problem becomes more difficult if both "for all" (∀) and "there exists" (∃) quantifiers are allowed to bind the Boolean variables.

A variety of variants deal with the number of such assignments: Other generalizations include satisfiability for first- and second-order logic, constraint satisfaction problems, 0-1 integer programming.

That is, each algorithm which correctly answers whether an instance of SAT is solvable can be used to find a satisfying assignment.

In spite of this, efficient and scalable algorithms for SAT were developed during the 2000s and have contributed to dramatic advances in the ability to automatically solve problem instances involving tens of thousands of variables and millions of constraints (i.e.

[1] Examples of such problems in electronic design automation (EDA) include formal equivalence checking, model checking, formal verification of pipelined microprocessors,[19] automatic test pattern generation, routing of FPGAs,[27] planning, and scheduling problems, and so on.

A SAT-solving engine is also considered to be an essential component in the electronic design automation toolbox.

Almost all SAT solvers include time-outs, so they will terminate in reasonable time even if they cannot find a solution.

[29] Modern SAT solvers are also having significant impact on the fields of software verification, constraint solving in artificial intelligence, and operations research, among others.