Probability

Probability is the branch of mathematics and statistics concerning events and numerical descriptions of how likely they are to occur.

These concepts have been given an axiomatic mathematical formalization in probability theory, which is used widely in areas of study such as statistics, mathematics, science, finance, gambling, artificial intelligence, machine learning, computer science, game theory, and philosophy to, for example, draw inferences about the expected frequency of events.

In a sense, this differs considerably from the modern meaning of probability, which is instead a measure of the weight of empirical evidence, arrived at through inductive reasoning and statistical inference.

[4] When dealing with random experiments – i.e., experiments that are random and well-defined – in a purely theoretical setting (like tossing a coin), probabilities can be numerically described by the number of desired outcomes divided by the total number of outcomes, provided that all outcomes are equally likely.

For example, tossing a coin twice will yield "head-head", "head-tail", "tail-head", and "tail-tail" outcomes.
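The counting rule for the two-toss example can be sketched in Python. This is a minimal illustration that assumes a fair coin, so that all four outcomes are equally likely; the helper `probability` and the two events are hypothetical names chosen for the example.

```python
from fractions import Fraction
from itertools import product

# Sample space for two tosses of a coin: every ordered pair of faces.
sample_space = list(product(["head", "tail"], repeat=2))


def probability(event):
    """Classical probability: favourable outcomes / all outcomes.

    Assumes every outcome in sample_space is equally likely.
    """
    favourable = [outcome for outcome in sample_space if event(outcome)]
    return Fraction(len(favourable), len(sample_space))


# Hypothetical events chosen for illustration.
p_two_heads = probability(lambda o: o == ("head", "head"))
p_at_least_one_head = probability(lambda o: "head" in o)

print(sample_space)
print(p_two_heads)          # 1/4
print(p_at_least_one_head)  # 3/4
```

Using `Fraction` keeps the results exact, which makes the ratio of desired to total outcomes visible directly.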

Gambling shows that there has been an interest in quantifying the ideas of probability throughout history, but exact mathematical descriptions arose much later.

Whereas games of chance provided the impetus for the mathematical study of probability, fundamental issues [note 2] are still obscured by superstitions.

[11] According to Richard Jeffrey, "Before the middle of the seventeenth century, the term 'probable' (Latin probabilis) meant approvable, and was applied in that sense, univocally, to opinion and to action.

Aside from the elementary work by Cardano, the doctrine of probabilities dates to the correspondence of Pierre de Fermat and Blaise Pascal (1654).

[15] Jakob Bernoulli's Ars Conjectandi (posthumous, 1713) and Abraham de Moivre's Doctrine of Chances (1718) treated the subject as a branch of mathematics.

"It is difficult historically to attribute that law to Gauss, who in spite of his well-known precocity had probably not made this discovery before he was two years old.

"[19] Daniel Bernoulli (1778) introduced the principle of the maximum product of the probabilities of a system of concurrent errors.

Adrien-Marie Legendre (1805) developed the method of least squares, and introduced it in his Nouvelles méthodes pour la détermination des orbites des comètes (New Methods for Determining the Orbits of Comets).

[20] In ignorance of Legendre's contribution, an Irish-American writer, Robert Adrain, editor of "The Analyst" (1808), first deduced the law of facility of error.

In the nineteenth century, authors on the general theory included Laplace, Sylvestre Lacroix (1816), Littrow (1833), Adolphe Quetelet (1853), Richard Dedekind (1860), Helmert (1872), Hermann Laurent (1873), Liagre, Didion and Karl Pearson.

[22] On the geometric side, contributors to The Educational Times included Miller, Crofton, McColl, Wolstenholme, Watson, and Artemas Martin.

These formal terms are manipulated by the rules of mathematics and logic, and any results are interpreted or translated back into the problem domain.

The insurance industry and markets use actuarial science to determine pricing and make trading decisions.

The theory of behavioral finance emerged to describe the effect of such groupthink on pricing, on policy, and on peace and conflict.

[25] As with finance, risk assessment can be used as a statistical tool to calculate the likelihood of undesirable events occurring, and can assist with implementing protocols to avoid encountering such circumstances.

Probability is used to design games of chance so that casinos can make a guaranteed profit, yet provide payouts to players that are frequent enough to encourage continued play.
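As a rough illustration of how a game can favour the house on average, the sketch below computes the player's expected net gain on a single-number roulette bet. The figures are assumptions made for the example rather than taken from the text: a single-zero wheel with 37 equally likely pockets and a 35-to-1 payout.

```python
from fractions import Fraction

# Assumptions for illustration: a single-zero wheel with 37 equally likely
# pockets and a 35-to-1 payout on a single-number bet.
POCKETS = 37
PAYOUT = 35

p_win = Fraction(1, POCKETS)
p_lose = 1 - p_win

# Expected net gain for the player per unit staked:
# win PAYOUT units with probability p_win, lose 1 unit otherwise.
expected_gain = p_win * PAYOUT + p_lose * (-1)

# The house edge is the casino's expected share of each unit staked.
house_edge = -expected_gain

print(expected_gain)      # -1/37
print(float(house_edge))  # roughly 0.027
```

Under these assumptions the player wins on any given spin with probability 1/37, yet loses 1/37 of the stake on average, so the casino's profit is an expectation over many bets rather than a certainty on each one.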

The power set of the sample space is formed by considering all different collections of possible results.
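The construction can be sketched for a small sample space. The example below assumes the six results of rolling a single die; the function name `power_set` is a hypothetical helper, not a standard library call.

```python
from itertools import chain, combinations

# Assumed sample space for illustration: the six faces of a die.
sample_space = [1, 2, 3, 4, 5, 6]


def power_set(outcomes):
    """Return every subset of outcomes, from the empty set to the full set."""
    items = list(outcomes)
    subsets = chain.from_iterable(
        combinations(items, size) for size in range(len(items) + 1)
    )
    return [frozenset(subset) for subset in subsets]


events = power_set(sample_space)

# One collection of possible results: the die shows an odd number.
odd = frozenset({1, 3, 5})

print(len(events))    # 64, i.e. 2 ** 6
print(odd in events)  # True
```

Each element of `events` is one collection of possible results, so a sample space with n results yields 2^n such collections.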

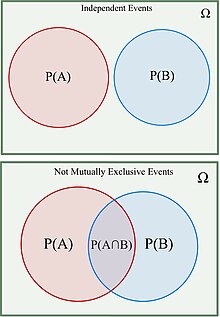

If two events A and B both occur on a single performance of an experiment, this is called the intersection of A and B, and its probability is called the joint probability of A and B, denoted as P(A ∩ B).
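A joint probability can be computed by intersecting two events and counting the outcomes they share. The sketch below assumes one roll of a fair die; the events `A` (an even number) and `B` (a number greater than 3) are hypothetical choices for illustration.

```python
from fractions import Fraction

# Assumed sample space: one roll of a fair die, all faces equally likely.
sample_space = {1, 2, 3, 4, 5, 6}

# Hypothetical events chosen for illustration.
A = {2, 4, 6}  # the roll is even
B = {4, 5, 6}  # the roll is greater than 3


def probability(event):
    """Probability of an event as a share of equally likely outcomes."""
    return Fraction(len(event), len(sample_space))


# Outcomes on which A and B both occur.
both = A & B

joint = probability(both)

print(sorted(both))  # [4, 6]
print(joint)         # 1/3
```

Here both events occur exactly when the roll is 4 or 6, so the joint probability is 2/6 = 1/3.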

In the case of a roulette wheel, if the force of the hand and the period of that force are known, the number on which the ball will stop would be a certainty (though as a practical matter, this would likely be true only of a roulette wheel that had not been exactly levelled – as Thomas A. Bass' Newtonian Casino revealed).

This also assumes knowledge of inertia and friction of the wheel, weight, smoothness, and roundness of the ball, variations in hand speed during the turning, and so forth.

A probabilistic description can thus be more useful than Newtonian mechanics for analyzing the pattern of outcomes of repeated rolls of a roulette wheel.

Physicists face the same situation in the kinetic theory of gases, where the system, while deterministic in principle, is so complex (with the number of molecules typically of the order of magnitude of the Avogadro constant, 6.02×10²³) that only a statistical description of its properties is feasible.

Albert Einstein famously remarked in a letter to Max Born: "I am convinced that God does not play dice".

[37] Like Einstein, Erwin Schrödinger, who discovered the wave function, believed quantum mechanics is a statistical approximation of an underlying deterministic reality.

[38] In some modern interpretations of the statistical mechanics of measurement, quantum decoherence is invoked to account for the appearance of subjectively probabilistic experimental outcomes.