Adaptive filter

An adaptive filter is a system with a linear filter that has a transfer function controlled by variable parameters and a means to adjust those parameters according to an optimization algorithm.

Adaptive filters are required for some applications because some parameters of the desired processing operation (for instance, the locations of reflective surfaces in a reverberant space) are not known in advance or are changing.

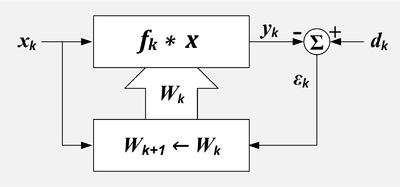

The closed loop adaptive filter uses feedback in the form of an error signal to refine its transfer function.

Generally speaking, the closed loop adaptive process uses a cost function, which is a criterion for optimum performance of the filter, to drive an algorithm that determines how to modify the filter transfer function to minimize the cost on the next iteration.

The most common cost function is the mean square of the error signal.

As the power of digital signal processors has increased, adaptive filters have become much more common and are now routinely used in devices such as mobile phones and other communication devices, camcorders and digital cameras, and medical monitoring equipment.

The recording of a heart beat (an ECG) may be corrupted by noise from the AC mains.

One way to remove the noise is to filter the signal with a notch filter at the mains frequency and its vicinity, but this could excessively degrade the quality of the ECG since the heart beat would also likely have frequency components in the rejected range.

To circumvent this potential loss of information, an adaptive filter could be used.

Such an adaptive technique generally allows for a filter with a smaller rejection range, which means, in this case, that the output signal is more accurate for medical purposes.
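The ECG example can be illustrated with a minimal sketch of a two-weight adaptive noise canceller. Everything specific here is an assumption made for illustration: the 50 Hz mains frequency, the 500 Hz sample rate, the synthetic sine wave standing in for an ECG, the function name `cancel_mains`, and the use of the LMS update rule (a common choice, described later in this article) with a sine/cosine reference generated at the mains frequency.

```python
import math

def cancel_mains(primary, fs, mains_hz=50.0, mu=0.01):
    """Subtract an adaptively scaled sine/cosine pair at mains_hz from primary."""
    w_sin, w_cos = 0.0, 0.0                  # the two adaptive weights
    cleaned = []
    for k, d in enumerate(primary):
        phase = 2.0 * math.pi * mains_hz * k / fs
        x_sin, x_cos = math.sin(phase), math.cos(phase)   # reference inputs
        y = w_sin * x_sin + w_cos * x_cos    # current estimate of the mains hum
        e = d - y                            # error signal, also the cleaned output
        w_sin += 2.0 * mu * e * x_sin        # LMS weight updates
        w_cos += 2.0 * mu * e * x_cos
        cleaned.append(e)
    return cleaned

def mean_square_diff(a, b):
    """Mean squared difference between two equal-length sequences."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

fs = 500.0                                   # assumed sample rate in Hz
n = 5000
# Crude stand-in for an ECG: a 1.2 Hz sine wave (not a realistic waveform).
heartbeat = [math.sin(2.0 * math.pi * 1.2 * k / fs) for k in range(n)]
# Mains hum with an amplitude and phase unknown to the canceller.
hum = [0.8 * math.sin(2.0 * math.pi * 50.0 * k / fs + 0.7) for k in range(n)]
primary = [s + h for s, h in zip(heartbeat, hum)]

cleaned = cancel_mains(primary, fs)

# Compare the last 1000 samples, after the weights have had time to settle.
residual_before = mean_square_diff(primary[-1000:], heartbeat[-1000:])
residual_after = mean_square_diff(cleaned[-1000:], heartbeat[-1000:])
print(residual_before, residual_after)
```

Because only two weights adapt, the canceller behaves like a very narrow notch whose width is set by `mu`, which is one way to obtain the smaller rejection range mentioned above.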

[4](Widrow)

The input signals are defined as follows:

The output signals are defined as follows:

If the variable filter has a tapped delay line finite impulse response (FIR) structure, then the impulse response is equal to the filter coefficients.

The output of the variable filter in the ideal case is

The error signal or cost function is the difference between the desired signal and the filter output.

This formula means that the output signal to interference ratio at a particular frequency is the reciprocal of the reference signal to interference ratio.

Before getting to the window, customers place their order by speaking into a microphone.

In this case, the engine noise is 50 times more powerful than the customer's voice.

Once the canceler has converged, the primary signal to interference ratio will be improved from 1:1 to 50:1.
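The arithmetic behind this figure can be checked with a short sketch. It assumes that the factor of 50 describes the reference input, so that the reference signal to interference ratio is 1:50; the function name `output_sir` is hypothetical, and the decibel conversion is added only for convenience.

```python
import math

def output_sir(reference_sir):
    """Output signal to interference ratio as the reciprocal of the reference ratio."""
    return 1.0 / reference_sir

reference_sir = 1.0 / 50.0        # assumed: voice power is 1/50 of engine noise power
out = output_sir(reference_sir)   # power ratio at the canceller output
out_db = 10.0 * math.log10(out)   # the same power ratio expressed in decibels
print(out, round(out_db, 2))
```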

The adaptive linear combiner (ALC) resembles the adaptive tapped delay line FIR filter except that there is no assumed relationship between the X values.

If the X values were from the outputs of a tapped delay line, then the combination of tapped delay line and ALC would comprise an adaptive filter.

The ALC finds use as an adaptive beam former for arrays of hydrophones or antennas.
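The relationship between the ALC and the tapped delay line FIR filter can be sketched as follows. The function names `alc_output` and `tapped_delay_line` and the sample values are hypothetical and chosen only for illustration; samples before the start of the signal are assumed to be zero.

```python
def alc_output(weights, inputs):
    """Adaptive linear combiner output: a weighted sum of arbitrary inputs."""
    return sum(w * x for w, x in zip(weights, inputs))

def tapped_delay_line(signal, k, num_taps):
    """Return signal[k], signal[k-1], ..., treating samples before the start as 0."""
    return [signal[k - l] if k - l >= 0 else 0.0 for l in range(num_taps)]

# Case 1: unrelated inputs, for example simultaneous samples from three sensors.
sensor_samples = [0.2, -1.0, 0.4]
combined = alc_output([1.0, 1.0, 1.0], sensor_samples)

# Case 2: inputs taken from a tapped delay line, which makes the ALC an FIR filter.
signal = [1.0, 2.0, 3.0, 4.0]
weights = [0.5, 0.25]
fir_sample = alc_output(weights, tapped_delay_line(signal, 2, len(weights)))
print(combined, fir_sample)
```

In both cases the combiner itself is unchanged; only the source of the X values differs.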

If the variable filter has a tapped delay line FIR structure, then the LMS update algorithm is especially simple.

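Typically, after each sample, the coefficients of the FIR filter are adjusted as follows:[6]

$$w_{l,k+1} = w_{lk} + 2\mu\,\epsilon_k\,x_{k-l}, \qquad l = 0, \dots, L$$

where $w_{lk}$ is the $l$-th coefficient at time $k$, $\epsilon_k$ is the error at time $k$, $x_{k-l}$ is the input delayed by $l$ samples, and μ is the convergence factor.

The LMS algorithm does not require that the X values have any particular relationship; therefore it can be used to adapt a linear combiner as well as an FIR filter.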

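In this case the update formula is written as:

$$w_{l,k+1} = w_{lk} + 2\mu\,\epsilon_k\,x_{lk}$$

where $w_{lk}$ is the $l$-th weight, $x_{lk}$ is the $l$-th input and $\epsilon_k$ is the error, all at time $k$.

The effect of the LMS algorithm is to make a small change in each weight at each time k.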

The direction of the change is such that it would decrease the error if it had been applied at time k. The magnitude of the change in each weight depends on μ, the associated X value and the error at time k. The weights making the largest contribution to the output,

μ controls how fast and how well the algorithm converges to the optimum filter coefficients.

If μ is too small, the algorithm converges slowly and may not be able to track changing conditions.

If μ is large, but not so large as to prevent convergence, the algorithm reaches steady state rapidly but continuously overshoots the optimum weight vector.

Sometimes, μ is made large at first for rapid convergence and then decreased to minimize overshoot.
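The effect of μ can be explored with a minimal sketch of LMS adaptation of a tapped delay line FIR filter, used here to identify an unknown system. The setup is an assumption made for illustration: the three-tap `unknown` response, the white Gaussian input, the noise level, the two step sizes, and the function name `lms_identify` are all hypothetical. The update uses the factor 2μ, one common convention.

```python
import random

def lms_identify(x, d, num_taps, mu):
    """Adapt FIR weights with LMS so the filter output tracks the desired signal d."""
    w = [0.0] * num_taps
    sq_errors = []
    for k in range(len(x)):
        # Contents of the tapped delay line at time k (zeros before the start).
        taps = [x[k - l] if k - l >= 0 else 0.0 for l in range(num_taps)]
        y = sum(wl * xl for wl, xl in zip(w, taps))     # filter output
        e = d[k] - y                                    # error at time k
        w = [wl + 2.0 * mu * e * xl for wl, xl in zip(w, taps)]
        sq_errors.append(e * e)
    return w, sq_errors

random.seed(0)
unknown = [0.5, -0.3, 0.2]                              # hypothetical system to identify
n = 4000
x = [random.gauss(0.0, 1.0) for _ in range(n)]          # white input signal
noise = [random.gauss(0.0, 0.1) for _ in range(n)]      # measurement noise
d = [sum(h * (x[k - l] if k - l >= 0 else 0.0) for l, h in enumerate(unknown))
     + noise[k] for k in range(n)]

w_slow, err_slow = lms_identify(x, d, len(unknown), mu=0.0005)
w_fast, err_fast = lms_identify(x, d, len(unknown), mu=0.05)

# Average squared error over the first 200 samples for each step size.
early_slow = sum(err_slow[:200]) / 200.0
early_fast = sum(err_fast[:200]) / 200.0
print(early_slow, early_fast, w_fast)
```

With these settings the larger step size reaches the neighborhood of the true coefficients within a few tens of samples, while the smaller one is still adapting after several hundred. The larger step size also tends to leave more jitter in the converged weights, which is the overshoot described above.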

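Widrow and Stearns stated in 1985 that they had no knowledge of a proof that the LMS algorithm will converge in all cases.[7]

However, under certain assumptions about stationarity and independence, it can be shown that the algorithm will converge if

$$0 < \mu < \frac{1}{\sigma^2}$$

where $\sigma^2 = \sum_{l=0}^{L} \sigma_l^2$ is the total input power and $\sigma_l$ is the RMS value of the $l$-th input.

In the case of the tapped delay line filter, each input has the same RMS value because they are simply the same values delayed. In this case the total power is

$$\sigma^2 = (L+1)\,\sigma_0^2$$

where $\sigma_0$ is the RMS value of the input stream and $L+1$ is the number of taps.

This leads to a normalized LMS algorithm:

$$w_{l,k+1} = w_{lk} + \frac{2\mu_\sigma}{\sigma^2}\,\epsilon_k\,x_{k-l}$$

where $\mu_\sigma = \mu\,\sigma^2$, so that the convergence criterion becomes $0 < \mu_\sigma < 1$.

The goal of nonlinear filters is to overcome the limitations of linear models.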