Base rate fallacy

These terms were introduced by William C. Thompson and Edward Schumann in 1987,[4][5] although it has been argued that their definition of the prosecutor's fallacy extends to many additional invalid imputations of guilt or liability that are not analyzable as errors in base rates or Bayes' theorem.

Imagine that a group of police officers have breathalyzers displaying false drunkenness in 5% of the cases in which the driver is sober.

In an attempt to catch the terrorists, the city installs an alarm system with a surveillance camera and automatic facial recognition software.

Multiple practitioners have argued that as the base rate of terrorism is extremely low, using data mining and predictive algorithms to identify terrorists cannot feasibly work due to the false positive paradox.[9][10][11][12]

Estimates of the number of false positives for each accurate result vary from over ten thousand[12] to one billion;[10] consequently, investigating each lead would be cost- and time-prohibitive.

Moreover, the low base rate of terrorism means there is a lack of data with which to make an accurate algorithm.[12]

It is also questionable whether the use of such models by law enforcement would meet the requisite burden of proof given that over 99% of results would be false positives.
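The arithmetic behind the false positive paradox can be sketched in a few lines of Python. The base rate, sensitivity, and false positive rate used here are purely hypothetical values chosen for illustration (none of them come from the cited estimates), and the function names are likewise assumptions.

```python
def false_positives_per_true_positive(base_rate, sensitivity, false_positive_rate):
    """Expected number of false alarms for each correct detection."""
    true_positive_share = base_rate * sensitivity
    false_positive_share = (1 - base_rate) * false_positive_rate
    return false_positive_share / true_positive_share


def share_of_alerts_that_are_false(base_rate, sensitivity, false_positive_rate):
    """Fraction of all positive results that are false positives."""
    ratio = false_positives_per_true_positive(base_rate, sensitivity, false_positive_rate)
    return ratio / (ratio + 1)


# Hypothetical inputs: 1 target per million people, a detector that catches
# 99% of targets and wrongly flags 1% of everyone else.
ratio = false_positives_per_true_positive(1e-6, 0.99, 0.01)
share = share_of_alerts_that_are_false(1e-6, 0.99, 0.01)
print(f"false positives per true positive: {ratio:,.0f}")
print(f"share of alerts that are false:    {share:.4%}")
```

Even with a detector this accurate, the hypothetical inputs give on the order of ten thousand false alarms per correct detection, because the low base rate dominates the result.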

A prosecutor might charge the suspect with the crime on that basis alone, and claim at trial that the probability that the defendant is guilty is 90%.

However, this conclusion is only close to correct if the defendant was selected as the main suspect based on robust evidence discovered prior to the blood test and unrelated to it.

Whilst the former is usually small (10% in the previous example) due to good forensic evidence procedures, the latter (99% in that example) does not directly relate to it and will often be much higher, since, in fact, it depends on the likely quite high prior odds of the defendant being a random innocent person.
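The gap between the two probabilities can be made concrete by counting. The sketch below assumes a hypothetical town of 1,000 people containing exactly one culprit, who is certain to match, and a 10% match rate in the population; the town size and the function name are illustrative assumptions, chosen so that the result reproduces the 10% and 99% figures mentioned above.

```python
def innocent_given_match(population: int, match_rate: float, culprits: int = 1) -> float:
    """P(innocent | match) by direct counting, assuming every culprit matches."""
    matching = round(population * match_rate)   # people who share the trait
    innocent_matching = matching - culprits     # matching people who are not the culprit
    return innocent_matching / matching


# Hypothetical town: 1,000 residents, 10% share the blood type, one culprit.
p_match_given_innocent = 0.10  # roughly the chance that an innocent person matches
p_innocent_given_match = innocent_given_match(population=1000, match_rate=0.10)

print(f"P(match | innocent) ~ {p_match_given_innocent:.0%}")
print(f"P(innocent | match) = {p_innocent_given_match:.0%}")
```

Under these assumptions 100 residents match and 99 of them are innocent, so a match on its own leaves the probability of innocence at 99%, not 10%.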

However, the defense argued that the number of people from Los Angeles matching the sample could fill a football stadium and that the figure of 1 in 400 was useless.[14][15]

It would have been incorrect, and an example of the prosecutor's fallacy, to rely solely on the "1 in 400" figure to deduce that a given person matching the sample would be likely to be the culprit.
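Why the "1 in 400" figure alone is insufficient can be illustrated with a short calculation. This is a sketch under simplifying assumptions that are not part of the case record: a pool of $N$ possible culprits who are all equally likely a priori, exactly one true culprit who matches with certainty, and an independent 1-in-400 chance that any other person matches.

$$
\mathbb{E}[\text{innocent matches}] = \frac{N - 1}{400},
\qquad
P(\text{culprit} \mid \text{match}) = \frac{\tfrac{1}{N}}{\tfrac{1}{N} + \tfrac{N-1}{N}\cdot\tfrac{1}{400}} = \frac{1}{1 + \tfrac{N-1}{400}}
$$

Under these assumptions the probability shrinks as the pool grows, which is why the match has to be combined with other evidence that narrows the pool rather than being treated as decisive or dismissed as useless.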

The defense argued that there was only one woman murdered for every 2500 women who were subjected to spousal abuse, and that any history of Simpson being violent toward his wife was irrelevant to the trial.

The prosecution had expert witness Sir Roy Meadow, a professor and consultant paediatrician,[17] testify that the probability of two children in the same family dying from SIDS is about 1 in 73 million.

Given that two deaths had occurred, one of the following explanations must be true, and all of them are a priori extremely improbable:

It is unclear whether an estimate of the probability for the second possibility was ever proposed during the trial, or whether the comparison of the first two probabilities was understood to be the key estimate to make in the statistical analysis assessing the prosecution's case against the case for innocence.

Clark was convicted in 1999, resulting in a press release by the Royal Statistical Society which pointed out the mistakes.

Psychologists Daniel Kahneman and Amos Tversky attempted to explain this finding in terms of a simple rule or "heuristic" called representativeness.

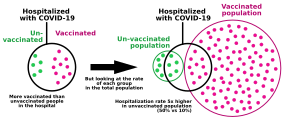

[30][31] Researchers in the heuristics-and-biases program have stressed empirical findings showing that people tend to ignore base rates and make inferences that violate certain norms of probabilistic reasoning, such as Bayes' theorem.

The conclusion drawn from this line of research was that human probabilistic thinking is fundamentally flawed and error-prone.[32]

Other researchers have emphasized the link between cognitive processes and information formats, arguing that such conclusions are not generally warranted.

The required inference is to estimate the (posterior) probability that a (randomly picked) driver is drunk, given that the breathalyzer test is positive.
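This posterior can be computed directly from Bayes' theorem. The sketch below uses the 5% false positive rate from the breathalyzer example; the 1-in-1,000 base rate of drunk driving and the assumption that the device never misses a drunk driver are illustrative assumptions, and `posterior` is a hypothetical helper name.

```python
def posterior(prior: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(drunk | positive test) via Bayes' theorem."""
    # Total probability of a positive result: true positives plus false positives.
    p_positive = prior * sensitivity + (1 - prior) * false_positive_rate
    return prior * sensitivity / p_positive


p_drunk_given_positive = posterior(
    prior=0.001,               # assumed: 1 in 1,000 drivers is drunk
    sensitivity=1.0,           # assumed: every drunk driver tests positive
    false_positive_rate=0.05,  # from the example: 5% of sober drivers test positive
)
print(f"P(drunk | positive) = {p_drunk_given_positive:.4f}")  # prints 0.0196
```

Under these assumptions a positive result implies only about a 2% chance that the driver is drunk, far below the 95% that base-rate neglect would suggest.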

Empirical studies show that people's inferences correspond more closely to Bayes' rule when information is presented this way, helping to overcome base-rate neglect in laypeople[34] and experts.[35]

As a consequence, organizations like the Cochrane Collaboration recommend using this kind of format for communicating health statistics.

[37] It has also been shown that graphical representations of natural frequencies (e.g., icon arrays, hypothetical outcome plots) help people to make better inferences.

This can be seen when using an alternative way of computing the required probability p(drunk|D):

$$p(\mathrm{drunk} \mid D) = \frac{N(\mathrm{drunk} \cap D)}{N(D)}$$

where N(drunk ∩ D) denotes the number of drivers that are drunk and get a positive breathalyzer result, and N(D) denotes the total number of cases with a positive breathalyzer result.
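The counting approach, which divides N(drunk ∩ D) by N(D), can be sketched with whole-number frequencies. The population of 100,000 drivers, the 1-in-1,000 rate of drunk driving, and the assumption that every drunk driver tests positive are illustrative assumptions; only the 5% false positive rate comes from the breathalyzer example.

```python
# Natural-frequency version of the breathalyzer problem (illustrative numbers).
drivers = 100_000                    # assumed population size
drunk = drivers // 1000              # assumed base rate: 1 in 1,000 -> 100 drivers
sober = drivers - drunk              # 99,900 drivers

true_positives = drunk               # assumed: no drunk driver is missed
false_positives = sober * 5 // 100   # 5% of sober drivers test positive -> 4,995

n_drunk_and_positive = true_positives             # N(drunk ∩ D)
n_positive = true_positives + false_positives     # N(D)

p_drunk_given_positive = n_drunk_and_positive / n_positive
print(f"{n_drunk_and_positive} of {n_positive} positive tests involve a drunk driver")
print(f"p(drunk | D) = {p_drunk_given_positive:.4f}")
```

Expressed as counts, the answer is read off directly: about 100 of roughly 5,100 positive results belong to drunk drivers, with no explicit use of Bayes' theorem.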