Binary logarithm

Historically, the first application of binary logarithms was in music theory, by Leonhard Euler: the binary logarithm of a frequency ratio of two musical tones gives the number of octaves by which the tones differ.

In computer science, they count the number of steps needed for binary search and related algorithms.

Other areas in which the binary logarithm is frequently used include combinatorics, bioinformatics, the design of sports tournaments, and photography.

IX.32 (on the factorization of powers of two) and IX.36 (half of the Euclid–Euler theorem, on the structure of even perfect numbers).

On this basis, Michael Stifel has been credited with publishing the first known table of binary logarithms in 1544.

[1][2] Earlier than Stifel, the 8th century Jain mathematician Virasena is credited with a precursor to the binary logarithm.

[4] The modern form of a binary logarithm, applying to any number (not just powers of two) was considered explicitly by Leonhard Euler in 1739.

As part of his work in this area, Euler published a table of binary logarithms of the integers from 1 to 8, to seven decimal digits of accuracy.

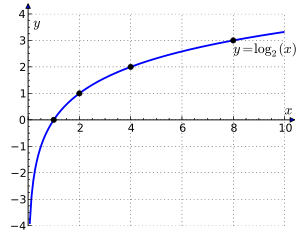

In mathematics, the binary logarithm of a number n is often written as log2 n.[10] However, several other notations for this function have been used or proposed, especially in application areas.

Some authors write the binary logarithm as lg n,[11][12] the notation listed in The Chicago Manual of Style.

[20] The DIN 1302 [de], ISO 31-11 and ISO 80000-2 standards recommend yet another notation, lb n. According to these standards, lg n should not be used for the binary logarithm, as it is instead reserved for the common logarithm log10 n.[23][24][25] The number of digits (bits) in the binary representation of a positive integer n is the integral part of 1 + log2 n, i.e.[12] In information theory, the definition of the amount of self-information and information entropy is often expressed with the binary logarithm, corresponding to making the bit the fundamental unit of information.

With these units, the Shannon–Hartley theorem expresses the information capacity of a channel as the binary logarithm of its signal-to-noise ratio, plus one.

For example, in binary search, the size of the problem to be solved is halved with each iteration, and therefore roughly log2 n iterations are needed to obtain a solution for a problem of size n.[34] Similarly, a perfectly balanced binary search tree containing n elements has height log2(n + 1) − 1.

[43] The occurrence of binary logarithms in these running times can be explained by reference to the master theorem for divide-and-conquer recurrences.

In bioinformatics, microarrays are used to measure how strongly different genes are expressed in a sample of biological material.

[44] Data points obtained in this way are often visualized as a scatterplot in which one or both of the coordinate axes are binary logarithms of intensity ratios, or in visualizations such as the MA plot and RA plot that rotate and scale these log ratio scatterplots.

[45] In music theory, the interval or perceptual difference between two tones is determined by the ratio of their frequencies.

Intervals coming from rational number ratios with small numerators and denominators are perceived as particularly euphonious.

The number of octaves by which two tones differ is the binary logarithm of their frequency ratio.

[46] To study tuning systems and other aspects of music theory that require finer distinctions between tones, it is helpful to have a measure of the size of an interval that is finer than an octave and is additive (as logarithms are) rather than multiplicative (as frequency ratios are).

Mathematically, given tones with frequencies f1 and f2, the number of cents in the interval from f1 to f2 is[46] The millioctave is defined in the same way, but with a multiplier of 1000 instead of 1200.

[48] In photography, exposure values are measured in terms of the binary logarithm of the amount of light reaching the film or sensor, in accordance with the Weber–Fechner law describing a logarithmic response of the human visual system to light.

These two forms of integer binary logarithm are related by this formula: The definition can be extended by defining

Extended in this way, this function is related to the number of leading zeros of the 32-bit unsigned binary representation of x, nlz(x).

The integer binary logarithm can be interpreted as the zero-based index of the most significant 1 bit in the input.

The fls and flsl functions in the Linux kernel[57] and in some versions of the libc software library also compute the binary logarithm (rounded up to an integer, plus one).

For a general positive real number, the binary logarithm may be computed in two parts.

[58] The algorithm for computing the fractional part can be described in pseudocode as follows: The result of this is expressed by the following recursive formulas, in which

In the special case where the fractional part in step 1 is found to be zero, this is a finite sequence terminating at some point.

Otherwise, it is an infinite series that converges according to the ratio test, since each term is strictly less than the previous one (since every mi > 0).