Chatbot

Although chatbots have existed since the late 1960s, the field gained widespread attention in the early 2020s due to the popularity of OpenAI's ChatGPT,[5][6] followed by alternatives such as Microsoft's Copilot and Google's Gemini.

[9] Companies spanning a wide range of industries have begun using the latest generative artificial intelligence technologies to power more advanced developments in such areas.

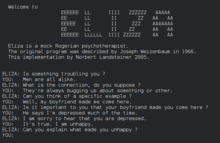

The notoriety of Turing's proposed test stimulated great interest in Joseph Weizenbaum's program ELIZA, published in 1966, which seemed to be able to fool users into believing that they were conversing with a real human.

ELIZA showed that such an illusion is surprisingly easy to generate because human judges are ready to give the benefit of the doubt when conversational responses are capable of being interpreted as "intelligent".
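The effect is easier to appreciate with a concrete sketch. The following is a minimal illustration of ELIZA-style keyword matching, not Weizenbaum's original script: the rules, the reflection table, and the fallback reply are all hypothetical and chosen only to show how a few patterns can produce replies that a reader may interpret as attentive.

```python
import re

# Hypothetical rules for illustration; these are NOT Weizenbaum's original script.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Content-free fallback used when no keyword rule applies.
DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Drop trailing punctuation and flip pronouns in the captured fragment."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in words)


def respond(text):
    """Return the reply for the first rule whose pattern occurs in the input."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I need a break from my job."))
```

The program has no model of the conversation at all. It only echoes part of the user's own sentence inside a canned frame, which is enough for a sympathetic reader to supply the apparent intelligence.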

This sort of usage holds the prospect of moving chatbot technology from Weizenbaum's "shelf ... reserved for curios" to that marked "genuinely useful computational methods".

While ELIZA and PARRY were used exclusively to simulate typed conversation, many chatbots now include other functional features, such as games and web searching abilities.

In 1984, a book called The Policeman's Beard is Half Constructed was published, allegedly written by the chatbot Racter (though the program as released would not have been capable of doing so).

The program was unable to process the news items subsequent to the surprise resignation of Cyrus Vance in April 1980, and the team constructed another chatbot simulating his successor, Edmund Muskie.

A.L.I.C.E. uses a markup language called AIML,[3] which is specific to its function as a conversational agent and has since been adopted by various other developers of so-called Alicebots.
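As a rough illustration of how AIML pairs input patterns with response templates, the sketch below loads two made-up categories and matches user input against them. It assumes only a tiny subset of the language (`category`, `pattern`, `template`, the `*` wildcard, and `<star/>`); the helper names `normalize`, `load_categories`, `render`, and `respond` are hypothetical, and capitalizing the captured words is a simplification of this sketch rather than AIML behavior.

```python
import re
import xml.etree.ElementTree as ET

# A tiny AIML-style document. The categories are made up for illustration.
AIML_SOURCE = """
<aiml>
  <category>
    <pattern>HELLO</pattern>
    <template>Hi there!</template>
  </category>
  <category>
    <pattern>MY NAME IS *</pattern>
    <template>Nice to meet you, <star/>.</template>
  </category>
</aiml>
"""


def normalize(text):
    """Uppercase the input and drop punctuation so it lines up with the patterns."""
    return " ".join(re.sub(r"[^A-Za-z0-9 ]", " ", text).upper().split())


def load_categories(source):
    """Parse each category into a (compiled regex, template element) pair."""
    categories = []
    for category in ET.fromstring(source.strip()).iter("category"):
        pattern = category.find("pattern").text.strip()
        # Translate the "*" wildcard into a regex group of one or more characters.
        parts = [r"(.+)" if word == "*" else re.escape(word) for word in pattern.split()]
        regex = re.compile("^" + " ".join(parts) + "$")
        categories.append((regex, category.find("template")))
    return categories


def render(template, captured):
    """Join the template text, replacing each <star/> with the captured words."""
    pieces = [template.text or ""]
    for child in template:
        if child.tag == "star":
            pieces.append(captured)
        pieces.append(child.tail or "")
    return "".join(pieces).strip()


def respond(text, categories):
    """Return the rendered template of the first matching category, or None."""
    normalized = normalize(text)
    for regex, template in categories:
        match = regex.match(normalized)
        if match:
            # Simplification: title-case the capture, since input was uppercased.
            captured = match.group(1).title() if match.groups() else ""
            return render(template, captured)
    return None


if __name__ == "__main__":
    categories = load_categories(AIML_SOURCE)
    print(respond("My name is Ada.", categories))
```

A full AIML interpreter supports many more elements and a priority order among patterns; this sketch simply takes the first category that matches.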

Some more recent chatbots also combine real-time learning with evolutionary algorithms that optimize their ability to communicate based on each conversation held.

Despite criticism of its accuracy and tendency to "hallucinate"—that is, to confidently output false information and even cite non-existent sources—ChatGPT has gained attention for its detailed responses and historical knowledge.

[38][39] The newer generation of chatbots includes IBM Watson-powered "Rocky", introduced in February 2017 by the New York City-based e-commerce company Rare Carat to provide information to prospective diamond buyers.

Overstock.com, for one, has reportedly launched a chatbot named Mila to attempt to automate certain processes when customer service employees request sick leave.

[43] Deep learning techniques can be incorporated into chatbot applications to allow them to map conversations between users and customer service agents, especially in social media.

[46] In 2016, Russia-based Tochka Bank launched a chatbot on Facebook for a range of financial services, including the ability to make payments.

[48] In 2023, the US-based National Eating Disorders Association replaced its human helpline staff with a chatbot but had to take it offline after users reported receiving harmful advice from it.

[52][53] A study suggested that physicians in the United States believed that chatbots would be most beneficial for scheduling doctor appointments, locating health clinics, or providing medication information.

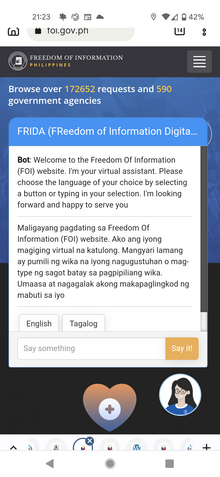

[56] In 2020, the Government of India launched a chatbot called MyGov Corona Helpdesk,[57] which worked through WhatsApp and helped people access information about the coronavirus (COVID-19) pandemic.

A mixed-methods 2019 study showed that people are still hesitant to use chatbots for their healthcare because of a poor understanding of the technology's complexity, the lack of empathy, and concerns about cybersecurity.

[70] In India, the Maharashtra state government has launched a chatbot for its Aaple Sarkar platform,[71] which provides conversational access to information about the public services it manages.

[78] Malicious chatbots are frequently used to fill chat rooms with spam and advertisements by mimicking human behavior and conversations, or to entice people into revealing personal information, such as bank account numbers.

[79] Tay, an AI chatbot designed to learn from previous interactions, caused major controversy after being targeted by internet trolls on Twitter.

Soon after its launch, the bot was exploited, and with its "repeat after me" capability, it started releasing racist, sexist, and controversial responses to Twitter users.

Therefore, human-seeming chatbots with well-crafted online identities could start scattering fake news that seems plausible, for instance making false claims during an election.

Storage of user data and past communication, which is highly valuable for training and developing chatbots, can also give rise to security threats.

But advanced chatbots like ChatGPT are also targeting high-paying, creative, and knowledge-based jobs, raising concerns about workforce disruption and quality trade-offs in favor of cost-cutting.

[89] Chatbots are increasingly used by small and medium enterprises to handle customer interactions efficiently, reducing reliance on large call centers and lowering operational costs.