Latent Dirichlet allocation

In the context of population genetics, LDA was proposed by J. K. Pritchard, M. Stephens and P. Donnelly in 2000.

[1][2] LDA was applied in machine learning by David Blei, Andrew Ng and Michael I. Jordan in 2003.

[3] In evolutionary biology and bio-medicine, the model is used to detect the presence of structured genetic variation in a group of individuals.

The model assumes that alleles carried by individuals under study have origin in various extant or past populations.

In association studies, detecting the presence of genetic structure is considered a necessary preliminary step to avoid confounding.

In clinical psychology research, LDA has been used to identify common themes of self-images experienced by young people in social situations.

This approach allows for the integration of text data as predictors in statistical regression analyses, improving the accuracy of mental health predictions.

One of the main advantages of SLDAX over traditional two-stage approaches is its ability to avoid biased estimates and incorrect standard errors, allowing for a more accurate analysis of psychological texts.

For instance, researchers have used LDA to investigate tweets discussing socially relevant topics, like the use of prescription drugs.

By analyzing these large text corpora, it is possible to uncover patterns and themes that might otherwise go unnoticed, offering valuable insights into public discourse and perception in real time.

[8][9] In the context of computational musicology, LDA has been used to discover tonal structures in different corpora.

A topic is considered to be a set of terms (i.e., individual words or phrases) that, taken together, suggest a shared theme.

For example, in a document collection related to pet animals, the terms dog, spaniel, beagle, golden retriever, puppy, bark, and woof would suggest a DOG_related theme, while the terms cat, siamese, Maine coon, tabby, manx, meow, purr, and kitten would suggest a CAT_related theme.

(Very common, so called stop words in a language – e.g., "the", "an", "that", "are", "is", etc., – would not discriminate between topics and are usually filtered out by pre-processing before LDA is performed.

While both methods are similar in principle and require the user to specify the number of topics to be discovered before the start of training (as with K-means clustering) LDA has the following advantages over pLSA:

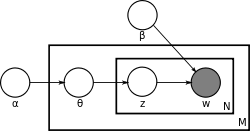

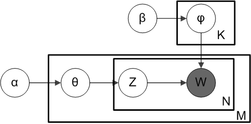

With plate notation, which is often used to represent probabilistic graphical models (PGMs), the dependencies among the many variables can be captured concisely.

As proposed in the original paper,[3] a sparse Dirichlet prior can be used to model the topic-word distribution, following the intuition that the probability distribution over words in a topic is skewed, so that only a small set of words have high probability.

as matrices created by decomposing the original document-word matrix that represents the corpus of documents being modeled.

The original paper by Pritchard et al.[1] used approximation of the posterior distribution by Monte Carlo simulation.

[12] The original ML paper used a variational Bayes approximation of the posterior distribution.

[3] A direct optimization of the likelihood with a block relaxation algorithm proves to be a fast alternative to MCMC.

It can be estimated by approximation of the posterior distribution with reversible-jump Markov chain Monte Carlo.

The update equation of the collapsed Gibbs sampler mentioned in the earlier section has a natural sparsity within it that can be taken advantage of.

Topic modeling is a classic solution to the problem of information retrieval using linked data and semantic web technology.

Another extension is the hierarchical LDA (hLDA),[19] where topics are joined together in a hierarchy by using the nested Chinese restaurant process, whose structure is learnt from data.

LDA can also be extended to a corpus in which a document includes two types of information (e.g., words and names), as in the LDA-dual model.

[20] Nonparametric extensions of LDA include the hierarchical Dirichlet process mixture model, which allows the number of topics to be unbounded and learnt from data.

—the probability of words under topics—to be that learned from the training set and use the same EM algorithm to infer

Blei argues that this step is cheating because you are essentially refitting the model to the new data.

In evolutionary biology, it is often natural to assume that the geographic locations of the individuals observed bring some information about their ancestry.