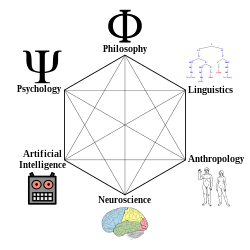

Cognitive science

Cognitive science has a prehistory traceable back to ancient Greek philosophical texts (see Plato's Meno and Aristotle's De Anima). Modern philosophers such as Descartes, David Hume, Immanuel Kant, Benedict de Spinoza, Nicolas Malebranche, Pierre Cabanis, Leibniz and John Locke rejected scholasticism, mostly without having read Aristotle, and worked with an entirely different set of tools and core concepts from those of the cognitive scientist.[citation needed]

The modern culture of cognitive science can be traced back to the early cyberneticists of the 1930s and 1940s, such as Warren McCulloch and Walter Pitts, who sought to understand the organizing principles of the mind.

The modern computer, or von Neumann machine, would play a central role in cognitive science, both as a metaphor for the mind and as a tool for investigation.[4]

The first instance of cognitive science experiments being done at an academic institution took place at MIT Sloan School of Management, established by J.C.R.

[citation needed] The term cognitive science was coined by Christopher Longuet-Higgins in his 1973 commentary on the Lighthill report, which concerned the then-current state of artificial intelligence research.

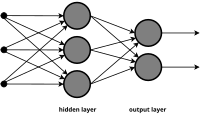

Under this point of view, often attributed to James McClelland and David Rumelhart, the mind could be characterized as a set of complex associations, represented as a layered network.

[13][14][15][16][17][18][19] Connectionism has proven useful for exploring computationally how cognition emerges in development and occurs in the human brain, and has provided alternatives to strictly domain-specific or domain-general approaches.
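The layered network described above can be illustrated with a minimal sketch. It assumes a small feedforward network with sigmoid units and random, untrained weights; the function names `make_layer` and `forward`, the layer sizes, and the input pattern are all hypothetical choices made for illustration, not a model taken from McClelland and Rumelhart.

```python
import math
import random


def sigmoid(x):
    """Squash a weighted sum into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def make_layer(n_inputs, n_units, rng):
    """Create one layer: each unit holds one weight per input plus a trailing bias."""
    return [
        [rng.uniform(-1.0, 1.0) for _ in range(n_inputs + 1)]
        for _ in range(n_units)
    ]


def forward(layers, inputs):
    """Propagate an input pattern through each layer in turn."""
    activations = list(inputs)
    for layer in layers:
        activations = [
            # Weighted sum of the previous layer's activations, plus the bias.
            sigmoid(sum(w * a for w, a in zip(unit[:-1], activations)) + unit[-1])
            for unit in layer
        ]
    return activations


# Hypothetical sizes: 4 input units, 3 hidden units, 2 output units.
rng = random.Random(0)
network = [make_layer(4, 3, rng), make_layer(3, 2, rng)]
output = forward(network, [1.0, 0.0, 1.0, 0.0])
print(output)
```

In this sketch the "associations" are nothing more than the connection weights between units in adjacent layers. A connectionist model would adjust those weights through learning, which is omitted here.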

For example, scientists such as Jeff Elman, Liz Bates, and Annette Karmiloff-Smith have posited that networks in the brain emerge from the dynamic interaction between them and environmental input.

Another approach to measuring cognitive ability would be to study the firing of individual neurons while a person is trying to remember the phone number.

Classical cognitivists have largely de-emphasized or avoided social and cultural factors, embodiment, emotion, consciousness, animal cognition, and comparative and evolutionary psychologies.

With the newfound emphasis on information processing, the hallmark of psychological theory was no longer observable behavior but the modeling or recording of mental states.

There is some debate in the field as to whether the mind is best viewed as a huge array of small but individually feeble elements (i.e. neurons), or as a collection of higher-level structures such as symbols, schemes, plans, and rules.

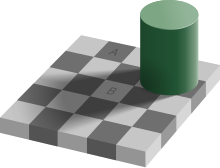

Experiments that support this metaphor include the dichotic listening task (Cherry, 1957) and studies of inattentional blindness (Mack and Rock, 1998).

[citation needed] The psychological construct of attention is sometimes confused with the concept of intentionality because of some semantic ambiguity in their definitions.

At the beginning of experimental research on attention, Wilhelm Wundt defined this term as "that psychical process, which is operative in the clear perception of the narrow region of the content of consciousness."[29]

His experiments showed the limits of attention in space and time, which were 3-6 letters during an exposure of 1/10 s.[29] Because the notion has developed within the framework of this original meaning over a hundred years of research, a definition of attention should account for the main features initially attributed to the term: it is a process of controlling thought that continues over time.

As the scope of attention develops, it expands the set of faculties on which the mind relies when it perceives, remembers, considers, and evaluates in making decisions.[32]

According to Latvian professor Sandra Mihailova and professor Igor Val Danilov, the more elements of a phenomenon (or phenomena) the mind can keep within the scope of attention simultaneously, the greater the number of reasonable combinations within that event it can form, which raises the probability of better understanding the features and particularities of the phenomenon (or phenomena).
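To give a rough sense of how quickly the number of possible combinations grows with the number of elements held in attention, the sketch below counts unordered groupings of two or more elements. Treating a "combination" as an unordered subset is an assumption made here for illustration, not a definition taken from the cited authors, and `count_groupings` is a hypothetical helper name.

```python
from math import comb


def count_groupings(n_elements):
    """Count unordered subsets of size two or more drawn from n_elements items."""
    return sum(comb(n_elements, k) for k in range(2, n_elements + 1))


# The sizes 3 to 6 are used only as sample values.
for n in range(3, 7):
    print(n, count_groupings(n))
```

Under this assumption, going from three elements to six raises the count from 4 to 57, so each additional element more than doubles the number of groupings available.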

A major driving force in the theoretical linguistic field is discovering the nature that language must have in the abstract in order to be learned in such a fashion.

All the above approaches tend either to be generalized into integrated computational models of a synthetic/abstract intelligence (i.e. a cognitive architecture), which can be applied to explaining and improving individual and social/organizational decision-making and reasoning,[49][50] or to focus on single simulative programs (or microtheories/"middle-range" theories) that model specific cognitive faculties (e.g. vision, language, categorization).

It has made progress in understanding how damage to particular areas of the brain affects cognition, and it has helped to uncover the root causes and results of specific dysfunctions, such as dyslexia, anopsia, and hemispatial neglect.

Within philosophy, some familiar names include Daniel Dennett, who writes from a computational systems perspective,[70] John Searle, known for his controversial Chinese room argument,[71] and Jerry Fodor, who advocates functionalism.[72]

Others include David Chalmers, who advocates dualism and is also known for articulating the hard problem of consciousness, and Douglas Hofstadter, famous for writing Gödel, Escher, Bach, which questions the nature of words and thought.

Popular names in the discipline of psychology include George A. Miller, James McClelland, Philip Johnson-Laird, Lawrence Barsalou, Vittorio Guidano, Howard Gardner and Steven Pinker.

Christopher Longuet-Higgins has defined it as "the construction of formal models of the processes (perceptual, intellectual, and linguistic) by which knowledge and understanding are achieved and communicated."

This requires integrative mechanisms explaining how the information processing that occurs simultaneously in spatially segregated (sub-)cortical areas in the brain is coordinated and bound together to give rise to coherent perceptual and symbolic representations.

In language cognition, the problem is to explain how semantic concepts and syntactic roles can be dynamically bound together, or integrated into complex cognitive representations such as systematic and compositional symbol structures and propositions, by means of a synchronization mechanism ("variable binding") (see also the "Symbolism vs. connectionism debate" in connectionism).

From the different perspectives noted above, this problem can be reduced to the issue of how organisms at the simple reflexes stage of development overcome the threshold of the environmental chaos of sensory stimuli: electromagnetic waves, chemical interactions, and pressure fluctuations.[86]

The so-called Primary Data Entry (PDE) thesis poses doubts about the ability of such an organism to overcome this cue threshold on its own.[87]

It argues that neither the temporal (phase) synchronization of neural activity based on dynamical self-organizing processes in neural networks, nor any dynamical binding or integration into a representation of the perceptual object by means of a synchronization mechanism, can help organisms distinguish the relevant cue (informative stimulus) needed to overcome this noise threshold.