Comparison sort

A comparison sort is a type of sorting algorithm that only reads the list elements through a single abstract comparison operation (often a "less than or equal to" operator or a three-way comparison) that determines which of two elements should occur first in the final sorted list.

The only requirement is that the operator forms a total preorder over the data, with: It is possible that both a ≤ b and b ≤ a; in this case either may come first in the sorted list.

This is the case when the order between a and b can be derived via the transitive closure of these prior comparison outcomes.

Hence in a time analysis the number of executed comparisons is used to determine upper bound estimates for the number of executed basic operations such as swaps or assignments.

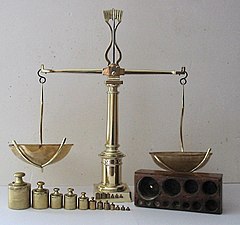

[1] A metaphor for thinking about comparison sorts is that someone has a set of unlabelled weights and a balance scale.

This is a consequence of the limited information available through comparisons alone — or, to put it differently, of the vague algebraic structure of totally ordered sets.

In this sense, mergesort, heapsort, and introsort are asymptotically optimal in terms of the number of comparisons they must perform, although this metric neglects other operations.

Non-comparison sorts (such as the examples discussed below) can achieve O(n) performance by using operations other than comparisons, allowing them to sidestep this lower bound (assuming elements are constant-sized).

The Ω(n log n) lower bound applies only to the case in which the input list can be in any possible order.

For example, reversing the result of the comparison function allows the list to be sorted in reverse; and one can sort a list of tuples in lexicographic order by just creating a comparison function that compares each part in sequence: Comparison sorts generally adapt more easily to complex orders such as the order of floating-point numbers.

When the keys form a small (compared to n) range, counting sort is an example algorithm that runs in linear time.

Given a list of distinct numbers (we can assume this because this is a worst-case analysis), there are

factorial permutations exactly one of which is the list in sorted order.

The sort algorithm must gain enough information from the comparisons to identify the correct permutation.

The above argument provides an absolute, rather than only asymptotic lower bound on the number of comparisons, namely

This lower bound is fairly good (it can be approached within a linear tolerance by a simple merge sort), but it is known to be inexact.

, but the minimal number of comparisons to sort 13 elements has been proved to be 34.

Determining the exact number of comparisons needed to sort a given number of entries is a computationally hard problem even for small n, and no simple formula for the solution is known.

A similar bound applies to the average number of comparisons.

Assuming that it is impossible to determine which order the input is in with fewer than log2(n!)

Since a comparison can give only two results, the maximum amount of information it provides is 1 bit.

To perform the sort, complete information is needed, so the remaining entropy must be 0.

To unearth what happens while analyzing the average case, the key is that what does 'average' refer to?

With some knowledge of information theory, the information-theoretic lower bound averages over the set of all permutations as a whole.

But any computer algorithms (under what are believed currently) must treat each permutation as an individual instance of the problem.

To search for the lower bound relating to the non-achievability of computers, we adopt the Decision tree model.

The minimum average length of a binary tree with a given number of leaves is achieved by a balanced full binary tree, because any other binary tree can have its path length reduced by moving a pair of leaves to a higher position.

With some careful calculations, for a balanced full binary tree with

In the case that multiple items may have the same key, there is no obvious statistical interpretation for the term "average case", so an argument like the above cannot be applied without making specific assumptions about the distribution of keys.

An adaptive sort takes advantage of this "presortedness" and runs more quickly on nearly-sorted inputs, often while still maintaining an