Computational complexity of matrix multiplication

Directly applying the mathematical definition of matrix multiplication gives an algorithm that requires n3 field operations to multiply two n × n matrices over that field (Θ(n3) in big O notation).

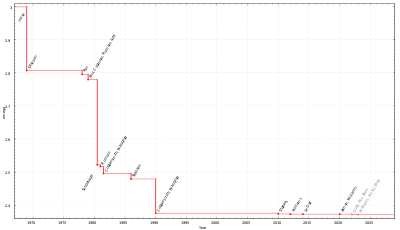

[1] The optimal number of field operations needed to multiply two square n × n matrices up to constant factors is still unknown.

As of January 2024[update], the best bound on the asymptotic complexity of a matrix multiplication algorithm is O(n2.371339).

[2] However, this and similar improvements to Strassen are not used in practice, because they are galactic algorithms: the constant coefficient hidden by the big O notation is so large that they are only worthwhile for matrices that are too large to handle on present-day computers.

[3][4] If A, B are n × n matrices over a field, then their product AB is also an n × n matrix over that field, defined entrywise as The simplest approach to computing the product of two n × n matrices A and B is to compute the arithmetic expressions coming from the definition of matrix multiplication.

, in a model of computation where field operations (addition and multiplication) take constant time (in practice, this is the case for floating point numbers, but not necessarily for integers).

Strassen's algorithm improves on naive matrix multiplication through a divide-and-conquer approach.

The numerical stability is reduced compared to the naive algorithm,[5] but it is faster in cases where n > 100 or so[6] and appears in several libraries, such as BLAS.

[8] It is very useful for large matrices over exact domains such as finite fields, where numerical stability is not an issue.

The matrix multiplication exponent, usually denoted ω, is the smallest real number for which any two

This notation is commonly used in algorithms research, so that algorithms using matrix multiplication as a subroutine have bounds on running time that can update as bounds on ω improve.

Whether ω = 2 is a major open question in theoretical computer science, and there is a line of research developing matrix multiplication algorithms to get improved bounds on ω.

The laser method has limitations to its power: Ambainis, Filmus and Le Gall prove that it cannot be used to show that ω < 2.3725 by analyzing higher and higher tensor powers of a certain identity of Coppersmith and Winograd and neither ω < 2.3078 for a wide class of variants of this approach.

[25] In 2022 Duan, Wu and Zhou devised a variant breaking the first of the two barriers with ω < 2.37188,[22] they do so by identifying a source of potential optimization in the laser method termed combination loss for which they compensate using an asymmetric version of the hashing method in the Coppersmith–Winograd algorithm.

[26][27] Henry Cohn, Robert Kleinberg, Balázs Szegedy and Chris Umans put methods such as the Strassen and Coppersmith–Winograd algorithms in an entirely different group-theoretic context, by utilising triples of subsets of finite groups which satisfy a disjointness property called the triple product property (TPP).

They also give conjectures that, if true, would imply that there are matrix multiplication algorithms with essentially quadratic complexity.

This implies that the optimal exponent of matrix multiplication is 2, which most researchers believe is indeed the case.

[4] One such conjecture is that families of wreath products of Abelian groups with symmetric groups realise families of subset triples with a simultaneous version of the TPP.

[28][29] Several of their conjectures have since been disproven by Blasiak, Cohn, Church, Grochow, Naslund, Sawin, and Umans using the Slice Rank method.

The best known lower bound for matrix-multiplication complexity is Ω(n2 log(n)), for bounded coefficient arithmetic circuits over the real or complex numbers, and is due to Ran Raz.

In other words, under the model of computation typically studied, there is no matrix multiplication algorithm that uses precisely O(nω) operations; there must be an additional factor of no(1).

A result in algebraic complexity states that multiplying matrices of size

requires the same number of arithmetic operations as multiplying matrices of size

Like the matrix multiplication exponent, the dual matrix multiplication exponent sometimes appears in the complexity of algorithms in numerical linear algebra and optimization.

The starting point of Strassen's proof is using block matrix multiplication.

Specifically, a matrix of even dimension 2n×2n may be partitioned in four n×n blocks Under this form, its inverse is provided that A and

It follows that, denoting respectively by I(n), M(n) and A(n) = n2 the number of operations needed for inverting, multiplying and adding n×n matrices, one has If

one gets eventually for some constant d. For matrices whose dimension is not a power of two, the same complexity is reached by increasing the dimension of the matrix to a power of two, by padding the matrix with rows and columns whose entries are 1 on the diagonal and 0 elsewhere.

This complexity is thus proved for almost all matrices, as a matrix with randomly chosen entries is invertible with probability one.

The argument applies also for the determinant, since it results from the block LU decomposition that Related to the problem of minimizing the number of arithmetic operations is minimizing the number of multiplications, which is typically a more costly operation than addition.