Matrix multiplication

Matrix multiplication is thus a basic tool of linear algebra, and as such has numerous applications in many areas of mathematics, as well as in applied mathematics, statistics, physics, economics, and engineering.

This article will use the following notational conventions: matrices are represented by capital letters in bold, e.g. A; vectors in lowercase bold, e.g. a; and entries of vectors and matrices are italic (they are numbers from a field), e.g. A and a.

Index notation is often the clearest way to express definitions, and is used as standard in the literature.

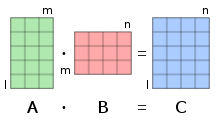

Thus the product AB is defined if and only if the number of columns in A equals the number of rows in B,[1] in this case n. In most scenarios, the entries are numbers, but they may be any kind of mathematical objects for which an addition and a multiplication are defined, that are associative, and such that the addition is commutative, and the multiplication is distributive with respect to the addition.

matrix Failed to parse (SVG (MathML can be enabled via browser plugin): Invalid response ("Math extension cannot connect to Restbase.")

Historically, matrix multiplication has been introduced for facilitating and clarifying computations in linear algebra.

This strong relationship between matrix multiplication and linear algebra remains fundamental in all mathematics, as well as in physics, chemistry, engineering and computer science.

A straightforward computation shows that the matrix of the composite map

) that defines the function composition is instanced here as a specific case of associativity of matrix product (see § Associativity below): Using a Cartesian coordinate system in a Euclidean plane, the rotation by an angle

[9] The general form of a system of linear equations is Using same notation as above, such a system is equivalent with the single matrix equation The dot product of two column vectors is the unique entry of the matrix product where

One special case where commutativity does occur is when D and E are two (square) diagonal matrices (of the same size); then DE = ED.

[10] Again, if the matrices are over a general ring rather than a field, the corresponding entries in each must also commute with each other for this to hold.

are obtained by left or right multiplying all entries of A by c. If the scalars have the commutative property, then

This extends naturally to the product of any number of matrices provided that the dimensions match.

That is, if A1, A2, ..., An are matrices such that the number of columns of Ai equals the number of rows of Ai + 1 for i = 1, ..., n – 1, then the product is defined and does not depend on the order of the multiplications, if the order of the matrices is kept fixed.

These properties may be proved by straightforward but complicated summation manipulations.

This result also follows from the fact that matrices represent linear maps.

Algorithms have been designed for choosing the best order of products; see Matrix chain multiplication.

When the number n of matrices increases, it has been shown that the choice of the best order has a complexity of

the set of n×n square matrices with entries in a ring R, which, in practice, is often a field.

Nevertheless, if R is commutative, AB and BA have the same trace, the same characteristic polynomial, and the same eigenvalues with the same multiplicities.

One may raise a square matrix to any nonnegative integer power multiplying it by itself repeatedly in the same way as for ordinary numbers.

That is, Computing the kth power of a matrix needs k – 1 times the time of a single matrix multiplication, if it is done with the trivial algorithm (repeated multiplication).

As this may be very time consuming, one generally prefers using exponentiation by squaring, which requires less than 2 log2 k matrix multiplications, and is therefore much more efficient.

Since the product of diagonal matrices amounts to simply multiplying corresponding diagonal elements together, the kth power of a diagonal matrix is obtained by raising the entries to the power k: The definition of matrix product requires that the entries belong to a semiring, and does not require multiplication of elements of the semiring to be commutative.

In many applications, the matrix elements belong to a field, although the tropical semiring is also a common choice for graph shortest path problems.

[15] Even in the case of matrices over fields, the product is not commutative in general, although it is associative and is distributive over matrix addition.

The objects are the natural numbers that measure the size of matrices, and the composition of morphisms is matrix multiplication.

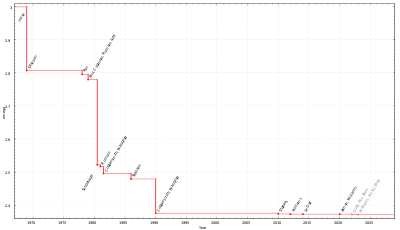

The matrix multiplication algorithm that results from the definition requires, in the worst case,

[17] As of January 2024[update], the best peer-reviewed matrix multiplication algorithm is by Virginia Vassilevska Williams, Yinzhan Xu, Zixuan Xu, and Renfei Zhou and has complexity O(n2.371552).