Conceptual clustering

Conceptual clustering is a machine learning paradigm for unsupervised classification that has been defined by Ryszard S. Michalski in 1980 (Fisher 1987, Michalski 1980) and developed mainly during the 1980s.

Most conceptual clustering methods are capable of generating hierarchical category structures; see Categorization for more information on hierarchy.

Conceptual clustering is closely related to formal concept analysis, decision tree learning, and mixture model learning.

Thus, a statistically strong grouping in the data may fail to be extracted by the learner if the prevailing concept description language is incapable of describing that particular regularity.

A fair number of algorithms have been proposed for conceptual clustering.

Some examples are given below: More general discussions and reviews of conceptual clustering can be found in the following publications: This section discusses the rudiments of the conceptual clustering algorithm COBWEB.

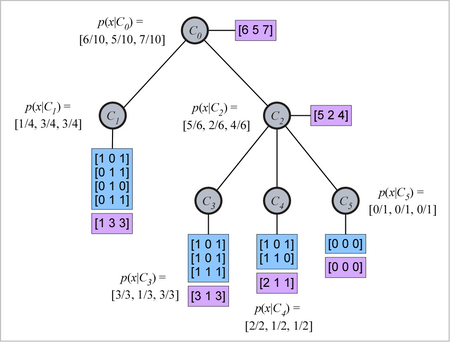

The COBWEB data structure is a hierarchy (tree) wherein each node represents a given concept.

The concept description is the category-conditional probability (likelihood) of the properties at the node.

is the root concept, which contains all ten objects in the data set.

Note that each parent node (relative superordinate concept) contains all the objects contained by its child nodes (relative subordinate concepts).

In Fisher's (1987) description of COBWEB, he indicates that only the total attribute counts (not conditional probabilities, and not object lists) are stored at the nodes.

Any probabilities are computed from the attribute counts as needed.

However, if constraints are placed on the probability ranges which concepts may represent, then a stronger language is obtained.

For example, we might permit only concepts wherein at least one probability differs from 0.5 by more than

Thus, under constraints such as these, we obtain something like a traditional concept language.

In Fisher's (1987) description of COBWEB, the measure he uses to evaluate the quality of the hierarchy is Gluck and Corter's (1985) category utility (CU) measure, which he re-derives in his paper.

It has previously been shown that the CU for feature-based classification is the same as the mutual information between the feature variables and the class variable (Gluck & Corter, 1985; Corter & Gluck, 1992), and since this measure is much better known, we proceed here with mutual information as the measure of category "goodness".

What we wish to evaluate is the overall utility of grouping the objects into a particular hierarchical categorization structure.

Given a set of possible classification structures, we need to determine whether one is better than another.