Consistent hashing

In contrast, in most traditional hash tables, a change in the number of array slots causes nearly all keys to be remapped because the mapping between the keys and the slots is defined by a modular operation.

[3] The term "consistent hashing" was introduced by David Karger et al. at MIT for use in distributed caching, particularly for the web.

[4] This academic paper from 1997 in Symposium on Theory of Computing introduced the term "consistent hashing" as a way of distributing requests among a changing population of web servers.

The authors mention linear hashing and its ability to handle sequential server addition and removal, while consistent hashing allows servers to be added and removed in an arbitrary order.

[1] The paper was later re-purposed to address technical challenge of keeping track of a file in peer-to-peer networks such as a distributed hash table.

[6][7] Teradata used this technique in their distributed database[citation needed], released in 1986, although they did not use this term.

Teradata still uses the concept of a hash table to fulfill exactly this purpose.

Akamai Technologies was founded in 1998 by the scientists Daniel Lewin and F. Thomson Leighton (co-authors of the article coining "consistent hashing").

In Akamai's content delivery network,[8] consistent hashing is used to balance the load within a cluster of servers, while a stable marriage algorithm is used to balance load across clusters.

[2] Consistent hashing has also been used to reduce the impact of partial system failures in large web applications to provide robust caching without incurring the system-wide fallout of a failure.

[9] Consistent hashing is also the cornerstone of distributed hash tables (DHTs), which employ hash values to partition a keyspace across a distributed set of nodes, then construct an overlay network of connected nodes that provide efficient node retrieval by key.

Rendezvous hashing, designed in 1996, is a simpler and more general technique [citation needed].

It achieves the goals of consistent hashing using the very different highest random weight (HRW) algorithm.

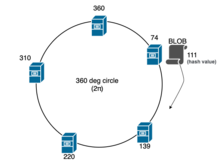

In the problem of load balancing, for example, when a BLOB has to be assigned to one of

changes), all the BLOBs in every server should be reassigned and moved due to rehashing, but this operation is expensive.

Consistent hashing was designed to avoid the problem of having to reassign every BLOB when a server is added or removed throughout the cluster.

The central idea is to use a hash function that maps both the BLOB and servers to a unit circle, usually

is hash of a BLOB or server's identifier, like IP address or UUID).

Each BLOB is then assigned to the next server that appears on the circle in clockwise order.

complexities respectively; and in every iteration, which happens in clockwise manner, an operation

In practice, a binary search tree (BST) is used to dynamically maintain the

within a cluster or hashring, and to find the successor or minimum within the BST, tree traversal is used.

[14] A number of extensions to the basic technique are needed for effectively using consistent hashing for load balancing in practice.

Another extension concerns a situation where a single BLOB gets "hot" and is accessed a large number of times and will have to be hosted in multiple servers.

In this situation, the BLOB may be assigned to multiple contiguous servers by traversing the unit circle in clockwise order.

A more complex practical consideration arises when two BLOBs are hashed near each other in the unit circle and both get "hot" at the same time.

In this case, both BLOBs will use the same set of contiguous servers in the unit circle.

This situation can be ameliorated by each BLOB choosing a different hash function for mapping servers to the unit circle.

[2] Rendezvous hashing, designed in 1996, is a simpler and more general technique, and permits fully distributed agreement on a set of

[citation needed] Known examples of consistent hashing use include: