Continuous-time Markov chain

Informally, a continuous-time Markov chain (CTMC) evolves as follows: the process makes a transition after the amount of time specified by the holding time, an exponential random variable whose rate depends on the current state.

When a transition is to be made, the process moves according to the jump chain, a discrete-time Markov chain with a stochastic transition matrix. Equivalently, by the property of competing exponentials, the CTMC changes state from state i according to the minimum of independent exponential random variables, one for each state it can move to, with rates determined by i and the destination state.

A CTMC satisfies the Markov property, that its future behavior depends only on its current state and not on its past behavior, due to the memorylessness of the exponential distribution and of discrete-time Markov chains.
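The holding-time and jump-chain description can be sketched as a short simulation. This is a minimal illustration, not a definitive implementation: the function name `simulate_ctmc`, the exit rates, and the jump matrix are all hypothetical values chosen for the example.

```python
import random

def simulate_ctmc(rates, jump, start, t_end, seed=0):
    """Simulate one CTMC path up to time t_end.

    rates[i] -- exit rate of state i (holding time in i is Exp(rates[i]))
    jump[i]  -- row i of the jump-chain stochastic matrix (zero diagonal)
    Returns a list of (jump_time, state) pairs, starting with (0.0, start).
    """
    rng = random.Random(seed)
    t, state = 0.0, start
    path = [(t, state)]
    while True:
        t += rng.expovariate(rates[state])  # exponential holding time
        if t >= t_end:
            return path
        # Move according to the jump chain, a discrete-time Markov chain.
        state = rng.choices(range(len(jump)), weights=jump[state])[0]
        path.append((t, state))

# Hypothetical three-state example; the numbers are illustrative only.
rates = [6.0, 12.0, 18.0]
jump = [[0.0, 0.5, 0.5],
        [1 / 3, 0.0, 2 / 3],
        [5 / 6, 1 / 6, 0.0]]
path = simulate_ctmc(rates, jump, start=0, t_end=2.0)
```

Because each holding time is drawn afresh from an exponential distribution and the next state depends only on the current one, the simulated path never needs to look at its own history.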

Equip the (countable) state space with the discrete metric, so that we can make sense of right continuity of functions from $[0, \infty)$ into the state space.

A continuous-time Markov chain is defined by an initial distribution on the state space and a transition-rate matrix $Q$.[1] Note that the row sums of $Q$ are 0.

A CTMC with given initial distribution and rate matrix can be defined in equivalent ways: via transition probabilities or via the jump chain and holding times.

The transition-rate matrix $Q$ is used to specify the dynamics of the Markov chain by means of generating a collection of transition matrices $P(t)$, $t \ge 0$.

Theorem: Existence of solution to Kolmogorov backward equations.
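The system referred to by the theorem is not reproduced here. As a hedged reminder, in the standard formulation (stated as an assumption about the intended statement), the Kolmogorov backward equations for a family of transition matrices $P(t)$ generated by a transition-rate matrix $Q$ read:

```latex
P(0) = I, \qquad P'(t) = Q\,P(t) \quad \text{for all } t \ge 0 .
```

Here $I$ is the identity matrix on the state space and the prime denotes the entrywise derivative with respect to $t$.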

We say that $Q$ is regular to mean that we do have uniqueness for the above system, i.e., that there exists exactly one solution.

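If the state space is finite, the system has exactly one solution, and so $Q$ is regular. Otherwise the state space is infinite, and there exist irregular transition-rate matrices on it.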

We will assume that $Q$ is regular from the beginning of the following subsection up through the end of this section, even though it is conventional[10][11][12] to not include this assumption.

The process is required to be almost surely right continuous (with respect to the discrete metric on the state space).

The jump chain of the process is a discrete-time Markov chain whose initial distribution is that of the process.

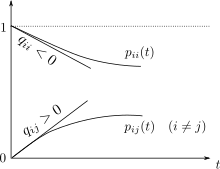

Write $P(t)$ for the matrix with entries $p_{ij}(t) = \Pr(X_t = j \mid X_0 = i)$.

Then the matrix $P(t)$ satisfies the forward equation, a first-order differential equation, $P'(t) = P(t)Q$, where the prime denotes differentiation with respect to $t$. With the initial condition $P(0) = I$, the solution to this equation is given by the matrix exponential $P(t) = e^{tQ}$. In a simple case, such as a CTMC on the state space {1,2}, this exponential can be computed explicitly.
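As a sketch of the two-state case, the following compares a numerically computed matrix exponential with the known closed form for a generator with rows $(-\alpha, \alpha)$ and $(\beta, -\beta)$. The rates `alpha` and `beta`, the time `t`, and the helper names are hypothetical. The exponential is approximated with a truncated Taylor series plus scaling and squaring, which is adequate for small matrices but is not a production-grade routine.

```python
import math

def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_exp(m, terms=20, squarings=10):
    """Approximate exp(m) by a truncated Taylor series with scaling and squaring."""
    n = len(m)
    scale = 2.0 ** squarings
    a = [[x / scale for x in row] for row in m]          # a = m / 2^squarings
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in mat_mul(term, a)]  # a^k / k!
        result = [[r + s for r, s in zip(rr, tr)]
                  for rr, tr in zip(result, term)]
    for _ in range(squarings):                            # undo the scaling
        result = mat_mul(result, result)
    return result

alpha, beta, t = 2.0, 3.0, 0.5       # hypothetical rates and time
Q = [[-alpha, alpha], [beta, -beta]]
P = mat_exp([[t * x for x in row] for row in Q])

# Closed form for the two-state chain, for comparison.
decay = math.exp(-(alpha + beta) * t)
total = alpha + beta
closed = [[(beta + alpha * decay) / total, (alpha - alpha * decay) / total],
          [(beta - beta * decay) / total, (alpha + beta * decay) / total]]
```

Each row of `P` sums to 1, as expected of a transition matrix, and as `t` grows both rows approach the stationary distribution $(\beta, \alpha)/(\alpha + \beta)$.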

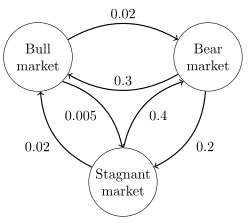

The row vector π may be found by solving with the constraint The image to the right describes a continuous-time Markov chain with state-space {Bull market, Bear market, Stagnant market} and transition-rate matrix The stationary distribution of this chain can be found by solving

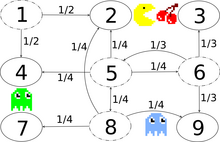

, subject to the constraint that elements must sum to 1 to obtain The image to the right describes a discrete-time Markov chain modeling Pac-Man with state-space {1,2,3,4,5,6,7,8,9}.

The player controls Pac-Man through a maze, eating pac-dots.

For convenience, the maze shall be a small 3x3-grid and the ghosts move randomly in horizontal and vertical directions.

Entries with probability zero are removed in the following transition-rate matrix:

Therefore, a unique stationary distribution exists and can be found by solving the linear stationarity equation for the row vector π, subject to the constraint that its elements sum to 1.

For a CTMC $X_t$, the time-reversed process is defined to be $\hat{X}_t = X_{T-t}$ for a fixed time $T$.

Kolmogorov's criterion states that the necessary and sufficient condition for a process to be reversible is that the product of transition rates around a closed loop must be the same in both directions.
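The loop condition can be checked by brute force on a very small state space. The sketch below enumerates every simple closed loop of length at least three and compares the product of rates in both directions. The function name and both example rate matrices are hypothetical, and the enumeration grows factorially, so this is only meant as an illustration.

```python
from itertools import permutations
from math import isclose, prod

def satisfies_kolmogorov(q, rel_tol=1e-12):
    """Check Kolmogorov's criterion on every simple closed loop of states.

    q[i][j] is the transition rate from i to j for i != j; the diagonal
    is ignored. Brute force: intended only for tiny state spaces.
    """
    n = len(q)
    for length in range(3, n + 1):
        for loop in permutations(range(n), length):
            if loop[0] != min(loop):   # skip rotations of the same loop
                continue
            edges = list(zip(loop, loop[1:] + loop[:1]))
            forward = prod(q[i][j] for i, j in edges)
            backward = prod(q[j][i] for i, j in edges)
            if not isclose(forward, backward, rel_tol=rel_tol, abs_tol=1e-15):
                return False
    return True

# Hypothetical rate matrices (rows sum to zero).
reversible_q = [[-12, 6, 6],
                [3, -6, 3],
                [2, 2, -4]]
cyclic_q = [[-1, 1, 0],
            [0, -1, 1],
            [1, 0, -1]]
```

In the first matrix the loop 0 → 1 → 2 → 0 has rate product 6·3·2 = 36 in both directions, so the criterion holds. In the second, the process can only travel one way around the loop, so the products are 1 and 0 and the criterion fails.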

One method of finding the stationary probability distribution, π, of an ergodic continuous-time Markov chain, Q, is by first finding its embedded Markov chain (EMC).

Strictly speaking, the EMC is a regular discrete-time Markov chain.

Each element of the one-step transition probability matrix of the EMC, $S$, is denoted by $s_{ij}$, and represents the conditional probability of transitioning from state $i$ into state $j$.

Once π is found, it must be normalized to a unit vector, so that its entries sum to 1.
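The EMC route can be sketched as follows, under the standard relations (assumed here, since the formulas are not reproduced above) that $s_{ij} = q_{ij} / (-q_{ii})$ for $i \ne j$ with $s_{ii} = 0$, that the EMC stationary vector $\varphi$ solves $\varphi S = \varphi$, and that $\pi_i$ is proportional to $\varphi_i / (-q_{ii})$. The function names and the example matrix `Q` are hypothetical. Exact rational arithmetic is used so the result can be checked without rounding error.

```python
from fractions import Fraction

def solve_stationary(p):
    """Solve phi P = phi with sum(phi) = 1 exactly by Gauss-Jordan elimination.

    Assumes the chain is irreducible, so the solution is unique.
    """
    n = len(p)
    # Row j encodes sum_i phi_i * (p[i][j] - delta_ij) = 0.
    a = [[Fraction(p[i][j]) - (1 if i == j else 0) for i in range(n)]
         + [Fraction(0)] for j in range(n)]
    a[-1] = [Fraction(1)] * n + [Fraction(1)]   # replace one equation by normalization
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        a[col] = [x / a[col][col] for x in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [a[i][n] for i in range(n)]

def stationary_via_emc(q):
    """Stationary distribution of a CTMC with rate matrix q, via its EMC."""
    n = len(q)
    exit_rates = [-Fraction(q[i][i]) for i in range(n)]
    s = [[Fraction(0) if i == j else Fraction(q[i][j]) / exit_rates[i]
          for j in range(n)] for i in range(n)]
    phi = solve_stationary(s)                         # EMC stationary vector
    weights = [phi[i] / exit_rates[i] for i in range(n)]
    total = sum(weights)
    return [w / total for w in weights]               # normalize to sum to 1

# Hypothetical rate matrix (rows sum to zero).
Q = [[-3, 2, 1],
     [4, -6, 2],
     [1, 1, -2]]
pi = stationary_via_emc(Q)   # (2/5, 1/5, 2/5) as Fractions
```

Dividing by the exit rates converts the fraction of jumps that land in a state into the fraction of time spent there, which is why a state with a small exit rate carries more weight in $\pi$ than in $\varphi$.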

Another discrete-time process that may be derived from a continuous-time Markov chain is a δ-skeleton: the (discrete-time) Markov chain formed by observing X(t) at intervals of δ units of time.

The random variables X(0), X(δ), X(2δ), ... give the sequence of states visited by the δ-skeleton.
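Extracting a δ-skeleton from a recorded path can be sketched as below. The path representation, a time-sorted list of `(jump_time, state)` pairs starting at time 0, the function name, and the example values are all assumptions made for illustration. Right continuity means that at an exact jump time the process is already in its new state.

```python
from bisect import bisect_right

def delta_skeleton(path, delta, n_steps):
    """Return [X(0), X(delta), ..., X(n_steps * delta)].

    path is a list of (jump_time, state) pairs sorted by time, with
    path[0][0] == 0.0; the path is piecewise constant and right continuous.
    """
    times = [t for t, _ in path]
    # bisect_right finds the last jump at or before each observation time.
    return [path[bisect_right(times, k * delta) - 1][1]
            for k in range(n_steps + 1)]

# Hypothetical recorded path.
path = [(0.0, "a"), (0.7, "b"), (1.0, "c"), (2.6, "a")]
skeleton = delta_skeleton(path, delta=0.5, n_steps=6)
# skeleton == ["a", "a", "c", "c", "c", "c", "a"]
```

The visit to state `"b"` between times 0.7 and 1.0 does not appear in the skeleton, because no observation time falls inside that interval. A δ-skeleton can therefore miss transitions of the underlying chain.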