Covariance matrix

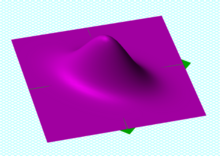

Intuitively, the covariance matrix generalizes the notion of variance to multiple dimensions.

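As an example, the variation in a collection of random points in two-dimensional space cannot be characterized fully by a single number, nor would the variances in the $x$ and $y$ directions contain all of the necessary information; a $2 \times 2$ matrix would be necessary to fully characterize the two-dimensional variation.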

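If the entries in the column vector $\mathbf{X} = (X_1, X_2, \ldots, X_n)^\mathsf{T}$ are random variables, each with finite variance and expected value, then the covariance matrix $\operatorname{K}_{\mathbf{X}\mathbf{X}}$ is the matrix whose $(i, j)$ entry is the covariance

$$\operatorname{K}_{X_i X_j} = \operatorname{cov}[X_i, X_j] = \operatorname{E}\big[(X_i - \operatorname{E}[X_i])(X_j - \operatorname{E}[X_j])\big].$$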

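Some statisticians, following the probabilist William Feller in his two-volume book An Introduction to Probability Theory and Its Applications,[2] call the matrix $\operatorname{K}_{\mathbf{X}\mathbf{X}}$ the variance of the random vector $\mathbf{X}$, because it is the natural generalization to higher dimensions of the one-dimensional variance.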

The matrix $\operatorname{K}_{\mathbf{X}\mathbf{X}}$ is also often called the variance-covariance matrix, since its diagonal terms are in fact variances.
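The following is a minimal sketch of the definition. It assumes a hypothetical two-dimensional discrete random vector that takes four values with given probabilities, and computes the covariance matrix exactly as $\operatorname{E}[(\mathbf{X}-\operatorname{E}[\mathbf{X}])(\mathbf{X}-\operatorname{E}[\mathbf{X}])^\mathsf{T}]$. The support points, probabilities and the helper name `covariance_matrix` are illustrative assumptions. The last line checks that the diagonal entries coincide with the variances of the components.

```python
import numpy as np

# Hypothetical discrete random vector X = (X1, X2): four support points (rows)
# and their probabilities. These numbers are purely illustrative.
support = np.array([[0.0, 1.0],
                    [1.0, 3.0],
                    [2.0, 2.0],
                    [3.0, 6.0]])
probs = np.array([0.1, 0.4, 0.3, 0.2])

def covariance_matrix(points, p):
    """Exact covariance matrix E[(X - E[X])(X - E[X])^T] of a discrete distribution."""
    mean = p @ points                 # E[X], shape (n,)
    centered = points - mean          # X - E[X] at each support point
    # Probability-weighted sum of outer products: sum_k p_k * c_k c_k^T
    return (centered * p[:, None]).T @ centered

K = covariance_matrix(support, probs)
print(K)  # approximately [[0.84, 1.04], [1.04, 2.49]]

# Diagonal entries are the variances of the individual components.
var_x1 = probs @ (support[:, 0] - probs @ support[:, 0]) ** 2
var_x2 = probs @ (support[:, 1] - probs @ support[:, 1]) ** 2
print(np.allclose(np.diag(K), [var_x1, var_x2]))  # True
```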

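The matrix of regression coefficients is often given in transpose form, $\operatorname{K}_{\mathbf{X}\mathbf{X}}^{-1}\operatorname{K}_{\mathbf{X}\mathbf{Y}}$, suitable for post-multiplying a row vector of explanatory variables $\mathbf{X}^\mathsf{T}$ rather than pre-multiplying a column vector $\mathbf{X}$.

In this form, the coefficients correspond to those obtained by inverting the matrix of the normal equations of ordinary least squares (OLS).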
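The sketch below illustrates the correspondence with OLS on synthetic data (an assumption). Here $\operatorname{K}_{\mathbf{X}\mathbf{X}}$ is the sample covariance matrix of the explanatory variables and $\operatorname{K}_{\mathbf{X}\mathbf{Y}}$ their sample cross-covariance with the responses. The code computes $\operatorname{K}_{\mathbf{X}\mathbf{X}}^{-1}\operatorname{K}_{\mathbf{X}\mathbf{Y}}$ and compares it with a least-squares fit from `numpy.linalg.lstsq` on centred data. The intercept is absorbed by the centring.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (illustrative assumption): 500 observations of
# 3 explanatory variables X and 2 response variables Y.
n_obs = 500
X = rng.normal(size=(n_obs, 3))
B_true = np.array([[1.0, -2.0],
                   [0.5,  0.0],
                   [-1.5, 3.0]])
Y = X @ B_true + 0.1 * rng.normal(size=(n_obs, 2))

# Sample covariance blocks (rows are observations, so rowvar=False).
full = np.cov(np.hstack([X, Y]), rowvar=False)
K_xx = full[:3, :3]
K_xy = full[:3, 3:]

# Regression coefficients in transpose form, K_xx^{-1} K_xy,
# which post-multiply a row vector of explanatory variables.
B_cov = np.linalg.solve(K_xx, K_xy)

# Ordinary least squares on centred data yields the same coefficients.
Xc = X - X.mean(axis=0)
Yc = Y - Y.mean(axis=0)
B_ols, *_ = np.linalg.lstsq(Xc, Yc, rcond=None)

print(np.allclose(B_cov, B_ols))  # True
```

The two results agree because both reduce to $(\mathbf{X}_c^\mathsf{T}\mathbf{X}_c)^{-1}\mathbf{X}_c^\mathsf{T}\mathbf{Y}_c$. The common factor $1/(n-1)$ of the sample covariances cancels.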

A covariance matrix with all non-zero elements tells us that every pair of the individual random variables is correlated, and hence that no two of them are independent.

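Treated as a bilinear form, the covariance matrix $\boldsymbol\Sigma$ of $\mathbf{X}$ yields the covariance between the two linear combinations $\mathbf{d}^\mathsf{T}\mathbf{X}$ and $\mathbf{c}^\mathsf{T}\mathbf{X}$:

$$\mathbf{d}^\mathsf{T}\boldsymbol\Sigma\,\mathbf{c} = \operatorname{cov}(\mathbf{d}^\mathsf{T}\mathbf{X}, \mathbf{c}^\mathsf{T}\mathbf{X}).$$

The variance of a linear combination is then $\mathbf{c}^\mathsf{T}\boldsymbol\Sigma\,\mathbf{c}$, its covariance with itself.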
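The bilinear-form identity also holds exactly for the sample covariance matrix, because sample covariance is itself bilinear. The sketch below checks it numerically. The data-generating matrix `A` and the coefficient vectors `c` and `d` are arbitrary illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical 3-dimensional data: 1000 correlated samples (rows).
A = np.array([[1.0, 0.0, 0.0],
              [0.8, 0.6, 0.0],
              [-0.3, 0.4, 0.9]])
X = rng.normal(size=(1000, 3)) @ A.T

Sigma = np.cov(X, rowvar=False)       # sample covariance matrix of X

# Two arbitrary coefficient vectors defining linear combinations.
c = np.array([1.0, -1.0, 2.0])
d = np.array([0.5, 0.0, -1.0])

lhs = d @ Sigma @ c                   # bilinear form d^T Sigma c
rhs = np.cov(X @ d, X @ c)[0, 1]      # sample covariance of d^T X and c^T X
print(np.isclose(lhs, rhs))           # True

# Setting d = c gives the (sample) variance of the combination c^T X.
print(np.isclose(c @ Sigma @ c, np.var(X @ c, ddof=1)))  # True
```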

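Similarly, the (pseudo-)inverse covariance matrix $\boldsymbol\Sigma^{+}$ provides the quadratic form $(\mathbf{c} - \boldsymbol\mu)^\mathsf{T}\boldsymbol\Sigma^{+}(\mathbf{c} - \boldsymbol\mu)$, where $\boldsymbol\mu = \operatorname{E}[\mathbf{X}]$. This form induces the Mahalanobis distance, a measure of the "unlikelihood" of $\mathbf{c}$.[citation needed]

From basic property 4 above, let $\mathbf{b}$ be an $(n \times 1)$ real-valued vector; then

$$\operatorname{var}(\mathbf{b}^\mathsf{T}\mathbf{X}) = \mathbf{b}^\mathsf{T}\operatorname{var}(\mathbf{X})\,\mathbf{b}.$$

This quantity must always be nonnegative, since it is the variance of a real-valued random variable. A covariance matrix is therefore always a positive-semidefinite matrix.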
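The following is a minimal sketch of both ideas, assuming an illustrative mean and a diagonal covariance matrix. The Mahalanobis distance is computed with the Moore-Penrose pseudo-inverse `numpy.linalg.pinv`, so singular covariance matrices are also accepted. Positive semidefiniteness is checked through the eigenvalues returned by `numpy.linalg.eigh`'s companion `eigvalsh`. The helper names and the tolerance are hypothetical choices.

```python
import numpy as np

def mahalanobis(c, mu, Sigma):
    """Mahalanobis distance sqrt((c - mu)^T Sigma^+ (c - mu)).

    Uses the Moore-Penrose pseudo-inverse, so singular covariance
    matrices are handled as well.
    """
    diff = np.asarray(c, dtype=float) - np.asarray(mu, dtype=float)
    return float(np.sqrt(diff @ np.linalg.pinv(Sigma) @ diff))

def is_positive_semidefinite(Sigma, tol=1e-10):
    """True if all eigenvalues of the symmetric matrix are >= -tol,
    i.e. b^T Sigma b >= 0 for every real vector b (up to rounding)."""
    return bool(np.all(np.linalg.eigvalsh(Sigma) >= -tol))

# Illustrative (assumed) mean and covariance: variance 4 along x, 1 along y.
mu = np.array([0.0, 0.0])
Sigma = np.array([[4.0, 0.0],
                  [0.0, 1.0]])

print(mahalanobis([2.0, 0.0], mu, Sigma))  # ≈ 1.0
print(mahalanobis([0.0, 2.0], mu, Sigma))  # ≈ 2.0
print(is_positive_semidefinite(Sigma))     # True
```

Both test points are at Euclidean distance 2 from the mean. The one along the low-variance $y$ direction is twice as far in the Mahalanobis sense, which is why it counts as more "unlikely".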

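The variance of a complex scalar-valued random variable $Z$ with expected value $\mu_Z$ is conventionally defined using complex conjugation:

$$\operatorname{var}(Z) = \operatorname{E}\left[(Z - \mu_Z)\overline{(Z - \mu_Z)}\right],$$

where the complex conjugate of a complex number $z$ is denoted $\overline{z}$. Thus the variance of a complex random variable is a real number.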

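If $\mathbf{Z} = (Z_1, \ldots, Z_n)^\mathsf{T}$ is a column vector of complex-valued random variables, then the conjugate transpose $\mathbf{Z}^\mathsf{H}$ is formed by both transposing and conjugating. The expected value of the product of the centred vector with its conjugate transpose is a square matrix, called the covariance matrix:

$$\operatorname{K}_{\mathbf{Z}\mathbf{Z}} = \operatorname{cov}[\mathbf{Z}, \mathbf{Z}] = \operatorname{E}\left[(\mathbf{Z} - \boldsymbol\mu_{\mathbf{Z}})(\mathbf{Z} - \boldsymbol\mu_{\mathbf{Z}})^\mathsf{H}\right],$$

where $\boldsymbol\mu_{\mathbf{Z}} = \operatorname{E}[\mathbf{Z}]$. This matrix is Hermitian, and its diagonal entries are the real variances of the components.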
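The sketch below estimates the complex covariance matrix from samples of a hypothetical complex random vector. The data and the induced correlation are illustrative assumptions. It relies on the fact that `numpy.cov` conjugates complex input, so its result matches the explicit $(\mathbf{Z}-\boldsymbol\mu)(\mathbf{Z}-\boldsymbol\mu)^\mathsf{H}$ estimate with Bessel's correction.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical complex random vector Z with 3 components, 2000 samples.
# Rows are variables, columns are observations (NumPy's default layout).
n_vars, n_obs = 3, 2000
Z = rng.normal(size=(n_vars, n_obs)) + 1j * rng.normal(size=(n_vars, n_obs))
Z[1] += 0.5 * Z[0]          # introduce correlation between components 0 and 1

# Sample version of E[(Z - mu)(Z - mu)^H] with Bessel's correction.
Zc = Z - Z.mean(axis=1, keepdims=True)
K_zz = Zc @ Zc.conj().T / (n_obs - 1)

print(np.allclose(K_zz, np.cov(Z)))          # True: np.cov conjugates complex input
print(np.allclose(K_zz, K_zz.conj().T))      # True: the matrix is Hermitian
print(np.allclose(np.diag(K_zz).imag, 0.0))  # True: the variances are real
```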

From the covariance matrix, a transformation matrix can be derived, called a whitening transformation, that allows one to completely decorrelate the data[10] or, from a different point of view, to find an optimal basis for representing the data in a compact way[citation needed] (see Rayleigh quotient for a formal proof and additional properties of covariance matrices).
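One common choice of whitening matrix is the symmetric inverse square root $\boldsymbol\Sigma^{-1/2}$, sometimes called ZCA or Mahalanobis whitening. Other valid choices exist, for example one based on the Cholesky factor. The sketch below builds $\boldsymbol\Sigma^{-1/2}$ from the eigendecomposition of a sample covariance matrix. It assumes $\boldsymbol\Sigma$ is positive definite and uses hypothetical correlated Gaussian data, then checks that the transformed data have identity covariance.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical correlated 2-D data: 5000 samples (rows).
X = rng.multivariate_normal(mean=[1.0, -2.0],
                            cov=[[3.0, 1.2], [1.2, 1.0]],
                            size=5000)

Sigma = np.cov(X, rowvar=False)

# Symmetric inverse square root Sigma^{-1/2} from Sigma = V diag(lam) V^T
# (assumes every eigenvalue lam is strictly positive).
lam, V = np.linalg.eigh(Sigma)
W = V @ np.diag(1.0 / np.sqrt(lam)) @ V.T

# Whitened data: centre, then apply W to every sample.
Xw = (X - X.mean(axis=0)) @ W.T

print(np.allclose(np.cov(Xw, rowvar=False), np.eye(2)))  # True
```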

Finding such an optimal basis is called principal component analysis (PCA), also known as the Karhunen–Loève transform (KL transform).
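The following minimal PCA sketch eigendecomposes the sample covariance matrix and keeps the eigenvectors with the largest eigenvalues as the new basis. It then projects the centred data onto them. The function name `pca` and the synthetic data, which are 3-D points scattered tightly around a line, are illustrative assumptions.

```python
import numpy as np

def pca(X, k):
    """Project rows of X onto the k leading eigenvectors of its sample covariance.

    Returns (scores, components, explained_variance); components are columns.
    """
    Xc = X - X.mean(axis=0)
    Sigma = np.cov(Xc, rowvar=False)
    lam, V = np.linalg.eigh(Sigma)       # eigenvalues in ascending order
    order = np.argsort(lam)[::-1]        # reorder by decreasing variance
    lam, V = lam[order], V[:, order]
    components = V[:, :k]
    return Xc @ components, components, lam[:k]

rng = np.random.default_rng(4)
# Hypothetical data: 3-D points lying close to a 1-D line.
t = rng.normal(size=(1000, 1))
X = t @ np.array([[2.0, 1.0, -1.0]]) + 0.05 * rng.normal(size=(1000, 3))

scores, comps, var = pca(X, k=1)
print(comps[:, 0])  # close to ±[2, 1, -1] / sqrt(6)
print(var)          # close to 6, the variance along the line
```

A single component captures almost all of the variation here, so the one-dimensional `scores` give a compact representation of the data.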

The covariance matrix plays a key role in financial economics, especially in portfolio theory and its mutual fund separation theorem and in the capital asset pricing model.

The matrix of covariances among various assets' returns is used to determine, under certain assumptions, the relative amounts of different assets that investors should (in a normative analysis) or are predicted to (in a positive analysis) choose to hold in a context of diversification.
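As one concrete instance, consider the global minimum-variance portfolio. It minimizes $\mathbf{w}^\mathsf{T}\boldsymbol\Sigma\mathbf{w}$ subject to the weights summing to one, with short sales allowed. Its closed-form solution is $\mathbf{w} = \boldsymbol\Sigma^{-1}\mathbf{1} / (\mathbf{1}^\mathsf{T}\boldsymbol\Sigma^{-1}\mathbf{1})$. This is only a sketch of how the covariance matrix enters such a choice, not the full mutual fund separation analysis. The three-asset covariance matrix and the helper name are hypothetical.

```python
import numpy as np

def min_variance_weights(Sigma):
    """Weights of the global minimum-variance, fully invested portfolio.

    Solves  min_w w^T Sigma w  subject to  sum(w) = 1  (short sales allowed);
    the closed-form solution is w = Sigma^{-1} 1 / (1^T Sigma^{-1} 1).
    """
    ones = np.ones(Sigma.shape[0])
    x = np.linalg.solve(Sigma, ones)  # Sigma^{-1} 1 without forming the inverse
    return x / x.sum()

# Hypothetical covariance matrix of annual returns for three assets.
Sigma = np.array([[0.04, 0.006, 0.01],
                  [0.006, 0.09, 0.012],
                  [0.01, 0.012, 0.16]])

w = min_variance_weights(Sigma)
print(w, w.sum())     # weights, which sum to 1
print(w @ Sigma @ w)  # portfolio variance w^T Sigma w
```

The resulting portfolio variance is no larger than that of any single asset, which illustrates the diversification effect captured by the off-diagonal covariances.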

The evolution strategy, a particular family of randomized search heuristics, fundamentally relies on a covariance matrix in its mechanism.

The characteristic mutation operator draws the update step from a multivariate normal distribution using an evolving covariance matrix.
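The mutation step alone can be sketched as follows. A step of the form $\sigma \mathbf{L}\mathbf{z}$, with $\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ and $\mathbf{C} = \mathbf{L}\mathbf{L}^\mathsf{T}$ a Cholesky factorization, is distributed as $\mathcal{N}(\mathbf{0}, \sigma^2\mathbf{C})$. In an actual evolution strategy, $\mathbf{C}$ and $\sigma$ are adapted from generation to generation. That adaptation is omitted here, and the fixed covariance matrix, step size and helper name `mutate` are hypothetical.

```python
import numpy as np

def mutate(parent, sigma, C, rng, n_offspring):
    """Draw offspring x = parent + sigma * L z with z ~ N(0, I) and C = L L^T.

    Each mutation step is therefore distributed as N(0, sigma^2 C).
    """
    L = np.linalg.cholesky(C)            # lower-triangular factor of C
    z = rng.standard_normal((n_offspring, len(parent)))
    return parent + sigma * z @ L.T      # row i is parent + sigma * (L z_i)^T

rng = np.random.default_rng(5)
parent = np.array([1.0, 1.0])
C = np.array([[1.0, 0.9],
              [0.9, 1.0]])               # hypothetical current covariance matrix
offspring = mutate(parent, sigma=0.5, C=C, rng=rng, n_offspring=20000)

steps = offspring - parent
print(np.cov(steps, rowvar=False))       # close to 0.25 * C
```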

There is a formal proof that the evolution strategy's covariance matrix adapts to the inverse of the Hessian matrix of the search landscape, up to a scalar factor and small random fluctuations (proven for a single-parent strategy and a static model, as the population size increases, relying on the quadratic approximation).[11]

Intuitively, this result is supported by the rationale that the optimal covariance distribution can offer mutation steps whose equidensity probability contours match the level sets of the landscape, and so they maximize the progress rate.

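In practice, the vectors $\mathbf{X}$ and $\mathbf{Y}$ are acquired experimentally as $n$ samples $\mathbf{X}_1, \ldots, \mathbf{X}_n$, where $X_j(t_i)$ is the $i$-th discrete value in sample $j$ of the random function $X(t)$.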

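The expected values needed in the covariance formula are estimated using the sample mean, e.g.

$$\langle \mathbf{X} \rangle = \frac{1}{n}\sum_{j=1}^{n} \mathbf{X}_j,$$

and the covariance matrix is estimated by the sample covariance matrix

$$\operatorname{cov}(\mathbf{X}, \mathbf{Y}) \approx \langle \mathbf{X}\mathbf{Y}^\mathsf{T} \rangle - \langle \mathbf{X} \rangle \langle \mathbf{Y}^\mathsf{T} \rangle,$$

where the angular brackets denote sample averaging as before, except that Bessel's correction should be made to avoid bias.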
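The following is a minimal sketch of a Bessel-corrected covariance map. Each column of the input arrays is one sample, for example one single-shot spectrum. The estimate $\frac{1}{n-1}\sum_j (\mathbf{X}_j - \langle\mathbf{X}\rangle)(\mathbf{Y}_j - \langle\mathbf{Y}\rangle)^\mathsf{T}$ equals $\frac{n}{n-1}$ times the uncorrected $\langle \mathbf{X}\mathbf{Y}^\mathsf{T} \rangle - \langle \mathbf{X} \rangle \langle \mathbf{Y}^\mathsf{T} \rangle$. The random "spectra", the planted correlation between two bins, and the helper name are illustrative assumptions.

```python
import numpy as np

def covariance_map(X, Y):
    """Bessel-corrected sample cross-covariance cov(X, Y).

    X: array of shape (p, n), Y: array of shape (q, n); column j holds
    sample j (e.g. the j-th single-shot spectrum). Returns a (p, q) map.
    """
    n = X.shape[1]
    Xc = X - X.mean(axis=1, keepdims=True)
    Yc = Y - Y.mean(axis=1, keepdims=True)
    return Xc @ Yc.T / (n - 1)

rng = np.random.default_rng(6)
# Hypothetical spectra: 50 time bins, 1000 shots.
X = rng.normal(size=(50, 1000))
X[30] += X[10]        # bins 10 and 30 now fluctuate together

cmap = covariance_map(X, X)
# np.cov(m, y) stacks m and y; its off-diagonal block is cov(X, Y).
print(np.allclose(cmap, np.cov(X, X)[:50, 50:]))  # True
print(cmap[10, 30] > 0.5)                          # True: a "hill" on the map
```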

Figure 1 illustrates how a partial covariance map is constructed, using the example of an experiment performed at the FLASH free-electron laser in Hamburg.

The random function $X(t)$ is the time-of-flight spectrum of ions from a Coulomb explosion of nitrogen molecules multiply ionised by a laser pulse.

Since only a few hundred molecules are ionised at each laser pulse, the single-shot spectra fluctuate strongly.

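Averaging many single-shot spectra gives the average spectrum $\langle \mathbf{X} \rangle$. This average reveals several nitrogen ions in the form of peaks broadened by their kinetic energy, but finding the correlations between the ionisation stages and the ion momenta requires calculating a covariance map.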

In practice, it is often sufficient to overcompensate the partial covariance correction, as panel f shows. There, interesting correlations of ion momenta become clearly visible as straight lines centred on the ionisation stages of atomic nitrogen.
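The partial covariance removes correlations induced by a common fluctuating parameter $\mathbf{I}$, such as the laser pulse intensity. It uses the standard definition $\operatorname{pcov}(\mathbf{X},\mathbf{Y}\mid\mathbf{I}) = \operatorname{cov}(\mathbf{X},\mathbf{Y}) - \operatorname{cov}(\mathbf{X},\mathbf{I})\operatorname{cov}(\mathbf{I},\mathbf{I})^{-1}\operatorname{cov}(\mathbf{I},\mathbf{Y})$. The sketch below assumes two hypothetical spectral bins whose signals are independent except that both scale linearly with a shared intensity. It shows the plain covariance picking up a spurious correlation that the partial covariance removes. The overcompensation mentioned above is not modelled.

```python
import numpy as np

def partial_covariance_map(X, Y, I):
    """pcov(X, Y | I) = cov(X, Y) - cov(X, I) cov(I, I)^{-1} cov(I, Y).

    X: (p, n), Y: (q, n), I: (r, n); column j is shot j. Rows of I are the
    fluctuating parameters to be factored out (e.g. pulse intensity).
    """
    p, q = X.shape[0], Y.shape[0]
    K = np.cov(np.vstack([X, Y, I]))     # joint sample covariance
    K_xy = K[:p, p:p + q]
    K_xi = K[:p, p + q:]
    K_iy = K[p + q:, p:p + q]
    K_ii = K[p + q:, p + q:]
    return K_xy - K_xi @ np.linalg.solve(K_ii, K_iy)

rng = np.random.default_rng(7)
n_shots = 5000
intensity = 1.0 + 0.3 * rng.standard_normal(n_shots)  # hypothetical pulse intensity
# Two bins that are independent apart from both scaling with the intensity.
a = 2.0 * intensity + 0.2 * rng.standard_normal(n_shots)
b = 3.0 * intensity + 0.2 * rng.standard_normal(n_shots)

X = np.vstack([a, b])
I = intensity[None, :]
plain = np.cov(X)[0, 1]                          # spurious correlation via intensity
partial = partial_covariance_map(X, X, I)[0, 1]  # intensity contribution removed
print(round(plain, 2), round(partial, 3))
```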

Two-dimensional infrared spectroscopy employs correlation analysis to obtain 2D spectra of the condensed phase.