Discrete-time Markov chain

The process continues forever, indexed by the natural numbers.

From this matrix, the probability of being in a particular state n steps in the future can be calculated.

Markov chains can have properties including periodicity, reversibility and stationarity.

A continuous-time Markov chain is like a discrete-time Markov chain, but it moves states continuously through time rather than as discrete time steps.

Other stochastic processes can satisfy the Markov property, the property that past behavior does not affect the process, only the present state.

A discrete-time Markov chain is a sequence of random variables

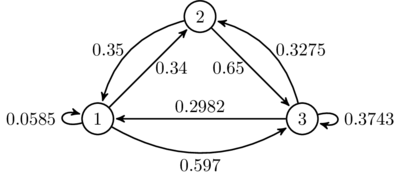

[1] Markov chains are often described by a sequence of directed graphs, where the edges of graph n are labeled by the probabilities of going from one state at time n to the other states at time n + 1,

However, Markov chains are frequently assumed to be time-homogeneous (see variations below), in which case the graph and matrix are independent of n and are thus not presented as sequences.

These descriptions highlight the structure of the Markov chain that is independent of the initial distribution

of the state space as input, or as the behavior of the machine with the initial distribution

[citation needed] The probability of going from state i to state j in n time steps is and the single-step transition is For a time-homogeneous Markov chain: and The n-step transition probabilities satisfy the Chapman–Kolmogorov equation, that for any k such that 0 < k < n, where S is the state space of the Markov chain.

The evolution of the process through one time step is described by (The superscript (n) is an index, and not an exponent).

[1] The set of communicating classes forms a directed, acyclic graph by inheriting the arrows from the original state space.

A communicating class is closed if and only if it has no outgoing arrows in this graph.

is the greatest common divisor) provided that this set is not empty.

[1] A state i is recurrent if and only if the expected number of visits to i is infinite:[1] Even if the hitting time is finite with probability 1, it need not have a finite expectation.

The mean recurrence time at state i is the expected return time Mi: State i is positive recurrent (or non-null persistent) if Mi is finite; otherwise, state i is null recurrent (or null persistent).

Considering a fixed arbitrary time n and using the shorthand the detailed balance equation can be written more compactly as The single time-step from n to n + 1 can be thought of as each person i having πi dollars initially and paying each person j a fraction pij of it.

The detailed balance condition states that upon each payment, the other person pays exactly the same amount of money back.

The assumption is a technical one, because the money not really used is simply thought of as being paid from person j to himself (that is, pjj is not necessarily zero).

As n was arbitrary, this reasoning holds for any n, and therefore for reversible Markov chains π is always a steady-state distribution of Pr(Xn+1 = j | Xn = i) for every n. If the Markov chain begins in the steady-state distribution, that is, if

Kolmogorov's criterion gives a necessary and sufficient condition for a Markov chain to be reversible directly from the transition matrix probabilities.

Reversible Markov chains are common in Markov chain Monte Carlo (MCMC) approaches because the detailed balance equation for a desired distribution π necessarily implies that the Markov chain has been constructed so that π is a steady-state distribution.

Even with time-inhomogeneous Markov chains, where multiple transition matrices are used, if each such transition matrix exhibits detailed balance with the desired π distribution, this necessarily implies that π is a steady-state distribution of the Markov chain.

According to the Frobenius Norm the closest reversible Markov chain according to

, then the closest reversible Markov chain according to the Frobenius norm is approximately given by A distribution

is a stationary distribution of the Markov chain with stochastic matrix

does exist for every integer r. A Markov chain need not necessarily be time-homogeneous to have an equilibrium distribution.

Such can occur in Markov chain Monte Carlo (MCMC) methods in situations where a number of different transition matrices are used, because each is efficient for a particular kind of mixing, but each matrix respects a shared equilibrium distribution.

[citation needed] For a subset of states A ⊆ S, the vector kA of hitting times (where element