Random variable

The term "random variable" in its mathematical definition refers to neither randomness nor variability[2] but instead is a mathematical function whose domain is the set of possible outcomes of a random experiment (the sample space). Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die; it may also represent uncertainty, such as measurement error.

The purely mathematical analysis of random variables is independent of such interpretational difficulties, and can be based upon a rigorous axiomatic setup.

It is possible for two random variables to have identical distributions but to differ in significant ways; for instance, they may be independent.
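As a small illustration (a sketch under stated assumptions, not taken from the source), let X be a fair coin toss coded as 0 or 1 and define Y = 1 − X. The names `omega`, `X`, `Y` and `distribution` are hypothetical. Both variables have the same distribution, yet they never take the same value on any outcome, and Y is completely determined by X rather than independent of it. An independent copy of X would also share its distribution while relating to X in a quite different way.

```python
from fractions import Fraction

# Sample space of a fair coin toss; each outcome has probability 1/2.
omega = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}

def X(outcome):
    """Random variable: 1 for heads, 0 for tails."""
    return 1 if outcome == "heads" else 0

def Y(outcome):
    """Random variable defined on the same sample space as X: Y = 1 - X."""
    return 1 - X(outcome)

def distribution(rv):
    """Probability of each value taken by the random variable rv."""
    dist = {}
    for outcome, p in omega.items():
        dist[rv(outcome)] = dist.get(rv(outcome), 0) + p
    return dist

same_distribution = distribution(X) == distribution(Y)
ever_equal = any(X(w) == Y(w) for w in omega)
print(same_distribution, ever_equal)
```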

According to George Mackey, Pafnuty Chebyshev was the first person "to think systematically in terms of random variables".

The term "random variable" in statistics is traditionally limited to the real-valued case.

This more general concept of a random element is particularly useful in disciplines such as graph theory, machine learning, natural language processing, and other fields in discrete mathematics and computer science, where one is often interested in modeling the random variation of non-numerical data structures.

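In practice, one often dispenses with the underlying sample space altogether and simply puts a measure on the real line that assigns measure 1 to the whole real line, i.e., one works with probability distributions instead of random variables.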

Mathematically, the random variable is interpreted as a function which maps the person to their height.

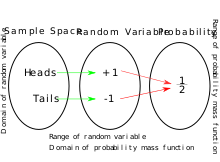

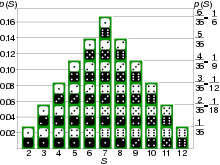

A random variable can also be used to describe the process of rolling dice and the possible outcomes.
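The following sketch makes this concrete for one assumed setup: two fair six-sided dice, with the random variable being the sum of the faces. The choice of two dice and the names `sample_space`, `total` and `pmf` are illustrative assumptions. The random variable is an ordinary function on the sample space, and its distribution is obtained by counting outcomes.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space: ordered pairs of faces from two fair six-sided dice.
sample_space = list(product(range(1, 7), repeat=2))

def total(outcome):
    """Random variable: maps an outcome (a pair of faces) to the sum of the faces."""
    return outcome[0] + outcome[1]

# Every outcome is equally likely, so probabilities come from counting.
counts = Counter(total(w) for w in sample_space)
pmf = {value: Fraction(n, len(sample_space)) for value, n in sorted(counts.items())}

for value, p in pmf.items():
    print(value, p)
```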

Instead, continuous random variables almost never take an exact prescribed value c (formally, $\Pr(X = c) = 0$ for every real number $c$).

An example of a continuous random variable would be one based on a spinner that can choose a horizontal direction.

However, it is commonly more convenient to map the sample space to a random variable that takes real numbers as values.

The random variable then takes values which are real numbers from the interval [0, 360), with all parts of the range being "equally likely".
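Under the assumption that "equally likely" means a uniform distribution on [0, 360), the probability of landing in an interval is its length divided by 360. The helper name `prob_in_interval` is hypothetical. The sketch also shows that a single exact angle has probability zero.

```python
def prob_in_interval(a, b):
    """P(a <= X <= b) for X uniform on [0, 360), assuming 0 <= a <= b < 360."""
    if not (0 <= a <= b < 360):
        raise ValueError("expected 0 <= a <= b < 360")
    return (b - a) / 360

quarter_turn = prob_in_interval(0, 90)   # a quarter of the circle
single_point = prob_in_interval(45, 45)  # an interval of zero length
print(quarter_turn, single_point)
```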

This exploits properties of cumulative distribution functions, which are a unifying framework for all random variables.

The most formal, axiomatic definition of a random variable involves measure theory.

Normally, a particular such σ-algebra is used, the Borel σ-algebra, which allows probabilities to be defined over any sets that can be derived either directly from continuous intervals of numbers or by a finite or countably infinite number of unions and/or intersections of such intervals.

The probability distribution of a random variable is often characterised by a small number of parameters, which also have a practical interpretation.

This is captured by the mathematical concept of expected value of a random variable, denoted $\operatorname{E}[X]$.

One can then ask how far from this average value the values of X typically are, a question that is answered by the variance and standard deviation of a random variable.
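For a discrete random variable these quantities reduce to weighted sums. The sketch below assumes a single fair six-sided die; the names `die_pmf` and `expectation` are hypothetical. It computes the expected value as the probability-weighted average of the values, and the variance as the expected squared deviation from that average.

```python
from fractions import Fraction

# Distribution of one fair six-sided die (an assumed example).
die_pmf = {face: Fraction(1, 6) for face in range(1, 7)}

def expectation(pmf, g=lambda x: x):
    """Expected value of g(X) for a discrete random variable X with the given pmf."""
    return sum(g(x) * p for x, p in pmf.items())

mean = expectation(die_pmf)
variance = expectation(die_pmf, lambda x: (x - mean) ** 2)
std_dev = float(variance) ** 0.5

print(mean, variance, std_dev)
```

Exact fractions are used so that the mean and variance are not affected by floating-point rounding; only the standard deviation is converted to a float.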

The expected value $\operatorname{E}[X]$ can be viewed intuitively as an average obtained from an infinite population, the members of which are particular evaluations of X.

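For example, for a categorical random variable X that can take on the nominal values "red", "blue" or "green", the real-valued function $[X = \text{green}]$ can be constructed; this uses the Iverson bracket and has the value 1 if X has the value "green" and 0 otherwise.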

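A new random variable Y can be defined by applying a real Borel measurable function $g\colon \mathbb{R} \to \mathbb{R}$ to the outcomes of a real-valued random variable X, that is, $Y = g(X)$.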

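If the function $g$ is invertible (i.e., $h = g^{-1}$ exists, where $h$ is $g$'s inverse function) and is either increasing or decreasing, then the previous relation can be extended to obtain

$$F_Y(y) = \Pr(g(X) \le y) = \begin{cases} \Pr(X \le h(y)) = F_X(h(y)), & \text{if } h \text{ is increasing}, \\ \Pr(X \ge h(y)) = 1 - F_X(h(y)), & \text{if } h \text{ is decreasing}, \end{cases}$$

where $F_X$ and $F_Y$ denote the cumulative distribution functions of $X$ and $Y$, and the last equality in the decreasing case assumes that $X$ has a continuous distribution.

With the same hypotheses of invertibility of $g$, and assuming also differentiability, the probability density functions are related by

$$f_Y(y) = f_X\bigl(h(y)\bigr) \left| \frac{dh(y)}{dy} \right|.$$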

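Here X is normally distributed with mean $\mu$ and variance $\sigma^2$, and $Y = X^2$. Unlike in the previous example, there is no symmetry in this case, and the two distinct terms have to be computed:

$$f_Y(y) = f_X\bigl(g_1^{-1}(y)\bigr) \left| \frac{dg_1^{-1}(y)}{dy} \right| + f_X\bigl(g_2^{-1}(y)\bigr) \left| \frac{dg_2^{-1}(y)}{dy} \right|.$$

The inverse transformation is

$$x = g_{1,2}^{-1}(y) = \pm\sqrt{y}$$

and its derivative is

$$\frac{dg_{1,2}^{-1}(y)}{dy} = \pm\frac{1}{2\sqrt{y}}.$$

Then,

$$f_Y(y) = \frac{1}{\sqrt{2\pi\sigma^2}} \, \frac{1}{2\sqrt{y}} \left( e^{-(\sqrt{y} - \mu)^2 / (2\sigma^2)} + e^{-(-\sqrt{y} - \mu)^2 / (2\sigma^2)} \right).$$

This is a noncentral chi-squared distribution with one degree of freedom (up to the scale factor $\sigma^2$).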
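The two-term density can be checked numerically. The sketch below assumes the setting that the noncentral chi-squared result implies, namely Y = X² with X normally distributed with mean μ and standard deviation σ. The function names and the parameter values are hypothetical. It compares the two-term formula with a finite-difference derivative of the cumulative distribution function of Y.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma**2)) / (sigma * math.sqrt(2 * math.pi))

def normal_cdf(x, mu, sigma):
    """Cumulative distribution function of the same normal distribution."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))

def density_of_square(y, mu, sigma):
    """Density of Y = X**2 at y > 0: one term per root, x = +sqrt(y) and x = -sqrt(y)."""
    root = math.sqrt(y)
    jacobian = 1 / (2 * root)  # absolute derivative of each inverse branch
    return (normal_pdf(root, mu, sigma) + normal_pdf(-root, mu, sigma)) * jacobian

def cdf_of_square(y, mu, sigma):
    """P(X**2 <= y) = P(-sqrt(y) <= X <= sqrt(y)) for y > 0."""
    root = math.sqrt(y)
    return normal_cdf(root, mu, sigma) - normal_cdf(-root, mu, sigma)

# Parameter values chosen only for this check.
mu, sigma, y, step = 1.0, 2.0, 1.5, 1e-5
numerical = (cdf_of_square(y + step, mu, sigma) - cdf_of_square(y - step, mu, sigma)) / (2 * step)
formula = density_of_square(y, mu, sigma)
print(abs(numerical - formula))
```

Agreement between the two values at a few points does not prove the formula, but it is a quick guard against sign or Jacobian mistakes in a hand derivation.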

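If the sample space is a subset of the real line, random variables X and Y are equal in distribution (denoted $X \stackrel{d}{=} Y$) if they have the same distribution functions:

$$\Pr(X \le x) = \Pr(Y \le x) \quad \text{for all } x.$$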

This provides, for example, a useful method of checking equality of certain functions of independent, identically distributed (IID) random variables.

However, the moment generating function exists only for distributions that have a defined Laplace transform.

It is associated with the following distance:

$$d_\infty(X, Y) = \operatorname{ess\,sup}_{\omega} |X(\omega) - Y(\omega)|,$$

where "ess sup" represents the essential supremum in the sense of measure theory.

Finally, the two random variables X and Y are equal if they are equal as functions on their measurable space:

$$X(\omega) = Y(\omega) \quad \text{for all } \omega.$$

This notion is typically the least useful in probability theory because in practice and in theory, the underlying measure space of the experiment is rarely explicitly characterized or even characterizable.

A significant theme in mathematical statistics consists of obtaining convergence results for certain sequences of random variables; for instance the law of large numbers and the central limit theorem.
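The law of large numbers can be observed in a simple simulation. The sketch below assumes repeated rolls of a fair six-sided die, whose expected value is 3.5; the function name `sample_mean_of_die_rolls` and the fixed seed are illustrative choices. As the number of rolls grows, the sample mean tends to settle near 3.5.

```python
import random

def sample_mean_of_die_rolls(n, seed=0):
    """Average of n simulated rolls of a fair six-sided die (seeded for repeatability)."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n):
        total += rng.randint(1, 6)  # randint includes both endpoints
    return total / n

for n in (10, 1_000, 100_000):
    print(n, sample_mean_of_die_rolls(n))
```

A simulation only illustrates the theorem for one sequence of rolls; it says nothing about the rate of convergence, which is the subject of results such as the central limit theorem.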