Earthquake prediction

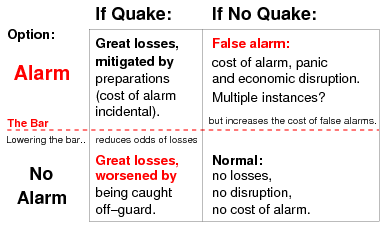

[14] As the purpose of short-term prediction is to enable emergency measures to reduce death and destruction, failure to give warning of a major earthquake that does occur, or at least to provide an adequate evaluation of the hazard, can result in legal liability or even political purging.

[18] In 1999 it was reported[19] that China was introducing "tough regulations intended to stamp out 'false' earthquake warnings, in order to prevent panic and mass evacuation of cities triggered by forecasts of major tremors."

[31] After a critical review of the scientific literature, the International Commission on Earthquake Forecasting for Civil Protection (ICEF) concluded in 2011 that there was "considerable room for methodological improvements in this type of research."

[35][36] Seismometers can also detect P waves, which travel faster than the more destructive S waves, and electronic earthquake warning systems exploit this difference in arrival times to give people a few seconds to move to a safer location.
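The size of that window can be estimated with back-of-the-envelope arithmetic. The sketch below is illustrative only: it assumes straight-line travel at constant speeds of 6 km/s for P waves and 3.5 km/s for S waves (typical crustal values chosen here, not taken from the source), a detecting station at some distance from the epicenter, and an optional processing delay. The function name and parameters are hypothetical.

```python
def warning_time_s(target_km, station_km, vp=6.0, vs=3.5, processing_s=0.0):
    """Seconds of warning at a site target_km from the epicenter (hypothetical helper).

    Assumes constant P and S speeds (km/s) along straight paths and that the alert
    is issued once the station nearest the epicenter (station_km away) records the P wave.
    """
    p_detect = station_km / vp   # time after origin when the P wave is first recorded
    s_arrive = target_km / vs    # time after origin when S-wave shaking reaches the site
    # Sites close to the epicenter fall in a "blind zone" with no usable warning.
    return max(0.0, s_arrive - p_detect - processing_s)


for d in (20, 50, 100):
    print(d, "km:", round(warning_time_s(d, station_km=10.0, processing_s=3.0), 1), "s")
```

Because S waves travel at roughly 60% of the P-wave speed in this model, the warning grows with distance, which is why the method yields only seconds to tens of seconds and nothing at all near the epicenter.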

A review of scientific studies available as of 2018 covering over 130 species found insufficient evidence to show that animals could provide warning of earthquakes hours, days, or weeks in advance.

[37] Statistical correlations suggest some reported unusual animal behavior is due to smaller earthquakes (foreshocks) that sometimes precede a large quake,[38] which, if small enough, may go unnoticed by people.

[40] Moreover, the vast majority of scientific reports in the 2018 review did not include observations showing that animals did not behave unusually when no earthquake was imminent, meaning the behavior was not established to be predictive.
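The missing control observations matter because predictive value depends on how often unusual behavior occurs with no earthquake following. A minimal sketch, using a hypothetical 2×2 tally of observations (the function and its inputs are illustrative, not from any study), computes the probability gain P(quake | unusual behavior) / P(quake); without the false-alarm and correct-negative counts, the gain cannot be computed at all.

```python
from typing import Optional


def probability_gain(hits: int, misses: int,
                     false_alarms: Optional[int],
                     correct_negatives: Optional[int]) -> Optional[float]:
    """Ratio of P(quake | unusual behavior) to the base rate P(quake).

    hits:              unusual behavior followed by a quake
    misses:            quake with no prior unusual behavior
    false_alarms:      unusual behavior with no quake (requires control observations)
    correct_negatives: normal behavior and no quake (requires control observations)
    Returns None when the control counts were never collected or the tally is degenerate.
    """
    if false_alarms is None or correct_negatives is None:
        return None  # predictive value is undefined without controls
    total = hits + misses + false_alarms + correct_negatives
    alarms = hits + false_alarms
    quakes = hits + misses
    if total == 0 or alarms == 0 or quakes == 0:
        return None
    p_quake_given_alarm = hits / alarms
    base_rate = quakes / total
    return p_quake_given_alarm / base_rate
```

With made-up counts, `probability_gain(8, 2, 2, 88)` gives a gain of about 8, while `probability_gain(8, 2, None, None)` returns `None`: reports that record only the cases where an earthquake followed leave the question unanswerable.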

Detection of variations in the relative velocities of the primary and secondary seismic waves (expressed as Vp/Vs) as they passed through a certain zone was the basis for predicting the 1973 Blue Mountain Lake (NY) and 1974 Riverside (CA) quakes.
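One standard way to measure Vp/Vs from arrival times is the Wadati diagram: for a common origin time, the S−P interval grows linearly with the P arrival time, and the slope of that line equals Vp/Vs − 1. The sketch below fits the slope by ordinary least squares; whether the 1973 and 1974 predictions used exactly this procedure is not stated here, and the function name is hypothetical.

```python
def vp_vs_wadati(tp, ts):
    """Estimate Vp/Vs from P and S arrival times at several stations (Wadati method).

    For a common origin time t0:  ts - tp = (Vp/Vs - 1) * (tp - t0),
    so the least-squares slope of (ts - tp) against tp equals Vp/Vs - 1.
    """
    n = len(tp)
    if n < 2 or n != len(ts):
        raise ValueError("need matching P and S picks from at least two stations")
    sp = [s - p for p, s in zip(tp, ts)]  # S-minus-P intervals
    mean_x = sum(tp) / n
    mean_y = sum(sp) / n
    sxx = sum((x - mean_x) ** 2 for x in tp)
    if sxx == 0:
        raise ValueError("P arrival times must differ between stations")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(tp, sp))
    return 1.0 + sxy / sxx
```

Tracking this ratio for repeated events in the same zone is what allows a change in Vp/Vs, the quantity the predictions relied on, to be detected over time.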

A waveguide for low HF radio frequencies up to 10 MHz is formed during the night (skywave propagation) as the F layer reflects these waves back to the Earth.

[109] In 2017, an article in the Journal of Geophysical Research showed that the relationship between ionospheric anomalies and large seismic events (M≥6.0) occurring globally from 2000 to 2014 depended on the presence of solar weather.

[110] A subsequent article in Physics of the Earth and Planetary Interiors in 2020 argued, on the basis of this statistical relationship, that solar weather and ionospheric disturbances are potential triggers of large earthquakes.

During the evaluation process, the background daily variation and the noise due to atmospheric disturbances and human activities are removed before the concentration of trends in the wider area of a fault is visualized.
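Removing a daily cycle is commonly done by comparing each sample with the typical value recorded at the same time of day. The sketch below is a generic version of that idea, not the specific processing used in the studies cited: it assumes an evenly sampled series whose first sample falls at the same time of day every day, and it handles only the daily variation, not atmospheric or human-made noise. The function name is hypothetical.

```python
from statistics import mean, pstdev


def remove_daily_cycle(values, samples_per_day=24):
    """Standardized anomalies after removing the mean daily cycle (hypothetical helper)."""
    if len(values) < samples_per_day:
        raise ValueError("need at least one full day of samples")
    # Group samples by position within the day (slot 0 = first sample of each day).
    slots = [values[k::samples_per_day] for k in range(samples_per_day)]
    slot_mean = [mean(s) for s in slots]
    slot_std = [pstdev(s) for s in slots]
    anomalies = []
    for i, v in enumerate(values):
        k = i % samples_per_day
        sd = slot_std[k]
        # Departure from the typical value at this time of day, in units of its spread.
        anomalies.append((v - slot_mean[k]) / sd if sd > 0 else 0.0)
    return anomalies
```

A purely periodic daily signal yields anomalies of zero everywhere, so only departures from the usual daily pattern remain to be mapped.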

[112][113][114][115] In a newer approach to explaining the phenomenon, NASA's Friedemann Freund has proposed that the infrared radiation captured by the satellites is not due to a real increase in the surface temperature of the crust.

According to this version, the emission results from the quantum excitation that occurs when positive charge carriers (holes), traveling from the deepest layers to the surface of the crust at a speed of 200 meters per second, chemically re-bond.
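The stated speed implies short transit times. Taking a starting depth of 20 km, a value assumed here only for illustration, the travel time would be

$$
t = \frac{d}{v} = \frac{20\,000\ \text{m}}{200\ \text{m/s}} = 100\ \text{s},
$$

that is, under two minutes.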

Since continuous plate motions cause the strain to accumulate steadily, seismic activity on a given segment should be dominated by earthquakes of similar characteristics that recur at somewhat regular intervals.

[131] For a given fault segment, identifying these characteristic earthquakes and timing their recurrence rate (or, conversely, their return period) should therefore inform us about the next rupture; this is the approach generally used in forecasting seismic hazard.
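The recurrence-interval reasoning can be sketched in a few lines: average the intervals between past characteristic events, add that mean to the date of the last event, and use the coefficient of variation (CV) of the intervals as a rough gauge of regularity (a CV near 0 means strictly periodic; a memoryless Poisson process gives a CV near 1). This is a deliberately naive illustration, not an operational hazard model, and the function name is hypothetical. The example input is the set of years usually cited for the moderate Parkfield earthquakes up to 1966.

```python
from statistics import mean, stdev


def recurrence_forecast(event_years):
    """Mean recurrence interval, its coefficient of variation, and a naive next date."""
    years = sorted(event_years)
    if len(years) < 3:
        raise ValueError("need at least three events to estimate interval scatter")
    intervals = [b - a for a, b in zip(years, years[1:])]
    mu = mean(intervals)
    cv = stdev(intervals) / mu  # 0 = strictly periodic, ~1 = Poisson-like
    return mu, cv, years[-1] + mu


mu, cv, next_year = recurrence_forecast([1857, 1881, 1901, 1922, 1934, 1966])
print(f"mean interval {mu:.1f} yr, CV {cv:.2f}, next expected about {next_year:.0f}")
```

With these inputs the mean interval is about 22 years and the naive next date falls near 1988; the next moderate Parkfield earthquake actually occurred in 2004.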

[134] The appeal of such a method is that the prediction is derived entirely from the trend, which supposedly accounts for the unknown and possibly unknowable earthquake physics and fault parameters.

This seriously undercuts the claim that earthquakes at Parkfield are quasi-periodic and suggests that the individual events differ sufficiently in other respects to call into question whether they share distinct characteristics.

Rouet-Leduc et al. (2019) suggested that the identified signal, previously assumed to be statistical noise, reflects the increasing emission of energy before its sudden release during a slip event.
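Studies of this kind characterize continuous seismic records through statistics computed over moving windows. A minimal sketch of one such feature, the windowed variance (used here as a simple proxy for radiated energy), assumes an evenly sampled signal; the window length, step, and choice of variance are illustrative assumptions, not the authors' exact feature set, and the function name is hypothetical.

```python
from statistics import pvariance


def windowed_variance(signal, window, step):
    """Variance of the signal in successive windows (a simple energy proxy)."""
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    if window > len(signal):
        return []
    return [pvariance(signal[i:i + window])
            for i in range(0, len(signal) - window + 1, step)]
```

A feature series that climbs steadily, as it does for a signal whose amplitude grows over time, is the kind of pattern that would be read as increasing energy emission ahead of a slip event.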

Using the Theano and TensorFlow software libraries, DeVries et al. (2018) trained a neural network that achieved higher accuracy in the prediction of spatial distributions of earthquake aftershocks than the previously established methodology of Coulomb failure stress change.
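For comparison, the Coulomb failure stress change resolves a stress-change tensor onto a specified receiver fault: ΔCFS = Δτ + μ′Δσn, where Δτ is the shear stress change in the slip direction, Δσn the normal stress change (tension positive, so unclamping raises ΔCFS), and μ′ an effective friction coefficient. The sketch below assumes μ′ = 0.4, a commonly used value, and a hypothetical function name. Its need for a receiver orientation is exactly the assumption the neural-network approach avoided.

```python
def coulomb_stress_change(dsigma, normal, slip, mu_eff=0.4):
    """Coulomb failure stress change on a receiver fault (hypothetical helper).

    dsigma: 3x3 symmetric stress-change tensor, tension positive (e.g. in MPa)
    normal: unit normal of the receiver fault plane
    slip:   unit slip direction lying in that plane
    mu_eff: effective friction coefficient (0.4 is an assumed, commonly used value)
    A positive result means the fault is brought closer to failure.
    """
    # Traction change on the plane: t_i = sum_j dsigma_ij * n_j
    traction = [sum(dsigma[i][j] * normal[j] for j in range(3)) for i in range(3)]
    d_normal = sum(t * n for t, n in zip(traction, normal))  # > 0 means unclamping
    d_shear = sum(t * s for t, s in zip(traction, slip))     # shear change along slip
    return d_shear + mu_eff * d_normal
```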

Notably, DeVries et al. (2018) reported that their model made no "assumptions about receiver plane orientation or geometry" and heavily weighted the change in shear stress, "sum of the absolute values of the independent components of the stress-change tensor," and the von Mises yield criterion.
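Two of the quantities named above can be computed directly from the stress-change tensor without choosing any fault orientation. The sketch below (hypothetical function name) returns the sum of the absolute values of the six independent components and the von Mises equivalent stress, √(3J₂), which is the scalar the von Mises yield criterion tests; the maximum shear stress change would additionally require principal stresses and is omitted.

```python
import math


def stress_change_features(d):
    """Orientation-independent scalars of a symmetric 3x3 stress-change tensor d."""
    # Sum of |independent components|: three normal plus three shear terms (i <= j).
    abs_sum = sum(abs(d[i][j]) for i in range(3) for j in range(i, 3))
    # Deviatoric part s = d - p*I, with p the mean normal stress.
    p = (d[0][0] + d[1][1] + d[2][2]) / 3.0
    s = [[d[i][j] - (p if i == j else 0.0) for j in range(3)] for i in range(3)]
    j2 = 0.5 * sum(s[i][j] ** 2 for i in range(3) for j in range(3))
    von_mises = math.sqrt(3.0 * j2)
    return abs_sum, von_mises
```

For a uniaxial stress change of 1, both values equal 1; for a pure shear change of 1, the sum is 1 while the von Mises stress is √3.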

They found, in a review of the literature, that earthquake prediction research using artificial neural networks has gravitated toward more sophisticated models amid increased interest in the area.

This event occurred during the Cultural Revolution, when "belief in earthquake prediction was made an element of ideological orthodoxy that distinguished the true party liners from right wing deviationists".

[178] Unfazed,[s] Brady subsequently revised his forecast, stating there would be at least three earthquakes on or about July 6, August 18 and September 24, 1981,[180] leading one USGS official to complain: "If he is allowed to continue to play this game ... he will eventually get a hit and his theories will be considered valid by many."

[ad] Coupled with indeterminate time windows of a month or more,[222] such predictions "cannot be practically utilized"[223] to determine an appropriate level of preparedness, whether to curtail usual societal functioning, or even whether to issue public warnings.

[af] He supported the idea (scientifically unproven) that volcanoes and earthquakes are more likely to be triggered when the tidal forces of the Sun and the Moon coincide to exert maximum stress on the Earth's crust (syzygy).
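How much the two tidal forces add when they coincide can be estimated from the leading-order tidal acceleration, roughly 2GMR/d³ for a body of mass M at distance d acting on a planet of radius R. The sketch below uses rounded standard values for the masses and mean distances (supplied here, not taken from the source) and a hypothetical function name; it quantifies only the alignment effect at syzygy and says nothing about whether tides trigger earthquakes.

```python
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
R_EARTH = 6.371e6    # mean Earth radius, m


def tidal_accel(mass_kg, distance_m, radius_m=R_EARTH):
    """Leading-order peak tidal acceleration at Earth's surface, 2 G M R / d^3."""
    return 2 * G * mass_kg * radius_m / distance_m ** 3


moon = tidal_accel(7.342e22, 3.844e8)   # mean Earth-Moon distance
sun = tidal_accel(1.989e30, 1.496e11)   # mean Earth-Sun distance
print(f"solar/lunar ratio {sun / moon:.2f}; "
      f"aligned tide is {1 + sun / moon:.2f} times the lunar tide alone")
```

Because tidal acceleration falls off with the cube of distance, the far more massive Sun produces a tide a little under half as strong as the Moon's, so alignment raises the combined tidal forcing by roughly half compared with the lunar tide alone.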

An area he mentioned frequently was the New Madrid seismic zone at the southeast corner of the state of Missouri, the site of three very large earthquakes in 1811–12, which he coupled with the date of 3 December 1990.

Browning's prediction received the support of geophysicist David Stewart,[ai] and the tacit endorsement of many public authorities in their preparations for a major disaster, all of which was amplified by massive exposure in the news media.

The M8 algorithm (developed under the leadership of Vladimir Keilis-Borok at UCLA) gained respect through its apparently successful predictions of the 2003 San Simeon and Hokkaido earthquakes.

[276] In 2021, a multitude of authors from a variety of universities and research institutes studying the China Seismo-Electromagnetic Satellite reported[277] that claims based on self-organized criticality, which hold that at any moment any small earthquake can eventually cascade into a large event, do not stand[278] in view of the results obtained to date by natural time analysis.
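Natural time analysis replaces clock time with the index of each event: the k-th of N events is assigned natural time χₖ = k/N and a weight pₖ = Qₖ/ΣQ proportional to its energy (or a similar size measure). The central quantity is the variance κ₁ = Σpₖχₖ² − (Σpₖχₖ)², which proponents report approaches about 0.070 as a system nears a critical state. The sketch below computes κ₁ for a list of event sizes; the function name is hypothetical and the choice of size measure is left to the caller.

```python
def natural_time_kappa1(energies):
    """Variance kappa_1 of natural time chi_k = k/N, weighted by normalized event sizes."""
    n = len(energies)
    total = sum(energies)
    if n == 0 or total <= 0:
        raise ValueError("need at least one event with positive size")
    p = [q / total for q in energies]            # normalized weights p_k
    chi = [(k + 1) / n for k in range(n)]        # natural time chi_k = k/N, k = 1..N
    m1 = sum(pk * ck for pk, ck in zip(p, chi))  # <chi>
    m2 = sum(pk * ck * ck for pk, ck in zip(p, chi))  # <chi^2>
    return m2 - m1 * m1
```

For N events of equal size, κ₁ works out to (N² − 1)/(12N²), which tends to 1/12 ≈ 0.083 for long sequences, so values drifting toward 0.070 indicate weight concentrating on particular events rather than a uniform spread.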