Gene prediction

Gene prediction is one of the key steps in genome annotation, following sequence assembly, the filtering of non-coding regions and repeat masking.

[3] Gene prediction is closely related to the so-called 'target search problem' investigating how DNA-binding proteins (transcription factors) locate specific binding sites within the genome.

[4][5] Many aspects of structural gene prediction are based on current understanding of underlying biochemical processes in the cell such as gene transcription, translation, protein–protein interactions and regulation processes, which are subject of active research in the various omics fields such as transcriptomics, proteomics, metabolomics, and more generally structural and functional genomics.

Once candidate DNA sequences have been determined, it is a relatively straightforward algorithmic problem to efficiently search a target genome for matches, complete or partial, and exact or inexact.

A high degree of similarity to a known messenger RNA or protein product is strong evidence that a region of a target genome is a protein-coding gene.

Thus, to collect extrinsic evidence for most or all of the genes in a complex organism requires the study of many hundreds or thousands of cell types, which presents further difficulties.

Despite these difficulties, extensive transcript and protein sequence databases have been generated for human as well as other important model organisms in biology, such as mice and yeast.

For example, the RefSeq database contains transcript and protein sequence from many different species, and the Ensembl system comprehensively maps this evidence to human and several other genomes.

New high-throughput transcriptome sequencing technologies such as RNA-Seq and ChIP-sequencing open opportunities for incorporating additional extrinsic evidence into gene prediction and validation, and allow structurally rich and more accurate alternative to previous methods of measuring gene expression such as expressed sequence tag or DNA microarray.

In the genomes of prokaryotes, genes have specific and relatively well-understood promoter sequences (signals), such as the Pribnow box and transcription factor binding sites, which are easy to systematically identify.

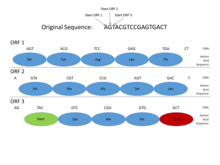

Also, the sequence coding for a protein occurs as one contiguous open reading frame (ORF), which is typically many hundred or thousands of base pairs long.

These characteristics make prokaryotic gene finding relatively straightforward, and well-designed systems are able to achieve high levels of accuracy.

Ab initio gene finding in eukaryotes, especially complex organisms like humans, is considerably more challenging for several reasons.

Two classic examples of signals identified by eukaryotic gene finders are CpG islands and binding sites for a poly(A) tail.

A typical protein-coding gene in humans might be divided into a dozen exons, each less than two hundred base pairs in length, and some as short as twenty to thirty.

Eukaryotic ab initio gene finders, by comparison, have achieved only limited success; notable examples are the GENSCAN and geneid programs.

They build a discriminative model using hidden Markov support vector machines or conditional random fields to learn an accurate gene prediction scoring function.

Neural networks are able to come up with approximate solutions to problems that are hard to solve algorithmically, provided there is sufficient training data.

Augustus, which may be used as part of the Maker pipeline, can also incorporate hints in the form of EST alignments or protein profiles to increase the accuracy of the gene prediction.

Pseudogenes are close relatives of genes, sharing very high sequence homology, but being unable to code for the same protein product.

Metagenomics tools also fall into the basic categories of using either sequence similarity approaches (MEGAN4) and ab initio techniques (GLIMMER-MG).

Glimmer-MG[24] is an extension to GLIMMER that relies mostly on an ab initio approach for gene finding and by using training sets from related organisms.